I’ve been mulling over a potential Microsoft Connect item regarding selectivity guess warnings, but I’m uncertain if it would have enough demand to drive product team attention. Selectivity guesses, which I talked a little about in the “Selectivity Guesses in absence of Statistics” post, are sometimes referred to as “magic numbers”, “heuristics” or just plain old QO guesses.

When a guess occurs, I think it would be interesting\helpful to know when it happens, especially considering the impact on query plan quality. And while I’ve seen a high number of SQL Server instances over the last 16 years, I couldn’t tell you how many had bad plans due to selectivity guesses. Assuming that there are supporting statistics – one would hope that selectivity guesses at the leaf-level of a plan should be infrequent. And yet I feel it would be nice to confirm this through an exposed mechanism when it happens at the non-leaf levels. For example – just knowing when the Query Optimizer says “ I don’t know what estimate to use for this Filter, so I’ll use a selectivity guess and put a warning in the plan.”

In theory, one could comb a plan’s XML and pull out the estimates vs. cardinality of the child operator (or if the leaf-level, the table cardinality). Then those numbers could be evaluated for potential matches on known patterns.

And before we begin, I just want to confirm that this is not to be considered an actual real-world solution. I’ll explain why later – but in the meantime just view these demo queries as a rough draft thrown together for use in exploring a proof-of-concept.

Let’s say you have a table that is missing statistics entirely – and auto-creation of statistics are disabled. This is an upstream issue that we know has a fix – but in this scenario, it has not been addressed. Now let’s take a sprawling query example that hits a few different patterns that would generate known selectivity guesses in absence of statistics:

SELECT [charge_no],

[member_no],

[provider_no],

[category_no],

SUM([charge_amt]) AS [total_charge_amt]

FROM (SELECT [charge_no],

[member_no],

[provider_no],

[category_no],

[charge_dt],

[charge_amt],

[statement_no],

[charge_code]

FROM dbo.[charge_guess]

WHERE [provider_no] = 500

UNION

SELECT [charge_no],

[member_no],

[provider_no],

[category_no],

[charge_dt],

[charge_amt],

[statement_no],

[charge_code]

FROM dbo.[charge_guess]

WHERE [statement_no] BETWEEN 500 AND 1000

UNION

SELECT [charge_no],

[member_no],

[provider_no],

[category_no],

[charge_dt],

[charge_amt],

[statement_no],

[charge_code]

FROM dbo.[charge_guess]

WHERE [charge_amt] < 100000

UNION

SELECT [charge_no],

[member_no],

[provider_no],

[category_no],

[charge_dt],

[charge_amt],

[statement_no],

[charge_code]

FROM dbo.[charge_guess]

WHERE [category_no] LIKE 10) AS t

GROUP BY [charge_no],

[member_no],

[provider_no],

[category_no]

HAVING SUM([charge_amt]) > 3999.99;

GO

Now that this is in cache, let me extract the different operators and associated attributes into a temporary table:

WITH XMLNAMESPACES

(DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')

SELECT s.[value]('@NodeId', 'int') AS [NodeId],

s.[value]('@PhysicalOp', 'nvarchar(128)') AS [PhysicalOp],

s.[value]('@EstimateRows', 'real') AS [EstimateRows],

s.[value]('@TableCardinality', 'real') AS [TableCardinality],

c.[plan_handle],

q.[query_plan],

t.text

INTO #ProcessResults

FROM sys.dm_exec_cached_plans AS [c]

CROSS APPLY sys.dm_exec_query_plan(plan_handle) AS [q]

CROSS APPLY query_plan.nodes('//RelOp') AS batch(s)

CROSS APPLY sys.[dm_exec_sql_text](c.plan_handle) AS [t]

WHERE t.text LIKE '%provider_no%'

OPTION(MAXDOP 1, RECOMPILE);

GO

Next – let me look at a few common selectivity guess percentages:

-- Common guesses - not all inclusive! SELECT plan_handle, NodeId, PhysicalOp, EstimateRows AS EstimateRows, COALESCE(TableCardinality, LAG(EstimateRows, 1,0) OVER (ORDER BY NodeId DESC)) AS Cardinality, CASE CAST(EstimateRows / COALESCE(TableCardinality, LAG(EstimateRows, 1,0) OVER (ORDER BY YEAR(NodeId))) AS numeric (24,4)) WHEN 0.0316 THEN 'Potential Selectivity Guess' WHEN 0.09 THEN 'Potential Selectivity Guess' WHEN 0.30 THEN 'Potential Selectivity Guess' WHEN 0.10 THEN 'Potential Selectivity Guess' ELSE '-' END AS 'Potential Selectivity Guess?' FROM [#ProcessResults] AS pr ORDER BY NodeId DESC;

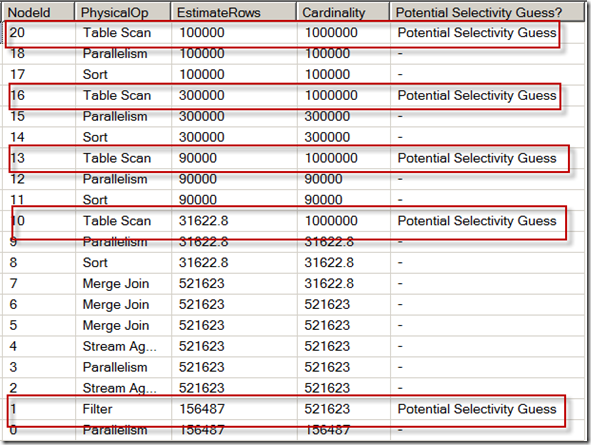

Looking at the results, I can see a few patterns for applicable operators that may be doing selectivity guesses:

So the demo query found where the guesses were originating at the leaf level for table scans due to four different expression patterns – and also caught a potential selectivity guess for the Filter operator further down towards the root of the plan.

This solution is imperfect though:

- I’m just pulling a few different known selectivity guess percentages. I don’t know all the scenarios and I’m definitely missing some of them. Not to mention the fact that some of the potential predicates would involve more sophisticated evaluation in order to detect potential selectivity guesses.

- Each Table Scan also had a “Columns With No Statistics” warning (at Node 20, 16, 13, and 10). I would argue that this would be a good red flag in and of itself.

So why even raise this discussion in the first place?

- While the “Columns With No Statistics” warning surfaces for my table access, it didn’t surface for the Filter operator (Node 1).

- There are scenarios in the intermediate levels of a plan where I would love to know when the query optimizer has resorted to pure guesses.

Again, I’m not entirely sure a Connect item would be anything but a symbolic act – and perhaps justly so – but I’m curious to hear from those of you who have confirmed selectivity guess issues for their plans – and most particularly for scenarios where there aren’t other symptoms (for example, no associated warnings that might tip you off to an upstream issue).

3 thoughts on “Detecting Selectivity Guesses”

Interesting stuff. Assuming all tables in a database have just had indexes rebuilt and all tables have a clustered index, all stats should be as up to date and as complete as possible. In such a case, if the query grant requests are far larger than the the query memory actually used, the only things I can think of to bloat the query memory grant requests would be missing indexes, selectivity guesses, optimizer timeouts, or the lack of some needed rules like those in trace flag 2301.

Am I on the right track? This is a test system situation I am experiencing right now… by using resource governor override and setting query memory limit to 5% instead of default we reached a higher level of query concurrency. Counter-intuitive 🙂

Thanks Lonny,

Regarding excessive query memory grants – look for major skews between estimated rows and actual rows for the memory-requiring operators in the plan. If the row count estimate is higher for that memory-requiring operator, then yes, you could correlate the memory grant to that inflated row count. And if the row count is inflated, this could be caused by a number of reasons (stats, predicate complexity, etc.). But otherwise I do see natural differences between desired/required memory grant sizes. The calculation for desired/required isn’t public as far as I’ve been able to see.

And regarding your RG test – given the memory grant requirements and semaphores, I could actually see this helping query concurrency. Are you seeing it have an influence on the actual memory grant sizes in your tests?

In the database we have auto create, auto update, auto update async stats. No indexes with rorecompute stats. Some huge tables, some small, lots of midsize. Over 10 thousand tables 🙂 The stats that exist are perfect – all indexes were rebuild and a fullscan on stats update also (this was partly to test differences between hard drives and SSD for maintenance tasks like stats update, index rebuild, dbcc checkdb.

So bad existing stats don’t seem like they could be an issue.

We have, unfortunately, massively complex predicates.

The RG testing actually did show large changes in query grant size. We had some queries previously requesting 7.5 gb of query memory (not every using it per perfmon grated workspace memory trended against stolen memory), they’re requesting well under 500 mb now. We’ll start looking at the query plans to see if we’ve forced query plan changes or just forced a better fit between actual query memory and query memory grant.

Comments are closed.