For Day 9 of this series, I want to talk about processor cache size and its relationship to SQL Server 2012 performance.

Cache Size and the Importance of the L2 and L3 Caches

All Intel-compatible CPUs have multiple levels of cache. The Level 1 (L1) cache has the lowest latency (i.e. the shortest delays associated with accessing the data), but the least amount of storage space, while the Level 2 (L2) cache has higher latency, but is significantly larger than the L1 cache. Finally, the Level 3 (L3) cache has the highest latency, but is even larger than the L2 cache. In many cases, the L3 cache is shared among multiple processor cores. In older processors, the L3 cache was sometimes external to the processor itself, located on the motherboard.

Whenever a processor has to execute instructions or process data, it searches for the data that it needs to complete the request in the following order:

1. internal registers on the CPU

2. L1 cache (which could contain instructions or data)

3. L2 cache

4. L3 cache

5. main memory (RAM) on the server

6. any cache that may exist in the disk subsystem

7. actual disk subsystem

The further the processor has to follow this data retrieval hierarchy, the longer it takes to satisfy the request, which is one reason why cache sizes on processors have gotten much larger in recent years. Table 1 shows the typical size and latency ranges for these main levels in the hierarchy.

| L1 Cache | L2 Cache | L3 Cache | Main Memory | Disk |

| 32K size | 256K size | 20MB size | 256GB size | Terabyte size |

| 2ns latency | 4ns latency | 6ns latency | 50ns latency | 15ms latency |

Table 1: Data Retrieval Hierarchy for a Modern System

For example, on a new server using a 22nm Intel Ivy Bridge processor, you might see an L1 cache latency of around 2 nanoseconds (ns), L2 cache latency of 4 ns, L3 cache latency of 6 ns, and main memory latency of 50 ns. When using traditional magnetic hard drives, going out to the disk subsystem will have an average latency measured in milliseconds. A flash based storage product (like a Fusion-io card) would have an average latency of around 25 microseconds. A nanosecond is a billionth of a second; a microsecond is a millionth of a second, while a millisecond is a thousandth of a second. Hopefully, this makes it obvious why it is so important for system performance that the data is located as short a distance down this retrieval chain as possible.

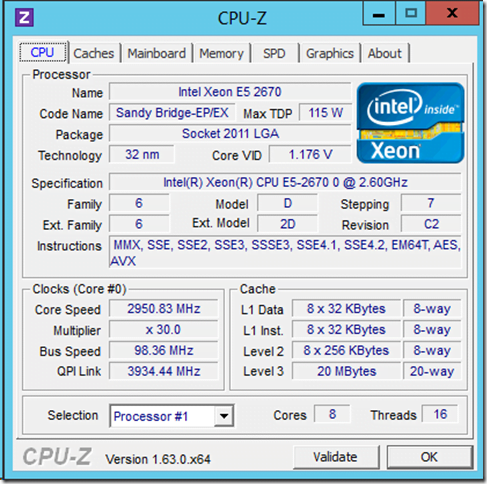

The performance of SQL Server, like most other relational database engines, has a huge dependency on the size of the L2 and L3 caches. Most processor families will offer processor models with a range of different L2 and L3 cache sizes, with the cheaper processors having smaller caches and, where possible, I advise you to favor processors with larger L2 and L3 caches. Given the business importance of many SQL Server workloads, economizing on the L2 and L3 cache size is not usually a good choice. Figure 1 shows information about the caches in an Intel Xeon E5-2670 processor in the bottom right corner.

Figure 1: CPU-Z Showing Cache Size Information

If the hardware budget limit for your database server dictates some form of compromise, then I suggest you opt to initially economize on RAM in order to get the processor(s) you really want. My experience as a DBA suggests that it’s often easier to get approval for additional RAM, at a later date, than it is to get approval to upgrade a processor. Most of the time, you will be “stuck” with the original processor(s) for the life of the database server, so it makes sense to get the one you need when you first buy the server.

You do have to be keenly aware of your total physical core counts as you select a processor for SQL Server 2012 Enterprise Edition, since you will have to pay for each core license.

2 thoughts on “A SQL Server Hardware Tidbit a Day – Day 9”

Glenn, great series.

On this one, I’d add that the closer cache levels are becoming increasingly more important in two major ways: NUMA and the emergence of technologies such as Bw-trees.

With both, if we can keep the workload on the same processor group into which the data were loaded originally (e.g. cache pages), we are guaranteed the fastest possible access. Causing different data sets to be moveded in and out of the cache or causing “foreign” data transfers from one CPU group to another all limit us.

The NUMA setup and CPU affinity options for SQL are really key here. Also, with both VMware and Hyper-V, you can now give a VM NUMA awareness and affinity. All important things to consider!

Not sure if CPU-Z gives you the same output somewhere, but coreinfo.exe from sysinternals definitely helps in identifying the NUMA-ness of an architecture, particularly when run from inside a VM.

FYI – the link to the Bw-tree paper isn’t particular visible, so click here for more info: Bw-tree paper from Microsoft Research.