This post is a response to this month’s T-SQL Tuesday #118 prompt by Kevin Chant. T-SQL Tuesday is a way for the SQL Server Community to share ideas about different database and professional topics every month. This month’s topic asks about our fantasy SQL Server feature.

Introduction

It may come as no surprise, but my fantasy SQL Server feature is related to hardware and storage. This is a general idea that I have had for many years, and one that I have brought up informally with some fairly senior people at Microsoft in the past.

Essentially, I think it would be very useful if SQL Server had some sort of internal benchmarking/profiling utility that could be run so that SQL Server could measure the relative and actual performance of the hardware and storage that it was running on. Then, the results of these tests could be used to help the SQL Server Query Optimizer make better decisions about what sort of query plan to use based on that information.

For example, depending on the actual measured performance of a processor core (and the entire physical processor) from different perspectives, such as integer performance, floating point performance, AVX performance, etc., it might make more sense to favor one query operator over another for certain types of operations. Similarly, knowing the relative and actual performance of the L1, L2, and L3 caches in a processor might be useful in the same way.

Going deeper into the system, knowing the relative and actual performance of the DRAM main memory (and PMEM) in terms of latency and throughput seems like it would be useful information for the Query Optimizer to have. Finally, understanding the relative and actual performance of the storage subsystem in terms of latency, IOPS, and sequential throughput would probably be pretty useful in some situations.
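To make the idea of a built-in measurement pass a little more concrete, here is a minimal Python sketch of two such probes: an in-memory copy rate and a sequential write rate to a temporary file. The function names, buffer sizes, and repeat counts are all assumptions chosen for illustration. This is not how SQL Server measures anything, and a real utility would also need to probe latency, IOPS, cache behavior, and NUMA effects.

```python
import os
import tempfile
import time


def measure_memory_throughput(size_bytes=64 * 1024 * 1024, repeats=5):
    """Return the best observed in-memory copy rate in bytes per second."""
    source = bytearray(size_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        _copy = bytes(source)  # reads and writes the whole buffer once
        best = min(best, time.perf_counter() - start)
    # Guard against a zero elapsed time on very small buffers
    return size_bytes / max(best, 1e-9)


def measure_sequential_write(size_bytes=32 * 1024 * 1024, block_size=1024 * 1024):
    """Return the sequential write rate in bytes per second, including fsync."""
    block = os.urandom(block_size)
    fd, path = tempfile.mkstemp()
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            written = 0
            while written < size_bytes:
                handle.write(block)
                written += block_size
            handle.flush()
            os.fsync(handle.fileno())  # make sure the data reaches the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return written / max(elapsed, 1e-9)


if __name__ == "__main__":
    print(f"memory copy:      {measure_memory_throughput() / 1e9:.2f} GB/s")
    print(f"sequential write: {measure_sequential_write() / 1e6:.0f} MB/s")
```

The numbers from a quick probe like this are noisy and depend on caching and on whatever else the system is doing, which is one reason a production-quality version would be a significant piece of engineering.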

Windows Experience Index

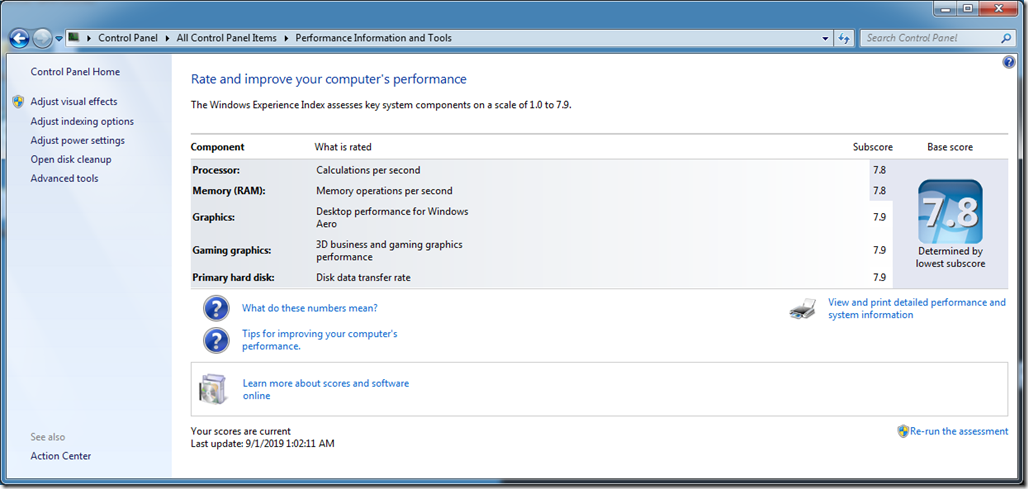

A historical, consumer-facing example is the old Windows Experience Index in Windows 7, which ran a quick series of benchmarks to measure the processor, memory, graphics, gaming graphics, and primary hard disk performance of a system. The resulting scores (in Windows 7) could range from 1.0 to 7.9. The scores for my old 2012-vintage Intel Core i7-3770K desktop system are shown in Figure 1. The purpose of these scores (beyond bragging rights) was to help consumers make better decisions about possible upgrade choices for different system components, or simply to understand what the biggest bottlenecks were in their existing system. They were also used as a quick way to compare the relative performance of different systems in a store.

Figure 1: Windows Experience Index Scores on Windows 7

Azure Experience Index

My idea is to have something similar from a SQL Server perspective, which could optionally be used by the SQL Server Query Optimizer (and any other part of SQL Server) to make better decisions based on the actual, measured performance of the key components of the system it is running on. This would be useful no matter how SQL Server was deployed, whether it was running bare-metal on-premises, in an on-premises VM, in a container, in an Azure VM, in Azure SQL Database, or in SQL Managed Instance. It would also work in any other cloud environment, and on any supported operating system for SQL Server.

Despite what you might hear, the details of the hardware and storage that your SQL Server deployment is running on make a significant difference to the performance and scalability you are going to see. This includes low-level geeky things like the exact processor (and all of its performance characteristics), the exact DRAM modules (and their number and placement), the NUMA layout, the exact type and configuration of your storage, your BIOS/UEFI settings, your hypervisor settings, etc. There are so many possible layers and configuration options in a modern system that it can be quite overwhelming.

Based on source code-level knowledge of what SQL Server does in general and how the Query Optimizer works, along with all of the performance telemetry that is collected by Azure SQL Database, Microsoft should be able to determine some relationships and tendencies that tie the actual measured performance of the main components of a system (as it is currently configured as a whole) to common SQL Server query operations.

For example, as an imaginary possibility, perhaps the performance of a hash match is closely related to integer CPU performance, along with L1, L2, and L3 cache latency, and DRAM latency and throughput. Different systems, based on differences in the exact CPU, BIOS settings, memory type and speed, etc., might have significantly different performance for a hash match, to the point that the Query Optimizer would want to take that into account when choosing the operators for a query plan. Perhaps it could be called Hyper-Adaptive Cognitive Query Processing…
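In the same imaginary spirit, here is a toy Python sketch of how measured hardware scores could tilt an operator choice. Everything in it is hypothetical: the `HardwareProfile` fields, the baseline cost numbers, and the two-operator comparison are invented for illustration, and none of it reflects how the real Query Optimizer costs a plan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareProfile:
    """Relative speed versus a reference machine (2.0 means twice as fast)."""
    integer_cpu: float = 1.0
    memory_latency: float = 1.0
    random_io: float = 1.0


# Hypothetical baseline cost units per operator, split by the resource consumed.
BASELINE_COSTS = {
    "hash_match": {"integer_cpu": 6.0, "memory_latency": 4.0, "random_io": 0.0},
    "nested_loops": {"integer_cpu": 2.0, "memory_latency": 1.0, "random_io": 9.0},
}


def adjusted_cost(operator, profile):
    """Scale each cost component down by the measured speed of its resource."""
    parts = BASELINE_COSTS[operator]
    return sum(cost / getattr(profile, resource) for resource, cost in parts.items())


def cheapest_operator(profile):
    """Pick the operator with the lowest hardware-adjusted cost."""
    return min(BASELINE_COSTS, key=lambda op: adjusted_cost(op, profile))


if __name__ == "__main__":
    reference = HardwareProfile()
    fast_storage = HardwareProfile(random_io=20.0)
    print(cheapest_operator(reference))     # hash_match (10.0 vs 12.0)
    print(cheapest_operator(fast_storage))  # nested_loops (3.45 vs 10.0)
```

With the made-up numbers above, the reference machine favors the hash match, while a machine whose random I/O is 20 times faster flips the choice to nested loops, because the I/O component of that operator shrinks to 1/20 of its baseline cost.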

Even if that level of tuning wasn’t immediately possible, having a deeper understanding of what specific performance characteristics were the most critical to common query operations for different query workloads would help Microsoft make better decisions on what processor, memory, and storage specifications and configuration settings work the best together for different workloads. This could potentially save huge amounts of money in Azure Data Centers. Microsoft can custom design/build hardware as part of the Open Compute Project, and they can get custom server processor SKUs with their desired performance characteristics from AMD and Intel to take advantage of this type of knowledge. They can also configure everything else in the system just as they desire for a particular workload.

Obviously, this is a complicated idea that would take significant resources to develop. I’m sure that Microsoft has other development priorities, but this is my fantasy feature, and I’m sticking with my story.

3 thoughts on “T-SQL Tuesday #118 My Fantasy SQL Server Feature”

I like this idea.

Compare the I/O and CPU to the late-1990s workstation that the optimizer bases its scores on.

Say today's disk performance is 20 times better than the old one. Then your disk I/O should be costed at 5% of the baseline. The cost threshold would have to be adjusted too, to reflect hardware that is better than what was available in the late 1990s.

Thanks! Microsoft says that it uses a “cost-based optimizer”, so having a more accurate understanding of what different operations actually cost on the system you are running on seems like it would be a good idea, at least to me.

It might get a little more complicated with the Intel bugs, meaning that hyper-threading could be off or on, but I'm sure a good scan would bring up your server's performance scores for I/O and CPU. The cost threshold adjustment could become a bit of a crapshoot. If you happen to be running under, say, VMware and got moved through vMotion, you would need a re-scan.

I’m sure it could be done. The Microsoft Tiger Team has many talented people.