In last week’s post I discussed the basics of how automatic updates to statistics occur in SQL Server. This week I want to talk about scheduled (aka manual) updates, because as you might remember, we really want to control when statistics are updated.

In terms of updating statistics you have multiple options, including:

- Update Statistics Task (Maintenance Plan)

- sp_updatestats

- UPDATE STATISTICS

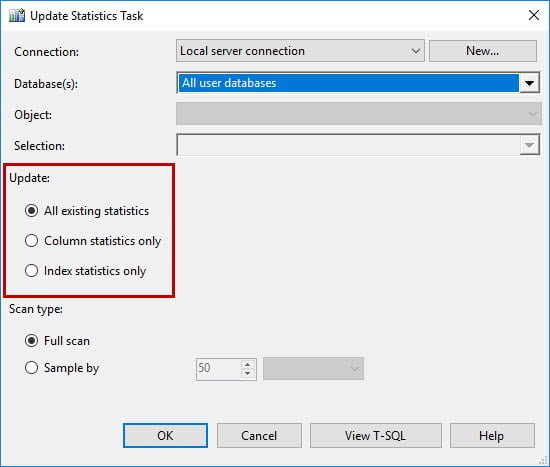

For systems that do not have a full-time DBA, one of the easiest methods for managing statistics is the Update Statistics Task. This task can be configured for all databases or certain databases, and you can determine what statistics it updates:

You might think you want to update All existing statistics. If you just had a plan with just this task, that might be true. But what I see most often is that someone configures the Rebuild Index task, and then has the Update Statistics task as the next step. In that case, if you are running SQL Server 2014 and below, you want to update Column statistics only. When you run the Rebuild Index task in SQL Server 2014, you rebuild all indexes, and when you rebuild an index, its statistic is updated with a fullscan. Therefore, there is no need to update Index statistics after you rebuild all your indexes, but you do need to update column statistics.

This is a bit more complicated in SQL Server 2016. The Rebuild Index task has more options in SQL Server 2016, which is nice for that specific task, but it makes providing guidance about statistics updates a bit trickier. In SQL Server 2016 you can configure the Rebuild Index task so that it only rebuilds an index if a certain level of fragmentation exists. Therefore, some of your indexes will rebuild (and thus have statistics updated) and some will not (and not have updated statistics). How do you manage that with the Update Statistics task? Well, in that case you probably select All existing statistics and update some statistics for a second time, which is really a waste. Therefore, if you’re on SQL Server 2016, you probably want to look at more intelligent updates.

One method, which I would not say is intelligent, but it is an option, is to use sp_updatestats in a scheduled job that runs on a regular basis. This command is one you run for a database, not for a specific statistic or index or table. The sp_updatestats command will only update statistics if data has changed. That sounds good, but the caveat is that only one (1) row has to have changed. If I have a table with 2,000,000 rows, and only 5 rows have changed, I really don’t need to update statistics.

The other method is to use UPDATE STATISTICS in a scheduled job. The UPDATE STATISTICS command can be run for individual statistics or for a table (updating all statistics for a table). You can develop an intelligent method to use this command, which is what I recommend. Rather than a blanket update to all statistics, or statistics where one row has changed, I prefer to update statistics that are outdated based on the amount of data that has changed. Consider the aforementioned table with 2,000,000 rows. If I let SQL Server update statistics automatically, I would need 400,500 rows to change. It’s quite possible that with a table of that size I would want to statistics to update sooner – say after 200,000 rows had changed, or 10% of the table.

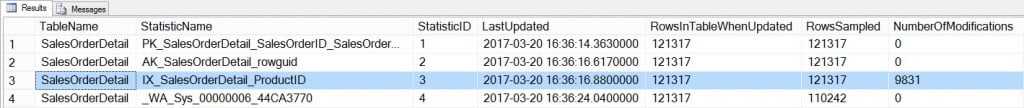

We can programmatically determine whether we need to update statistics using the sys.dm_db_stats_properties DMF. This DMF tracks modifications, and also tells us how many rows were in the table when statistics were last updated, and the date statistics were updated. For example, if I update some rows in Sales.SalesOrderDetail, and then look at the output from the DMF, you can see that the modification counter matches the number of rows I changed* for the ProductID index:

USE [AdventureWorks2012]; GO UPDATE [Sales].[SalesOrderDetail] SET [ProductID] = [ProductID] WHERE [ProductID] IN (921,873,712); GO SELECT [so].[name] [TableName], [ss].[name] [StatisticName], [ss].[stats_id] [StatisticID], [sp].[last_updated] [LastUpdated], [sp].[rows] [RowsInTableWhenUpdated], [sp].[rows_sampled] [RowsSampled], [sp].[modification_counter] [NumberOfModifications] FROM [sys].[stats] [ss] JOIN [sys].[objects] [so] ON [ss].[object_id] = [so].[object_id] CROSS APPLY [sys].[dm_db_stats_properties] ([so].[object_id], [ss].stats_id) [sp] WHERE [so].[name] = N'SalesOrderDetail'; GO

*You’re correct, I technically didn’t change ProductID to a new value, but SQL Server doesn’t know that. Also, there’s a foreign key on that column which is why I can’t easily change it a random number.

Armed with this type of data, we can intelligently decide whether we should update statistics because a percentage of rows (rather than just a fixed number of rows) have changed. In the example above, only 8% of data changed – probably not enough to require me to update statistics. It’s quite possible that some statistics need to be updated daily because there is a high rate of change, and other statistics only need to be updated weekly or monthly because data doesn’t change much at all.

Ultimately, when it comes to scheduled updates of statistics, you can go the sledgehammer route (Update Statistics task or sp_updatestats) or the selective update route (UPDATE STATISTICS and sys.dm_db_stats_properties). Using the Update Statistics task or sp_updatestats is easier if you’re not familiar with SQL Server and if you have the maintenance window and resources for it to run. To be perfectly clear: if you’re a system administrator and want to update statistics, I’d rather you use this approach than nothing at all. Presumably, if you don’t have a full-time DBA, you also don’t need the system to be available 24×7, so you can take the performance hit at night or on the weekend while all statistics update. In that situation I’m ok with the approach.

But, if you are a DBA and you know how to write T-SQL, then you can absolutely write some code that programmatically looks at your statistics and decides what to update and what to skip. Whatever method you use, just make sure your updates are scheduled to run regularly through an Agent Job, and make sure you have Auto Update Statistics enabled just in case the job doesn’t run and you don’t get notified for some reason (this would be Plan B, because it’s always good for DBAs to have a Plan B!).

Additional Resources

4 thoughts on “SQLskills SQL101: Updating SQL Server Statistics Part II – Scheduled Updates”

Thanks for the article Erin; good information, as always.

I notice that sys.dm_db_stats_properties() suffers from the same problem in SQL 2016 as it did in previous versions (and as sysindexes.rowmodctr did too) in that it tracks the number of row modifications rather than the number of distinct rows modified. (I call it a problem, because in my view it is, even though this behaviour is no doubt by design.) This makes it impossible to tell the difference between a single row in a million row table being updated a million times and every row in a million row table being updated once each; in the first instance you wouldn’t need to update the associated stats, and in the second instance you probably would. Without being able to distinguish between the two, it makes it near impossible to make wise decisions (in code) about whether or not to update specific column or index statistics.

Have you heard anything from Microsoft or in your professional circles that would suggest Microsoft are planning to address this behaviour anytime soon?

Cheers,

Mike

Hi Mike-

I don’t have any insight as to whether Microsoft will change the way it determines modifications. But, consider what would have to happen to track modifications *per* row. Right now SQL Server just tracks a change. What you’re suggesting would mean that it would have to track *which row* changed and how many times. How expensive is that? For a small system, not bad, but for a large, high-volume environment with thousands of transactions per second (and potentially thousands of modifications per second), you’re adding overhead by trying to monitor what row has changed. I think submitting a Connect item requesting the change is worthwhile, but I’ll be honest in that I don’t see that being implemented easily or soon. I understand that tracking *just* modifications may not be as reliable in terms of your stats updates, and then it comes down to knowing the application. If you have large tables where ONLY one row is updated, I’d be really interested to understand the logic there. Is this something you see frequently in the databases you support?

Erin

Erin

Thanks Erin,

This is exactly what I needed to replace the SQL2000 vintage update stats job on my servers. 😉

Of course the real problem is picking a trigger point. For example, I have a Partitioned View that contains rolling, date-based sub-tables. Each week a new table is created and added to the view. The table receives about 30GB of data in 225M Rows at a pretty constant rate 24X7. The query references are almost all Insert or Select (minimal updates -no deletes). After a few weeks the table is rolled out of the view, truncated, and dropped.

The data is keyed by a combination of a sort of device number (Int, TinyInt) (about 10,000 discrete devices) and a generally increasing datetime.

I am thinking of just tracking the #Rows in the sub-tables in the meta-data that controls this process and then update stats after ?? rows are added.

Any thoughts?

Ray-

With a table created each week, then you should just have to monitor that one for modifications, correct? If so, then yes, I would just look at the most recent one or two tables and look at modifications, and maybe do an update based on number of rows AND percent changed. Meaning, if you’re adding 225M total, you don’t want to update stats every 1 million rows. You want to update stats when a percentage of data has changed…and I would guess that over time, even if you change 10% of the data (22 million rows in a “worst case” scenario), you probably don’t need to update stats because unless something REALLY crazy happened, you’re not changing the distribution of data that much. So, when the first maybe 10-20 million rows are added, I expect you’d update more frequently than you would once you have 100 million rows. Does that make sense?

Erin