In this blog post we’ll explore columnstore index batch execution mode limits. I’m using SQL Server 2012 Developer Edition, version 11.0.3349 (SP1, CU3).

To start off with, I’ll create a table that uses six different supported nonclustered columnstore index data types:

USE [CS]; GO CREATE TABLE dbo.[FactContrived] ( col00 TINYINT NOT NULL DEFAULT 255, col01 SMALLINT NOT NULL DEFAULT 32767, col02 INT NOT NULL DEFAULT 2147483647, col03 BIGINT NOT NULL DEFAULT 9223372036854775807, col04 SMALLMONEY NOT NULL DEFAULT 214748.3647, col05 MONEY NOT NULL DEFAULT 922337203685477.5807); GO

I’ll populate this table with 1,048,576 rows, all using the same value for each row (contrived by-design):

SET NOCOUNT ON; GO INSERT dbo.[FactContrived] DEFAULT VALUES; GO INSERT dbo.[FactContrived] SELECT [col00], [col01], [col02], [col03], [col04], [col05] FROM dbo.[FactContrived]; GO 20

Next I’ll create a nonclustered columnstore index on each column in the table:

CREATE NONCLUSTERED COLUMNSTORE INDEX [NCI_FactContrived] ON dbo.[FactContrived] ([col00], [col01], [col02], [col03], [col04], [col05] ) WITH (MAXDOP = 1); GO

Once created, I’ll check the page count statistics with the following query:

SELECT [partition_id],

SUM([in_row_used_page_count]) AS [in_row_used_page_count],

SUM([lob_used_page_count]) AS [lob_used_page_count],

SUM([row_count]) AS [row_count]

FROM sys.[dm_db_partition_stats]

WHERE [object_id] = OBJECT_ID('FactContrived') AND

[index_id] = INDEXPROPERTY

(OBJECT_ID('FactContrived'),

'NCI_FactContrived',

'IndexId')

GROUP BY [partition_id];

GO

This query returns the following results:

So as expected, the nonclustered columnstore index is stored within LOB pages (1,050 total).

Next I’ll check the segment metadata for the specific partition_id:

SELECT [column_id], [segment_id], [encoding_type], [row_count], [primary_dictionary_id], [secondary_dictionary_id], [min_data_id], [max_data_id], [on_disk_size] FROM sys.[column_store_segments] WHERE [partition_id] = 72057594039762944;

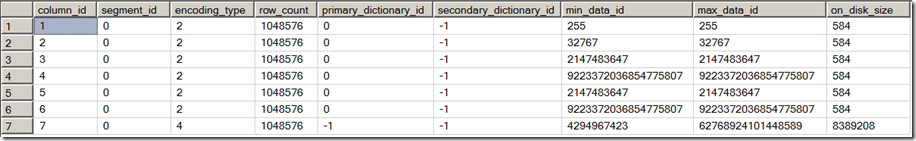

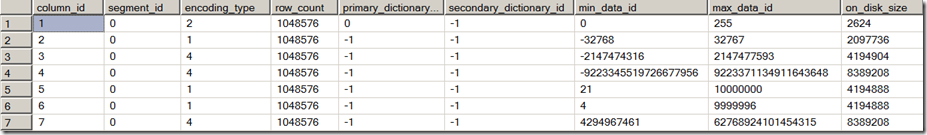

This returns the following 7 rows:

Observations on this output:

- We have 7 columns instead of 6 that are defined for the index. Recall that there is no primary key or unique column for this table – and also look at column_id 7 and its associated on_disk_size and primary_dictionary_id of “-1”.

- Our data was duplicated across each column when we populated it and notice the min_data_id and max_data_id is identical for each column as expected (except column_id 7).

Next I’ll check the dictionary metadata:

SELECT [column_id], [dictionary_id], [type], [entry_count], [on_disk_size] FROM sys.column_store_dictionaries WHERE [partition_id] = 72057594039762944;

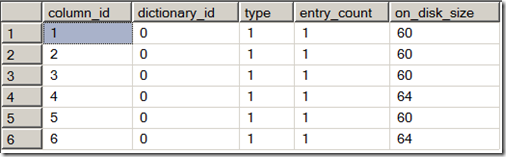

This returns the following 6 rows (no dictionary for our column_id 7):

Notice each dictionary just has one entry (since each column has just one unique value in the table across the 1,048,576 rows). Also notice the very small on_disk_size is bytes – and the dictionary type of “1” which according to BOL is a “Hash dictionary containing int values.”

Now I’ll execute a query that leverages the columnstore index using batch execution mode:

SELECT [col00], MAX([col00]) AS [MaxCol00] FROM [dbo].[FactContrived] GROUP BY [col00] OPTION (RECOMPILE); GO

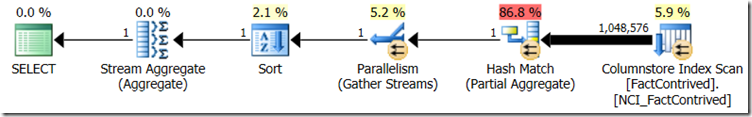

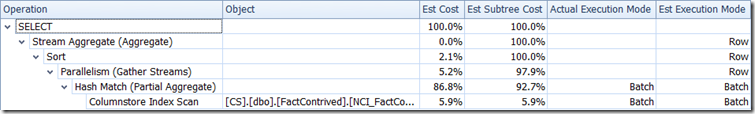

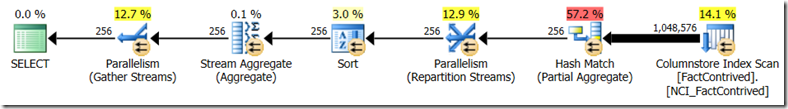

The plan (via SQL Sentry Plan Explorer) is as follows:

The Plan Tree tab shows that batch execution mode was used for the Columnstore Index Scan and the Hash Match (Partial Aggregate) operators:

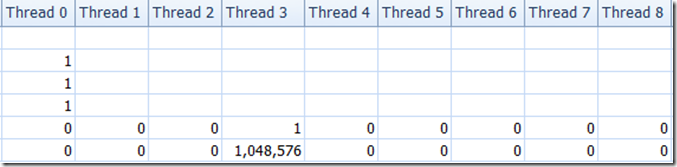

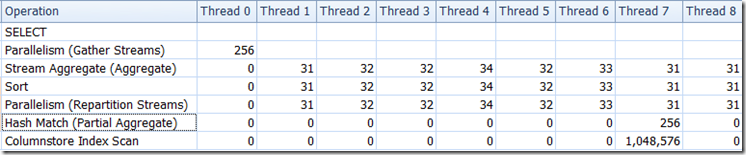

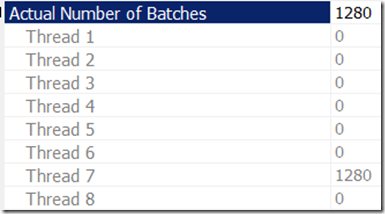

As for thread distribution, while the plan had a degree of parallelism of 8 and and one branch with 8 reserved threads, only one thread has rows associated with it:

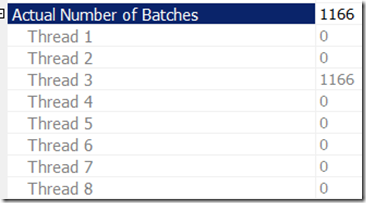

Now regarding the actual number of batches, we see the following:

So with 1,166 batches of rows across 1,048,576 rows, we’re looking at an average batch size of 899.29 rows.

Experimenting with referencing the other columns of this table, we’ll see the same number of batches each time (I’ll spare you the repeated results).

Now when columnstore indexes were first discussed a year or so ago, you would see references to batch sizes of approximately 1,000 rows. For example, the SQL Server Columnstore Index FAQ talks about how a batch “typically represents about 1000 rows of data.” Then a few months after SQL Server 2012 I recall seeing references to it being approximately 900 rows. The results of this particular example show we’re closer to that 900 row value.

Now for contrast – I went ahead and dropped the nonclustered columnstore index, truncated the table, and repopulated it with random values instead of using one unique value per column. Below is a revisiting of the metadata for the new data distributions after adding back a nonclustered columnstore index…

Partition Stats

Notice we have more LOB pages for the random data vs. the uniform data – as expected.

Segment Metadata

Notice the higher on_disk_size and the varying min/max data ranges per segment. Also notice that with the random, more unique data, there only one of the columns (our tinyint column) has a primary_dictionary.

Dictionary Metadata

And we see one dictionary entry for our tinyint column.

Revisiting our original test query, the plan shape changes somewhat (additional Parallel Gather Streams), given that we now actually have 256 rows returned based on the tinyint column:

As for row distribution across threads, we still see one thread handling the batch-mode operations and then a spread of the row-mode rows across the other operators:

Now as for the number of batches, we see the following:

So 1,280 batches over 1,048,576 rows. Averaging 819.2 rows per batch, vs. our previous test’s 899.29 rows.

There is more to explore on this subject – but that’s all for today as I’m about to jump on a plane to Chicago to help deliver IE2: Immersion Event on Performance Tuning.