I’ve been doing a lot of performance tuning work over the last couple of months and this weekend found something that’s very pervasive out in the wild. Kimberly was helping me optimize a gnarly query plan and spotted something in the code I hadn’t noticed that was causing an index scan instead of an index seek. Even though the query plan was using a covering nonclustered index, the way the code was written was causing the index to be scanned.

I’ll set up a test to show you what I mean.

First, create an example sales tracking table and populate it (took 3m45s on my laptop):

-- Create example table

CREATE TABLE [BigTableLotsOfColumns] (

[SalesID] BIGINT IDENTITY,

[SalesDate] DATETIME,

[Descr] VARCHAR (100),

[CustomerID] INT,

[ProductID] INT,

[ModifyDate] DATETIME DEFAULT NULL,

[PayDate] DATETIME DEFAULT NULL,

[Quantity] INT,

[Price] DECIMAL (6,2),

[Discount] INT DEFAULT NULL,

[WarehouseID] INT,

[PickerID] INT,

[ShipperID] INT);

GO

-- Populate table

SET NOCOUNT ON;

DECLARE @Date DATETIME;

DECLARE @Loop INT;

SET @Loop = 1;

SET @Date = '01/01/2010';

-- Insert 1500 sales per day

WHILE @Loop < 547600

BEGIN

INSERT INTO [BigTableLotsOfColumns] (

[SalesDate], [Descr], [CustomerID], [ProductID], [Quantity],

[Price], [WarehouseID], [PickerID], [ShipperID])

VALUES (

@Date,

'A nice order from someone',

CONVERT (INT, (RAND () * 1000)),

CONVERT (INT, (RAND () * 1000)),

CONVERT (INT, (RAND () * 10)),

ROUND (RAND () * 1000, 2),

1,

CONVERT (INT, (RAND () * 4)),

CONVERT (INT, (RAND () * 4)));

IF @Loop % 1500 = 0 SET @Date = @Date + 1;

SET @Loop = @Loop + 1;

END

GO

-- And a clustered index

CREATE CLUSTERED INDEX [IX_BigTableLotsOfColumns_Clustered] ON [BigTableLotsOfColumns] ([SalesID]);

GO

Now I’ve got a simple stored procedure to find the total sales amount for any particular date.

CREATE PROCEDURE [TotalSalesForDate] (@TargetDate DATETIME)

AS

SELECT

SUM ([Quantity] * [Price])

FROM

[BigTableLotsOfColumns]

WHERE DATEDIFF (DAY, [SalesDate], @TargetDate) = 0

OPTION (MAXDOP 1);

GO

The idea is that the stored procedure will pick up all sales for a particular date, no matter what time of that day the sale occurred. I’ve got OPTION (MAXDOP 1) to simulate a system that’s been tuned for OLTP queries (and to make the query plans fit better in my blog post :-)

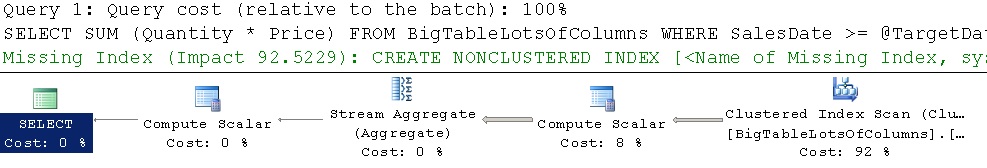

If I run the stored procedure for, say, December 26th 2010, the query plan it uses is as follows:

EXEC TotalSalesForDate '12/26/2010'; GO

And it uses the following CPU and I/O (you can get these by using SET STATISTICS IO ON and SET STATISTICS TIME ON, but be careful – the can generate lots of output for big procs):

Table 'BigTableLotsOfColumns'. Scan count 1, logical reads 7229, physical reads 0, ... SQL Server Execution Times: CPU time = 125 ms, elapsed time = 186 ms.

I ran the proc twice; once to get it to compile, and the second time to factor out the compile time.

Pretty obvious that I can speed this up by creating a covering nonclustered index, and in fact you can see the query processor telling me that with the missing index suggestion. I create the covering nonclustered index:

CREATE NONCLUSTERED INDEX [IX_BigTableLotsOfColumns_Date] ON

[BigTableLotsOfColumns] ([SalesDate]) INCLUDE ([Quantity], [Price]);

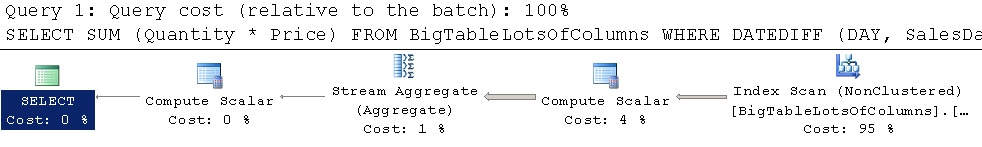

Now when I run the proc, I get a better query plan:

And the following CPU and IO:

Table 'BigTableLotsOfColumns'. Scan count 1, logical reads 2113, physical reads 0, ... SQL Server Execution Times: CPU time = 78 ms, elapsed time = 115 ms.

Much better, as I’d expect. But look at how the nonclustered index is being used: it’s being scanned when instead there should be a seek. It’s still doing more than 2,000 logical reads because it’s scanning the whole index.

The reason for this is that the DATEDIFF in the proc is forcing every SalesDate column value to be evaluated, hence the scan. If I know that the proc only ever is called with a date without a time portion, I can do some rearranging of the logic.

The updated proc is below. Notice that there’s no reason to scan any more as the WHERE clause doesn’t require every column value to passed through the DATEDIFF:

CREATE PROCEDURE [TotalSalesForDate] (@TargetDate DATETIME)

AS

SELECT

SUM ([Quantity] * [Price])

FROM

[BigTableLotsOfColumns]

WHERE [SalesDate] >= @TargetDate AND [SalesDate] < DATEADD (DAY, 1, @TargetDate)

OPTION (MAXDOP 1);

GO

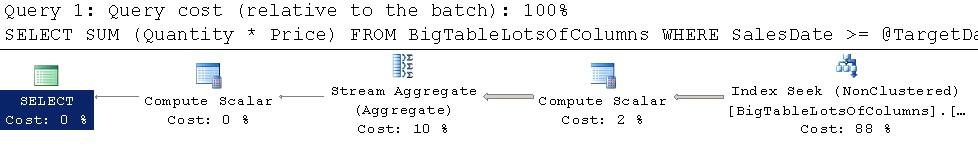

And when I run it I get the best plan:

And the following CPU and I/O:

Table 'BigTableLotsOfColumns'. Scan count 1, logical reads 10, physical reads 0, read-ahead reads 0, ... SQL Server Execution Times: CPU time = 0 ms, elapsed time = 64 ms.

Wow! 200x fewer logical I/Os and not even enough CPU time to register as 1ms.

Summary: although you may have nonclustered indexes that are being used, make sure that they’re being used for seeks as you expect. For small queries that are executed hundreds of times a second, the difference between seeking and scanning can be huge in overall performance.

17 thoughts on “Adventures in query tuning: non-seekable WHERE clause expressions”

Great example. I run into this almost exact situation all the time. Thanks much for the easy fix.

BTW: It only took 1m50s to load the table on my laptop. (And that’s running it with the index already on the table) I LOVE my SSD. That’s also one of my favorite methods of query tuning ;-)

This is my favorite type of slowness to fix, becuase it’s a dead-simple solution that brings /huge/ performance gains in a lot of instances. Really makes one feel like they know what they’re doing ;-)

Yes, isolating a column to one side of the expression can make a huge difference in the performance of a query. When a column is not isolated (and just for the record left/right is irrelevant) then SQL Server has to perform the operation row-by-row which doesn’t allow the index to be used for seeking. If a covering index does not exist (even if the expression is VERY selective) then it’s likely that SQL Server will do a table scan. In this case the database had a covering index and the performance was better… but the problem was really in the expression.

My general course of action is NOT to cover when it’s highly selective – any index will do! But, if you’re covering and you’re expecting the query to be seekable, make sure you have a good reason for why. Try rewriting those expressions to isolate the column.

Have fun!!

kt

I just saw this issue Friday! It’s basic advice to be honest, but like you said, it’s out there in the wild. Thanks for getting the word out.

Also, you need to understand not all functions are deterministic like getdate() that evaluates to a constant at execution time.

This is similar to one of my standard interview questions BTW :)

Ah yes, SARGable WHERE clauses……same here, changing this

WHERE YEAR(payment_dt) = YEAR(GETDATE())

and MONTH(payment_dt) = MONTH(GETDATE())

to this

WHERE payment_dt >= DATEADD(mm, DATEDIFF(mm, 0, GETDATE())+0, 0)

and payment_dt < DATEADD(mm, DATEDIFF(mm, 0, GETDATE())+1, 0)

made the query run time go from over 24 hours to 26 seconds!!!!

See here http://blogs.lessthandot.com/index.php/DataMgmt/DataDesign/only-in-a-database-can-you-get-1000-impr

Another one is changing

LEFT(Column,1) = ‘S’

to

Column LIKE ‘S%’

First one is not SARGable

As a matter of fact, "you’re getting a scan because your WHERE is non-SARGable" is the second most common problem I observe. (I then explain what I mean, of course. :-))

The most common remains, of course, "you didn’t create an index".

Only at third place does the actual table/query design come in.

Indeed- that’s why I blogged about it when I saw it have such a huge effect on this customer’s proc.

Thanks for a clear explanation – this is something that all new DBAs and Developers should be shown! For instance, when you’re new it’s not blindingly obvious that a Seek is better than a Scan, as both indicate that SQL is having to look through the index. I haver a number of queries which search on an indexed order date field and I think I need to go and check what they’re doing :-)

It’s a great explanation. It mirrors something else I read recently. But I looked at the original query for the proc and thought, "Who would use a datediff for this sort of thing?"

And now I’m thinking about it…Yeah. My devs would do this sort of thing.

Hi Paul,

thanks for a very nice example!

Still (hm, sorry, it’s a kind of a stupid question..): how do I get the message/hint (the green one :-) ) saying, that the index is missing (in your example: missing index (Impact..): Create nonclustered index….)?

Thanks for your help

Andreas

You need to be on 2008 for that to happen.

Great stuff. Another good one I found and fixed the other night:

WHERE CAST(Col1 as BIGINT) = @Val

Immediate scan. Every time.

This one is much more difficult to find, but has the same problem. Check ‘table scan’ execution plan on select * statement:

create table t(

id varchar(4),

name varchar(50)

)

set nocount on

declare @i int

set @i = 1

while @i < 1000

begin

insert into t values (@i, ‘test’)

set @i = @i + 1

end

create index idx01 on t(id)

select * from t where id = 10

drop table t

Yeah – implicit conversions are hard to spot without a script like the one Jonathan Kehayias has on his blog

Some stuff of mine on sargability:

Video at: http://sqlbits.com/Sessions/Event7/Understanding_SARGability_to_make_your_queries_run_faster

One of several blog posts at: http://msmvps.com/blogs/robfarley/archive/2010/01/22/sargable-functions-in-sql-server.aspx

Connect Item at: http://connect.microsoft.com/SQLServer/feedback/details/526431/make-more-functions-sargable

Rob

Great Help Paul !!!! Thanks