I was recently working on a client system that has problems running DBCC CHECKDB normally due to the concurrent volume of data changes occurring against their 2TB OLTP database. The challenge with running DBCC CHECKDB on this system is that the database snapshot (which DBCC CHECKDB creates) quickly exceeds the sparse file limits in Windows and their check fails with error 665.

To work around this I wrote a custom procedure for them that breaks down the DBCC CHECKDB checks into individual checks and spreads the process over a period of roughly two weeks, within a 2-3 hour window each morning when the system has the least amount of other activity.

While I was working on changes to this custom script I noticed that DBCC CHECKTABLE was taking a ~96GB execution memory grant during its execution. The server is a Dell R720 with 2 x Intel E5-2690 processors (2.9GHz 8 cores and HT is enabled so 16 logical cores per socket) and 512GB of RAM. I mentioned this to Paul and it became something that we wanted to investigate further.

We have a similar R720 with a multiple Fusion-IO PCI-X SSDs installed in it as a test server, but it only has 64GB RAM. I had already setup a 500GB test database for Paul’s DBCC CHECKDB performance tests, using AdventureWorks and my scripts to enlarge it by adding new objects and creating a number of enlarged versions of the SalesOrder* tables in SQL Server 2012 SP1 with CU3, so when Paul finished his tests, I decided to take a deeper look at how DBCC CHECKDB uses execution memory in SQL Server.

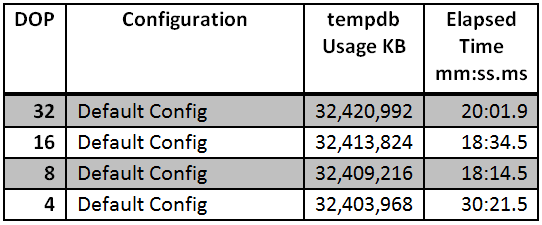

The SQL instance was configured with ‘max server memory’ at 54000 which leaves roughly 4GB of available memory on the server at all times. I then wrote a test harness to perform DBCC CHECKDB with ‘max degree of parallelism’ set a 32, 16, 8, and 4 that logged the start and end time of each test, the tempdb usage for each test, and the deltas for wait, latch, and spinlock stats for the each test. Under the default configuration, DBCC CHECKDB acquired a 10GB memory grant from the instance. I ran the test harness through four iterations of tests and had the following averages:

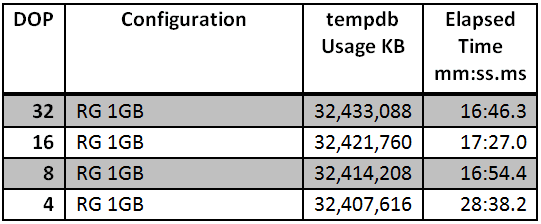

I then configured Resource Governor on the instance and created a Resource Pool that had MAX_MEMORY set at 10% and a Workload Group in the pool with REQUEST_MAX_MEMORY_GRANT_PERCENT set at 25% which yields a ~1GB maximum execution grant size for sessions assigned to the group.

CREATE RESOURCE POOL [Maint]

WITH (min_cpu_percent=0,

max_cpu_percent=100,

min_memory_percent=0,

max_memory_percent=10,

cap_cpu_percent=100,

AFFINITY SCHEDULER = AUTO);

GO

CREATE WORKLOAD GROUP [wg_Maint]

WITH (group_max_requests=0,

importance=Medium,

request_max_cpu_time_sec=0,

request_max_memory_grant_percent=25,

request_memory_grant_timeout_sec=0,

max_dop=0)

USING [Maint];

GO

Next I created a dbcc_user login on the server, specifically for performing DBCC CHECKDB, added it to the sysadmin server role, and created a classifier function to place connections from this login into the wg_Maint group for testing the effects of reducing the memory grant for DBCC CHECKDB.

USE [master];

GO

CREATE LOGIN [dbcc_user] WITH PASSWORD=N'R3@lly$tr0ngP@$$w0rd!', DEFAULT_DATABASE=[master], CHECK_EXPIRATION=OFF, CHECK_POLICY=OFF;

GO

ALTER SERVER ROLE [sysadmin] ADD MEMBER [dbcc_user];

GO

--- Create the classifier function

CREATE FUNCTION fnRGClassifier()

RETURNS SYSNAME

WITH SCHEMABINDING

AS

BEGIN

DECLARE @group SYSNAME;

IF(SUSER_NAME() = 'dbcc_user')

BEGIN

SET @group = N'wg_Maint';

END

--- Use the default workload group if there is no match on the lookup.

ELSE

BEGIN

SET @group = N'default';

END

RETURN @group;

END

GO

--- Reconfigure the Resource Governor to use the new function

ALTER RESOURCE GOVERNOR with (CLASSIFIER_FUNCTION = dbo.fnRGClassifier);

ALTER RESOURCE GOVERNOR RECONFIGURE;

GO

Next I changed the test harness to login as the dbcc_user and reran the series of tests an additional four times with the following averages for the results of each test:

Reviewing the data, you will see that the elapsed time decreased for all of the tests, while the tempdb usage did not increase significantly as a result of running with only 1GB of execution memory granted. This reduction in execution memory allowed the remaining 9GB to be used by the data cache for database pages during the tests, which was confirmed by capturing the Memory Manager and Buffer Manager performance counters for the instance during the testing.

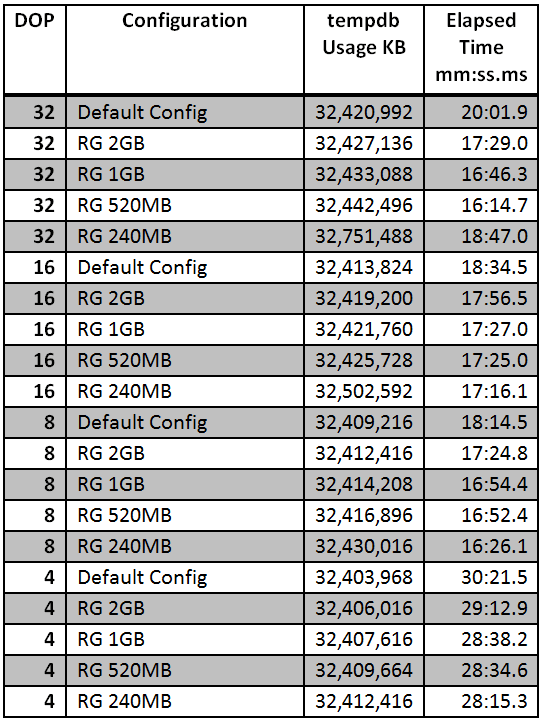

I then decided to see what different memory effects had and ran the tests at 2GB, 520MB and 240MB by changing the Workload Group REQUEST_MAX_MEMORY_GRANT_PERCENT to values of 50%, 13%, and 6% respectively. Using the same series of tests for additional times per configuration. The following averages were observed after all of the tests were completed:

From the results, the only time that tempdb usage increased, was for the 240MB execution grant size and then only for the DOP 32 and DOP 16 tests. The total increase in tempdb usage was roughly 300MB in the worst case, which is considerably smaller than the gains in data cache space savings by reducing the execution memory grant size for DBCC CHECKDB. The fastest average execution time for this specific database was obtained by using 520MB for all of the levels of DOP that were tested.

After completing this testing, Paul and I spent an hour on a call reviewing the spinlock stats, the wait stats, and the latch stats for each of the tests to try to identify why performance improved by reducing the execution memory grant with Resource Governor like this. The only thing that pointed to an explanation for the improvement in performance is the higher amount of memory available for database pages as a result of reducing the execution memory grant.

Additionally, using Extended Events, I attempted to collect the query_post_execution_showplan events for the executions under the default configuration and under the constrained Resource Governor configuration. It only received a few parts of the actual execution plan information, but the plans returned were identical with the exception of the memory grant information for RequestedMemory, which matched the limitations that were in place for the Resource Governor tests.

The most interesting item from the execution plan however, was the value for the desired memory, which was 45,182,976,776 KB, or roughly 43TB. I don’t have an answer, yet, to why this occurs, but I hope to be able to figure it out at some point. It appears that the costing model for DBCC CHECKDB has limitations that weren’t apparent years ago when servers didn’t have the levels of memory available in today’s servers. Even doing a DBCC CHECKDB against the master database, which is 5MB in size on this instance, requests a ~2GB memory grant for execution, so it really seems to point to an excessive costing algorithm for DBCC CHECKDB inside of SQL Server.

(From Paul: the way that costing is performed for DBCC CHECKDB is based on the expected number of bits of information DBCC CHECKDB will create in the rowset it gives to the query processor to sort, hash, and give back to DBCC again. This cardinality estimation method has been the same since SQL Server 2000.)

In summary, I’ll be reviewing our clients’ systems and implementing the Resource Governor method I described above on systems where it will make a difference to performance by not taking so much of the buffer pool. I suggest you consider doing this too.

24 thoughts on “DBCC CHECKDB Execution Memory Grants – Not Quite What You Expect”

everything about this post is awesome. even though i have never had (and likely will never see) the issue which prompted it, i really like how it was noticing one detail (“DBCC CHECKTABLE was taking a ~96GB execution memory grant during its execution”) during the tweaking of the workaround which led to both a.) eventual knowledge of the real problem and 2.) a kickass solution (2-3 weeks -> <20 minutes!). i love your description of getting there, and finally seeing a valid use-case for resource pools/workload groups to solve the hell out of an actual, real-world problem (not saying there aren't any, i've just never seen one).

finally, the sheer thrill of the process came across loud and clear. i got a vicarious Eureka! out of it, anyway. thanks for sharing the experience, analysis, and knowledge.

Very interesting.

Thanks for the great article. I’ve just run a DBCC CHECKTABLE on my system and see the Granted Workspace Memory (KB) counter hit 8,493,016 (so that’s about 8.5GB). The Buffer Manager reserved pages counter hits 1,061,852 so that equates to 8.5GB as well. Along with the OLEDB waits that occur during CHECKDB, I’m adding these two perfmon counters to my daily collection. It’s great that we can now account for the rise. I’ll be implementing the resource governor approach to one of my 24×7 OLTP systems to reduce the memory footprint. I’ll look forward to any follow-up content you may have for this great work.

Fantastic. Thanks for this. I inherited a huge database that’s had to checkdb performed because of size and limited window. How did you get the default configuration results? and can you provide the script?

Well, thanks for blogging about this as the Customer Environment’s I am supporting currently sport 1TB of physical memory and we ran a quick test DBCC CheckTable against a 15GB Table and low and behold approximately 110GB memory grant acquired so who knows what would be case when running against the 150-300GB tables. Very useful and now implementing resource governor as you documented. Thanks again for the keen eyes spotting this.

I recently ran into the same issue when we added 256GB to an existing 256GB. I was able to successfully implement this user and resource governor and test this in a job. The problem is that when you make this user a sysadmin, and then make it the owner of the job… The job runs under the context of the agent account. Do you see any easy way around this? Thanks in advance.

Jared

Jonathan,

Thanks for the great article and methodical testing approach. (That must have been time-consuming).

A couple of questions:

1) You mentioned some #’s for “Desired” and “Granted” memory (whatever methods you used to see those), but did you happen to notice what the “used_memory_kb” and “max_used_memory_kb” fields in the sys.dm_exec_query_memory_grants DMV were? I have seen big memory grants for CHECKDB before but never bothered to see how much it was actually using (assuming that DMV is relevant for memory grants given to CHECKDB at all). I’ll have to take a look next time I’m able. Your test results seem to indicate that we would see “max_used_memory_kb” be just a fraction of the overall grant.

2) I’m a bit confused by CHECKDB’s use of both memory and TempDB. For a normal query, e.g. a sort, we won’t get TempDB utilization for the sort at all unless memory is exhausted and the query spills. However, your above #’s seem to say that CHECKDB might get a massive memory grant (more than it needs), but it will still use TempDB space. So this doesn’t sound like a “spill” to me; does CHECKDB use those 2 resources for 2 different things? Curious to know more…

Thanks!

Aaron

Hey Aaron,

For question 1, the actual memory usage was only a small portion of the actual grant size. For question 2, you should read Paul’s blog post http://www.sqlskills.com/blogs/paul/how-does-dbcc-checkdb-with-estimateonly-work/ which talks about how CHECKDB uses tempdb for fact storage and aggregation during analysis. It’s not just simply sort or hash worktables being used as a part of the checking, so it is different than a simple query with a sort or hash memory grant.

Since writing this blog post there have been a number of additional validations of the findings in this blog post. The memory grant calculations for DBCC CHECKDB are significantly larger than they should be and minimizing them with Resource Governor results in better performance for large memory systems.

Jon,

Thanks for responding! The TempDB utilization for CHECKDB make sense to me… generating facts on a large DB, even in batches, will clearly create a large TempDB table.

My original confusion was why CHECKDB would also need a memory grant on top of the TempDB utilization (since they are 2 separate resources, and the fact data would go into the temp table). A little more reading leads me to think that the memory grant is needed to satisfy the Blob Eater query that Paul talks about here (http://www.sqlskills.com/blogs/paul/checkdb-internals-what-is-the-blobeater/). The query groups, sorts, and has a memory address reference, so obviously it needs to be given memory to handle these operations.

Anyways, whatever the usage(s) for memory, it sounds like RG is the documented way that we have to address the problem. I’ll look into how feasible that would be for our environments.

thanks,

Aaron

Thanks!

Thanks so much for this post. We were seeing a big increase in the Wait Stats for our Server during our weekly DBCCs and I suspect it was due to the lower available memory. Since we Restore our DB from COPY_ONLY to a secondary server on a weekly basis I thought we could do the Logical checks there (something I concluded from reading Aaron’s article where he showed logical checks taking 90% of the Checkdb time).

My questions are

1- Is it still worth splitting it up?

2- Will the Physical only checks still be so memory hungry (suggesting I still need to implement an RG pool just for that and

3- Have you since found a way for the classifier to work say by something like the Sql Text in the batch instead of the UserID?

Thanks Again

…Ray

Hey Ray,

Whether it is worth splitting up depends on a lot of factors that I don’t know about your database and I’m not sure about PHYSICAL_ONLY memory grants off hand, I’d have to go test it and see what it requests. The classifier function is limited to a subset of system functions (http://technet.microsoft.com/en-us/library/bb933865(v=sql.105).aspx) and is fired during the login attempt, so things like sql statement text are not yet available, the request hasn’t been sent to SQL Server during the login processing where the classifier function is actually being evaluated. So you have to build it on things that are available during login processing like the app name, login name, connection property, etc.

Regarding your comment ‘The memory grant calculations for DBCC CHECKDB are significantly larger than they should be’ – this would indicate a design flaw/bug. Rather than have the industry work around this problem, wouldn’t it be better to have it fixed at the source? If Microsoft Premier Support found an implementation of an application like this they would advise the application vendor to fix the problem. Surely this is not ‘by design’.

Hey Brett,

I’ve raised the issue with Microsoft, and so have others that I’ve emailed with since this post went live. This is simply showing how to work around the issue, and I don’t know that there is a fix forthcoming to the memory grant issue, but if you feel it should be fixed, you can also open a case with Microsoft for your specific request.

We had the problem that our weekly dbcc checkdb was causing the Page Life Expectancy to drop to zero.

I implemented the resource governor configuration for running dbcc checkdb and it had a serious side effect.

We monitor our production systems with foglight and it showed that during the week the PLE never went over 20K seconds. I removed the resource governor the PLE has continued to rise since the change and is now over 275K seconds.

Any explanations?

Hey Yitzchak,

I would suspect that something was wrong with the way your RG configuration was setup, but without being able to see the configuration and performance information from the system I can’t say for sure. If you’d like help with looking at this, email request@sqlskills.com and we could setup a consulting engagement to help you further. I can tell you that I’ve configured this on many systems, and many other people have also configured this based on comments and Twitter conversations, and haven’t seen the behavior that you are describing above.

Jon,

I know you guys go over this in the IE courses and the blog post is great. I just got around to really implementing this in my environment and I’m knocking lots of time off the integrity checks we do. Just wanted to say thanks again.

-Ryan

Love this article! Have had to restrict max memory grant for lots of queries, and planning to do it for DBCC CHECKDB when its underperforming also.

But based on some work I’m doing now, I’ve got to play Columbo 🙂

“After completing this testing, Paul and I spent an hour on a call reviewing the spinlock stats, the wait stats, and the latch stats for each of the tests to try to identify why performance improved by reducing the execution memory grant with Resource Governor like this. The only thing that pointed to an explanation for the improvement in performance is the higher amount of memory available for database pages as a result of reducing the execution memory grant.”

Yeah. That sounds pretty good. But just one more thing… doesn’t checkdb disfavour its reads , minimizing buffer pool effect? At the same time that prevents a “polluted” buffer pool from adversely effecting other query performance, I’d expect roughly the same behavior for checkdb whether 7 GB or 9 GB of database cache. Maybe the allocation pages read by checkdb are not disfavored? That could definitely help, but I’d still expect minimal difference in the number of allocation pages retained in a 7 GB vs 9 GB database cache. Could be verifiedby comparing the physical read bytes against the database files in the test runs – if larger bpool database cache is the relevant difference, fewer bytes should be read from the database files as they were accessed from cache.

Ever larger tables and indexes mean ever larger aggregate size of checkdb batches. The amounts of RAM given to SQL Server keep growing ever larger also. I wonder if at some point a regression to the issue addressed by traceflag 2566 in kb945770 is triggered (http://support.microsoft.com/kb/945770). Since I’ll need to enable trace flag 2566 in some cases to address kb2888996 (http://support.microsoft.com/kb/2888996) anyways, I’ll give this a shot next time I find an unexpectedly and unexplainedly crawling checkdb.

Chances are you no longer need TF2566 :

kb2888996 (http://support.microsoft.com/kb/2888996) now states:

This issue was first fixed in the following cumulative update of SQL Server.

Cumulative Update 1 for SQL Server 2014

Cumulative Update 8 for SQL Server 2012 SP1

Cumulative Update 10 for SQL Server 2008 R2 SP2

Maybe worth the test to apply the needed CU

I am having with DBCC running slow in my SQL Server 2016 environment, 12 procs 64 gb memory on Windows Server 2012 R2 – the database in question is @ 86gb, in my SQL Server 2012 environment DBCC runs in 4 minutes, same database in SQL Server 2016 it runs in 12 minutes. Opened call with MSFT and have been on this for 1 month now, we have reinstalled and today built a brand new server and still nothing. I applied this workaround and it finished in 10 minutes. This tells me that DBCC right now is running @ 200/300 % , which I know it can’t be. Thoughts and ideas greatly appreciated.

environment is virtual on VMWare

There are dozens of other things that could play into CHECKDB performance in that environment. Without being able to fully review the two systems I would only be guessing. Being a VM on VMware it could be host hardware differences, overcommitment of one host vs the other, different settings for the VM’s, or any of a number of items that affect performance.

Great article Jonathan!

I have the same issues on a couple of the 1TB RAM servers (both SQL 2008R2 and SQL 2012) where the DBCC’s end up taking up too much buffer pool. I am testing your resource governor approach to limit the amount of memory that DBCC CHECKDB takes. On my test server (SQL 2008R2) with only 16GB of RAM (8GB allocated to SQL), I ran some queries to completely fill the buffer pool with data from one database (6.7GB verified by looking at sys.dm_os_buffer_descriptors), then I ran a DBCC CHECKDB against that database. While the DBCC was running, SQL kept the 6.7GB of this database’s pages in the buffer pool and the granted memory for the DBCC was 1.5MB.

But, when the DBCC completed, the buffer pool usage for this database was reduced to 59MB.

I created all the governor objects, re-filled the buffer pool with pages from the test database and verified that the governor did indeed reduce the amount of granted memory for the DBCC running as my test user – from the original 1.5GB down to 60MB, and it did the exact same thing – buffer pool mostly cleared when the dbcc completed.

My last test was to fill the buffer pool fairly equally with pages from three databases, with my test database using 1.5GB of the buffer pool. I reran the DBCC CHECKDB and verified that my session was using the restricted resource governor pool and verified that it’s granted memory was 60MB. While the CHECKDB was running, my test database went from using 1.5GB of buffer pool to nearly 6GB, then again, when the the DBCC was complete, most of that buffer pool was released.

Maybe I’m just not understanding what the resource governor fix was supposed to do – I was expecting (and hoping) to restrict the amount of buffer pool that checkdb would use so that when it was done, the database would have retained most of its pages in the buffer pool.

I haven’t tested this yet on a test SQL2012 or later server – maybe it’s a “feature” of SQL 2008R2.

Any insights you can share?

Resource Governor only controls the query execution memory grant, not the amount of buffer pool space a check can use from page reading. What you’re seeing is that DBCC CHECKDB doesn’t do traditional buffer pool disfavoring, so the page reads for the database snapshot are causing other pages to be pushed out of the buffer pool. If you look at the database IDs as well as the database name, you’ll see that the pages are being used by the database snapshot, then the snapshot is dropped and the buffer pool cleared out.

Perfect – thanks for the explanation!