Properly configuring SQL Server is an important factor in having a stable environment that performs well. While there is a lot of good guidance available for how to best configure SQL Server, the specifics of any given implementation is a very big “It Depends…” and one of the things that we do in our Immersion Events for SQL Server is teach you why it depends so that you make the appropriate decisions about your environments configuration. Last week, a Microsoft employee published on the MSDN Blogs site a calculator to determine where you should set ‘max server memory’ (http://blogs.msdn.com/b/sqlsakthi/archive/2012/05/19/cool-now-we-have-a-calculator-for-finding-out-a-max-server-memory-value.aspx) for your instance of SQL Server. While the intent was good, the outcome is incorrect and will most likely lead to problems if implemented.

Note: There have been edits to the above mentioned post since this was first published that state it is not an official Microsoft recommendation and that it is targeted at 100 level users. However, if the goal is to keep 100 level admins from having problems, a much more conservative set of values would have been used for where 'max server memory' should have been set to ensure that they didn't have memory pressure issues for their configuration. Read the rest of this post to see why I say this.

How to appropriately configure ‘max server memory’ has been something I’ve blogged and talked about repeatedly over the last few years because it is important to proper server performance and stability on 64-bit platforms. My most recent post, How much memory does my SQL Server actually need?, offered two methods of being able to try and calculate a good value for this based on the system configuration, with one being a bit more reserved than the other. My general recommendation is to use the calculation from my book, Troubleshooting SQL Server: A Guide for the Accidental DBA, which is to reserve 1 GB of RAM for the OS, 1 GB for each 4 GB of RAM installed from 4–16 GB, and then 1 GB for every 8 GB RAM installed above 16 GB RAM. This isn’t an overly technical calculation but it has worked well and is generally going to configure ‘max server memory’ low enough that the server will be stable and have reliable performance. However, this doesn’t necessarily account for everything that might be going on in a specific environment, for example if you have a FusionIO card installed, there is a memory requirement associated with the drivers for the SSD and the memory requirements can be quite significant depending on the size of your SSD and the block size you are using (http://www.sqlservercentral.com/articles/SSD+Disks/69693/). HBA drivers can also be large memory consumers in the environment, and these requirements could result in memory pressure if you don’t properly account for them in memory configuration.

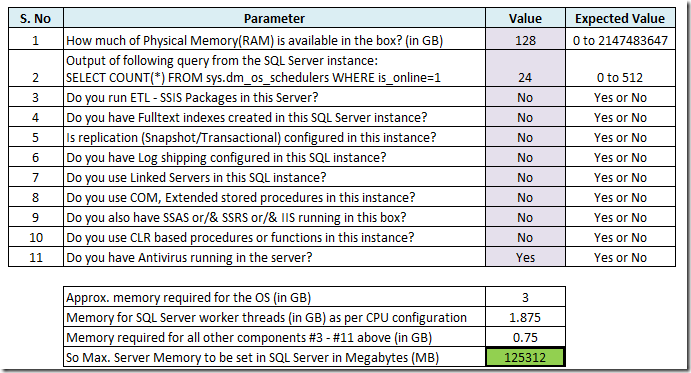

There’s lots of bad advice out there about how to set ‘max server memory’ for a given instance, so you might be wondering why would I point this one out specifically? It’s really simple, because this person represents Microsoft, and that means people immediately follow the recommendations even when they are wrong. If we take a look at the the online calculator that was published on this blog and use an example SQL Server with 24 cores, and 128GB RAM installed with nothing but corporate anti-virus running on it per the online calculator, the recommended ‘max server memory’ value is 122.375GB, which is going to be too high from practical experience working with dedicated SQL Servers the last 8 years.

If there is anyone out there running a SQL Server with 128GB RAM installed and a ‘max server memory’ value this high, I can almost guarantee that you are hitting memory pressure issues in the Windows OS, and a quick peak at your ring buffer memory notifications in dm_os_ring_buffers would prove it.

The blog post that introduced the tool doesn’t provide any information specifically about how to tell if you’ve set ‘max server memory’ too high, and what signs to look for to consider lowering the value that has been set, it only covers items that tell you SQL Server is in memory pressure and could use more memory. As I mentioned in my Lock Pages in Memory article, SQL Server has a built-in component of the SQLOS, the Resource Monitor, that monitors the QueryMemoryResourceNotification Windows Server API to get status about the OS level memory availability on the server. If the Windows OS is under memory pressure, it will set a low memory notification that the Resource Monitor thread will detect and force the external clock hands on the caches internally to begin sweeps of the caches to cleanup and reduce memory usage allowing the process to free memory back to the OS. You can see this happening on a server where ‘max server memory’ has been set too high by running the following query:

–System Memory Usage

SELECT

EventTime,

record.value('(/Record/ResourceMonitor/Notification)[1]', 'varchar(max)') as [Type],

record.value('(/Record/MemoryRecord/AvailablePhysicalMemory)[1]', 'bigint') AS [Avail Phys Mem, Kb],

record.value('(/Record/MemoryRecord/AvailableVirtualAddressSpace)[1]', 'bigint') AS [Avail VAS, Kb]

FROM (

SELECT

DATEADD (ss, (-1 * ((cpu_ticks / CONVERT (float, ( cpu_ticks / ms_ticks ))) – [timestamp])/1000), GETDATE()) AS EventTime,

CONVERT (xml, record) AS record

FROM sys.dm_os_ring_buffers

CROSS JOIN sys.dm_os_sys_info

WHERE ring_buffer_type = 'RING_BUFFER_RESOURCE_MONITOR') AS tab

ORDER BY EventTime DESC

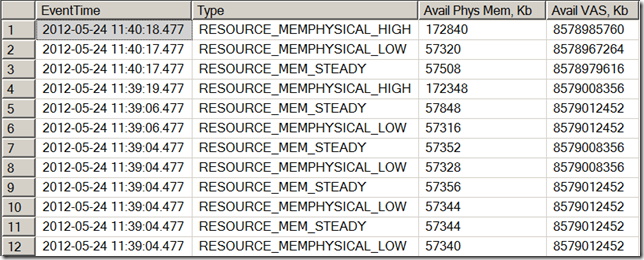

The output of the above script on a server that has been set incorrectly can be seen below:

The SQLOS responded to the low memory condition in the OS by reducing it’s memory usage, and then the Windows OS changed it’s notification from steady, too high memory, which signals the SQLOS that it can once again grow it’s memory usage, which resulted in the OS setting a low memory notification once again, and the process repeats. If you follow the recommended settings from the calculator that was posted, this exact scenario will almost certainly result.

The take away from this post, don't blindly follow information or use tools that have been posted online, even when they are from someone at Microsoft. As Paul says, there is nothing black and white with SQL Server, everything is an "It Depends…" (except for AutoShrink, that should NEVER be turned on!). When you are configuring a SQL Server initially, it is best to start off with a lower 'max server memory' and then monitor the server over time to see it's memory usage trends. If the server has available memory, the Available MBytes counter will show the extra gigabytes of memory that could be used and you can slowly adjust up based on what is available, leaving at least 150-300MB (I specify a range because it depends, but I stick to the high side of 300MB+ available at all times) available to the OS at all times.

EDIT:

After posting this, some people asked me by DM on twitter and by email, about the MAXDOP calculator that was on the same blog site (http://blogs.msdn.com/b/sqlsakthi/p/maxdop-calculator-sqlserver.aspx). I intentionally didn't blog about this one originally, because it doesn't make the same type of problemattic recommendations as the 'max server memory' calculator. If you plug in your processor information, it will make recommendations that have been in the support KB's for years. However, newer processor architectures don't necessarily fit the recommendations that were made back in 2009 when the referenced KB article was written, and some of the changes recommended by the calculator have absolutely zero effect on the server. For example, if you plug in 0 NUMA nodes and 4 CPU cores, it recommends MAXDOP of 4, that is what the 0 essentially correlates to anyway, so what's the purpose in changing the default from 0? It also doesn't mention or take into account workload as a part of its recommendations and a MAXDOP of 8 on a 32 way server that is doing 1-2 data warehouse queries an hour that chunk through large amounts of data is a waste of the remaining resources on the server. Just like 'max server memory' the correct value for MAXDOP is environment and workload specific.

26 thoughts on “Wow… An online calculator to misconfigure your SQL Server memory!”

I think the most important consideration when configuring MaxServerMemory is that it cannot be set & forgotten, it requires review. I agree your algorithm is better than the one published on the MS blog but I think your following comments about other environmental considerations (SSDs, HBAs & also ETL, other SQL instances etc) are more important than the algorithm itself. External consumers are a big part of the picture & given that their memory consumption can change (sometimes wildly) the MaxServerMemory / MinServerMemory settings should be reviewed at least occassionally.

Jon – Great post. I think one thing that people consistently forget is that SQL Server is a multi-purpose RDBMS and as such – can do absolutely anything. This is the power of SQL Server. The problem is that with unlimited possibilities come a myriad of options/settings/configurations and therefore [unfortunately] confusion. All too often people are looking for a single simple solution and sometimes it exists (e.g. autoshrink should NEVER be turned on) but for many things the correct answer is IT DEPENDS. This is not an escape; it’s only the beginning. Articles like this are around to help people resolve [b]what it depends ON[/b]!

Nicely done. Great references.

Cheers,

kt

Hey Greg,

I completely agree that this stuff is not a set it and forget it configuration. The calculation I wrote in my book was something I fought against providing with SimpleTalk because there is no one size fits all answer to max server memory, but after discussing things with Gail and Tony, that worked out to be the calculation that we felt would be most likely to fit a majority of environments and be restricted low enough to maximize memory usage initially without the OS being under memory pressure. However, considerations like SSDs, HBAs, etc, aren’t generally going to fit into that calculation, so my goal in the book was to teach the underlying importance of sizing the environment memory usage and basing max server memory on that. The old teach a man to fish and you’ll feed him a lifetime addage.

Great post. You put more effort into this than the original author put into the calculator. I left comments on his post when it came out, and he didn’t bother to approve my comments – go figure. Revisionist history.

Looks like the problem is with HighMemoryResourceNotification.

HighMemoryResourceNotification is signaled when there is only 170MB of available RAM (out of 128 GB)? That is strange. Hope MemoryThreshold registry is not tweaked. I will look at this behavior and see why we signal HighMemoryResourceNotification sometimes next week.

Hey Karthick,

This is not strange at all, it is how the Windows Memory Management has worked for a long time. From the Windows Internals book by David Solomon and Mark Russinovich, "The default level of available memory that signals a low-memory-resource notification event is approximately 32 MB per 4 GB, to a maximum of 64 MB. The default level that signals a high-memory-resource notification event is three times the default low-memory value." There are registry keys to change these values, but there is a reason you don’t see a recommendation to change those in any of the blogs, Books Online, or whitepapers for SQL Server. If you set ‘max server memory’ correctly Windows Memory Management never has to set a LowMemoryResourceNotification to begin with.

I was excited when I came upon this post, thinking that I could finally have some objective measure to tell whether too much memory had been allocated to SQL Server. I ran your query on one group of servers, and the results seemed generally consistent with my intuitions.

But then I ran it on a some slightly larger instances running on larger servers, and I found one case that just didn’t make sense to me. I find there are 256 rows returned in just the last 6 hours, and the lowest value for Available Physical Memory was 93,002,088 Kb. Yes, you’re reading that right — it’s a server with 144 GB RAM, and we typically have close to 89 GB available (adding “Standby” and “Free” as reported by Resource Monitor). With so much memory available, why would the OS be telling SQL Server to not grow its memory usage?

More details: the server is running Windows 2008 R2 Enterprise Edition, SP1, with SQL Server 2008 R2 Enterprise Edition SP2 CU5 (10.50.4276), and it has so much free RAM in order to support another instance under a failover condition.

Here are some recent data points:

2013-08-16 16:49:56.767 RESOURCE_MEMPHYSICAL_LOW 93200400 8430859444

2013-08-16 16:49:56.767 RESOURCE_MEM_STEADY 93200404 8430859956

2013-08-16 16:49:56.767 RESOURCE_MEMPHYSICAL_HIGH 93200404 8430859956

2013-08-16 16:49:56.767 RESOURCE_MEMPHYSICAL_LOW 93200400 8430859444

2013-08-16 16:49:56.767 RESOURCE_MEM_STEADY 93200404 8430859956

2013-08-16 16:49:56.767 RESOURCE_MEMPHYSICAL_HIGH 93200404 8430859956

2013-08-16 16:39:42.767 RESOURCE_MEMPHYSICAL_LOW 93190604 8430860616

2013-08-16 16:39:42.767 RESOURCE_MEM_STEADY 93190672 8430861384

2013-08-16 16:39:42.767 RESOURCE_MEMPHYSICAL_HIGH 93190672 8430861384

2013-08-16 16:39:42.767 RESOURCE_MEMPHYSICAL_LOW 93190604 8430860616

2013-08-16 16:39:42.767 RESOURCE_MEM_STEADY 93190672 8430861384

2013-08-16 16:39:42.767 RESOURCE_MEMPHYSICAL_HIGH 93190672 8430861384

I’d really like to understand how to interpret this information from the ring buffer, so I hope you can help me out. Thanks.

I found that your next post (https://www.sqlskills.com/blogs/jonathan/identifying-external-memory-pressure-with-dm_os_ring_buffers-and-ring_buffer_resource_monitor/) sheds quite a bit of light on my questions above. Thanks for your efforts to share what you’re learning.

Hi great reading, is there any reason to have “min memory” setting to anything else then 0, on dedicated servers we are using same numbers on “min” and “max”!

Hey Leif,

If the server is dedicated to SQL Server and has ‘max server memory’ set to prevent memory pressure, there is no benefit from setting ‘min server memory’ equal to ‘max server memory’ and at the same time there is not really a problem with doing it either. The ‘min server memory’ setting only takes effect after the buffer pool has ramped up and when Windows signals memory pressure and SQLOS responds by shrinking the buffer pool memory usage. When ‘min server memory’ has a value other than 0, that sets the limit to how far down SQLOS will shrink under pressure, which is not likely to happen on a dedicated box for SQL Server.

I am pretty sure I know the answer to this but I want to ask the pros. Example Scenario: I have a default instance “DB01” and one named instance “DB01\instance1” hosted on a windows 2012 server x64 with 6G Ram and 4 Core processor. “DB01” has max memory set to 6G ram and “DB01\Instance1” max memory is set to 6G Ram. Based on several forums I have read, both instances would be trying to use the full 6G ram and be in a split usage situation?

Thanks.

Yes, both instances would be constantly growing/shrinking memory usage to respond to the others memory pressure occurring. if you have 6GB total memory, you need to reserve some for the OS and other memory allocations, so you’d really be somewhere around 4-4.5GB max that you’d configure for max server memory, split however makes sense between the two instances to not have memory pressure issues. Otherwise the two instances are always going to be contending for memory against each other and the OS, as you currently have it setup.

Is it acceptable to have an occasional low memory condition? For example – if DB Engine and Analysis Services are running on the same server dedicated to a data warehouse and ETL operations?

I assume working memory is server memory – 4GB for OS, etc. I set max memory on the database engine to what is left over after minimal analysis services memory so while the expensive E and T parts of the ETL are running in the engine and analysis services is idle the engine is using the most memory it can. When it is time to Load then analysis services needs that memory and the engine should release memory (which according to your TSQL above, it is a couple times per day) and let analysis services start using it?

Hey Joel,

For systems that run BI in a box like you describe, I usually change the cube processing job and add a step before and after the cube processing runs to reduce max server memory in SQL before processing the cube to free the memory up for SSAS to use and then I reset it back to the normal value after the processing completes so SQL Server will use it again. This causes a one time flush of the internal caches and limits SQL Server from having to respond multiple times to memory pressure externally in the OS as SSAS grows it’s memory.

Friends,

We were always under impression to use 70% to 80 % of the total memory on the sever to be dedicated to SQL Server.

Also a doubt, the calculation mentioned above would be valid after the SQL Server is LIVE in production, but when we have a NEW SQL server, how to properly configure SQL Server’s Max and Min Settings?

Thanks

The calculation above is a base to start from on any new server. Then you should monitor as suggested in the blog post and increase max server memory based on what available memory remains to maximize the usage and configuration.

Thanks so much, Jonathan. I used your suggestion to create the following PowerShell script

$server = New-Object Microsoft.SqlServer.Management.Smo.Server "sqlservername\instance"$maxmem = $server.Configuration.MaxServerMemory

if ($maxmem.Maximum -eq $maxmem.ConfigValue) {

$reserve = 1

$totalMemory = $server.PhysicalMemory

if ($totalMemory -ge 4096) {

$currentCount = $totalMemory

while ($currentCount/4096 -gt 0) {

if ($currentCount -gt 16384) {

$reserve += 1

$currentCount += -8192

} else {

$reserve += 1

$currentCount += -4096

}

}

}

$sqlmax = [int]($totalMemory-($reserve*1024))

$maxmem.ConfigValue = $sqlmax

$server.Configuration.Alter()

}

I’ll probably be adding a few more to my SMO admin recipe page at https://blog.netnerds.net/smo-recipes/administration/ based off of the suggestions from your book. All credited, of course.

Thanks again!

Chrissy

Hi Jonathon, is the max server memory the amount of memory allocated to the buffer pool on all versions of SQL Server ?

The buffer pool is allocated from the max server memory configured value, but on 2012+ it also has other pools and memory allocations under max server memory.

Hi Jonathon,

Thanks for the article. A couple of follow-up questions:

Does the SQLOS Resource Monitor thread detect the low memory notification synchronously? If not, how often does the Resource Monitor check for low OS memory? Is it schedule-based? Event driven?

How bad is this? Is it an “expensive” activity, either from the OS perspective or the SQL Server perspective?

It is a separate thread subscribing to an OS notification so it has minimal performance overhead.

Jonathan,

I constantly reference this post as a starting point for my SQL Server memory configurations and I greatly appreciate it. Two questions have come to mind which I would love your thoughts on.

1) As we get to larger & larger amounts of RAM in a server, is their a point where the formula needs to change? For instance at 256GB of RAM, not unheard of these days there would be about 51 GB of RAM for Windows & other overhead, not a bad thing but I am also not sure that Windows needs quite that much, what are your thoughts? I do not have the environment to really test in, otherwise I would.

2) Given that the MAX Memory setting becomes the restriction for all sqlserv.exe memory usage starting with SQL Server 2012, how does that impact the calculation? It makes me think we can set MAX Memory a little higher to start especially as RAM amounts increase, as there is less memory use that is not restricted by the MAX Memory setting.

Thank you for your thoughts on this two questions!

Peter

Hey Peter,

For #1, from my experience it is often easier for people to see that there is Available Memory after the system has been up and running than it is to know they set max server memory to high, and memory pressure exists that causes cache store clock hand sweeps and memory to be returned to the OS, so I would prefer to set max server memory conservatively and then follow the advice I provide in the book and monitor Available MBytes and increase up from the initial value. You will find that even with 1TB of RAM it’s not leaving a whole lot of unused memory available after ramp up completes. It takes memory to map the memory allocations in the Kernel.

For #2, max server memory is not the absolute maximum in SQL Server 2012, 2014 or even 2016. It covers a larger portion of the memory requirements but it doesn’t cover all of them and you will still continue to see SQL Server using more than max server memory one every version after 2012. VAS allocations (SQLCLR is one of those) and the memory for the thread stacks (2MB per thread created) are both examples of things out of the max server memory value in 2012+.

Thank you for the explanation and the quick reply as well as the clarification on MAX Memory. I have not looked in any way, shape or form as of yet, is there any documentation on what MAX Memory covers and what it does not?

Thanks again!

Hey Jonathan,

Great post – I know it’s old, but still as relevant as ever. I have a question on a particular scenario I have:

I have 2 SQL 2016 named instances and a SQL 2012 instance running on a Windows 2012 Server with 32 cores and 512 GB of memory. The memory allocations are as follows:

SQL 2016 SP1 Instance 1 – 200 GB

SQL 2016 SP1 Instance 2 – 100 GB

SQL 2012 SP3 Instance – 100 GB

We still see events like this “A significant part of sql server process memory has been paged out. This may result in a performance degradation. Duration: 0 seconds. Working set (KB): 2408, committed (KB): 188956868, memory utilization: 0%%.” and server becomes unresponsive for hours, days on end, culminating in a reboot. During this slow period, task manager and perfmon still show plenty of free memory, but the server overall is extremely slow. To me, it looks like a memory leak or a memory mapping issue somewhere, but I would be interested in what you think.

I think you should have lock pages in memory set for the service accounts. https://www.red-gate.com/simple-talk/sql/database-administration/great-sql-server-debates-lock-pages-in-memory/