[NOTE: Update 12 Nov 2014. The most recent bug (KB article KB2965069) is THANKFULLY very unlikely for most environments (mentioned below). As a result, I’m glad to say that we can use OPTION (RECOMPILE) as a much easier (and safer) solution. So, while I’ll still leave solution 3 as an option if you run into troubles with OPTION (RECOMPILE), I can luckily say that if you’re on the latest SP/CU – the problems are incredibly unlikely. The BEST hybrid solution is shown below as solution 2.]

In my SQL PASS Summit 2014 session last week, I spoke about building high performance stored procedures and properly dealing with parameter sniffing problems… if you’re interested, the session was recorded for PASStv and you can watch it here.

UPDATE October 2020: Recently, I gave this session for a PASS DBA Virtual Chapter (September 2020) and you can view this through the PASS site (PASS.org / Learn (drop-down menu) / All Recordings / Filter for Tripp). The first in the list will be the most recent delivery. I did a long demo explaining Code Coverage testing vs. Plan Stability testing. This is a great session to have your developers watch! Then, for more detail: consider the Pluralsight courses on Optimizing Procedural Code (Part 1 and Part 2). Thanks!

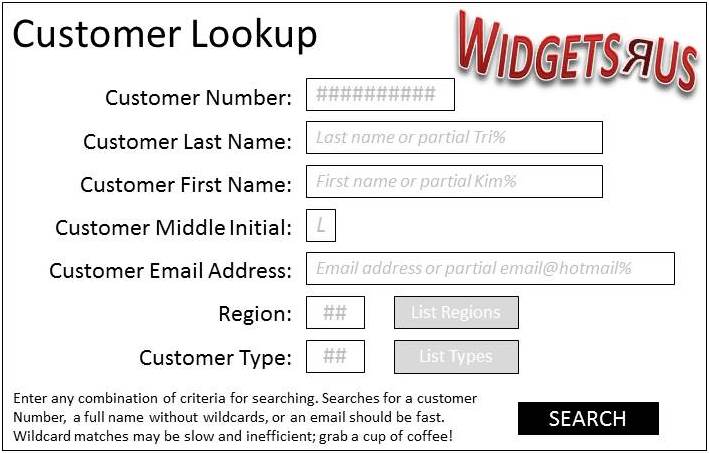

It all ties to something incredibly common in development – I call it the multipurpose procedure. Simply put, it’s one application dialog with many possible options and then sitting behind it – ONE stored procedure. This one stored procedure is supposed to handle every possible case of execution with multiple parameters. For example, imagine a dialog to search for a customer.

The user can enter in any combination of these elements and the procedure’s header looks like this:

CREATE PROC [dbo].[GetCustomerInformation] ( @CustomerID INT = NULL , @LastName VARCHAR (30) = NULL , @FirstName VARCHAR (30) = NULL , @MiddleInitial CHAR(1) = NULL , @EmailAddress VARCHAR(128) = NULL , @Region_no TINYINT = NULL , @Cust_code TINYINT = NULL ) ...And then the procedure’s main WHERE clause looks like this:

WHERE ([C].[CustomerID] = @CustomerID OR @CustomerID IS NULL) AND ([C].[lastname] LIKE @LastName OR @LastName IS NULL) AND ([C].[firstname] LIKE @FirstName OR @FirstName IS NULL) AND ([C].[middleinitial] = @MiddleInitial OR @MiddleInitial IS NULL) AND ([C].[EmailAddress] LIKE @EmailAddress OR @EmailAddress IS NULL) AND ([C].[region_no] = @Region_no OR @Region_no IS NULL) AND ([C].[cust_code] = @Cust_code OR @Cust_code IS NULL)GOOr, possibly like this:

WHERE [C].[CustomerID] = COALESCE(@CustomerID, [C].[CustomerID]) AND [C].[lastname] LIKE COALESCE(@lastname, [C].[lastname]) AND [C].[firstname] LIKE COALESCE(@firstname, [C].[firstname]) AND [C].[middleinitial] = COALESCE(@MiddleInitial, [C].[middleinitial]) AND [C].[Email] LIKE COALESCE(@EmailAddress, [C].[Email]) AND [C].[region_no] = COALESCE(@Region_no, [C].[region_no]) AND [C].[cust_code] = COALESCE(@Cust_code, [C].[Cust_code]);Or, maybe even like this:

WHERE [C].[CustomerID] = CASE WHEN @CustomerID IS NULL THEN [C].[CustomerID] ELSE @CustomerID END AND [C].[lastname] LIKE CASE WHEN @lastname IS NULL THEN [C].[lastname] ELSE @lastname END AND [C].[firstname] LIKE CASE WHEN @firstname IS NULL THEN [C].[firstname] ELSE @firstname END AND [C].[middleinitial] = CASE WHEN @MiddleInitial IS NULL THEN [C].[middleinitial] ELSE @MiddleInitial END AND [C].[Email] LIKE CASE WHEN @EmailAddress IS NULL THEN [C].[Email] ELSE @EmailAddress END AND [C].[region_no] = CASE WHEN @Region_no IS NULL THEN [C].[region_no] ELSE @Region_no END AND [C].[cust_code] = CASE WHEN @Cust_code IS NULL THEN [C].[cust_code] ELSE @Cust_code ENDBut, no matter which of these it looks like – they’re all going to perform horribly. An OSFA procedure does not optimize well and the end result is that you’ll get one plan in cache. If that was generated from a different combination of parameters than the ones you’re executing, you might get an absolutely abysmal plan. The concept is fairly simple – when a procedure executes and there isn’t already a plan in cache (for that procedure), then SQL Server has to generate one. To do so it “sniffs” the input parameters and optimizes based on the parameters sniffed. This is the plan that gets stored with the procedure and saved for subsequent executions. Depending on your workload characteristics, you might end up with a very common plan in cache and other users also executing the common parameters might see reasonable performance (especially if the WHERE does NOT use LIKE and has nothing but equality-based criteria [those aren’t quite as bad as this one]). But, there will still be cases where an atypical execution performs horribly.

And, unfortunately, sometimes the worst combination happens – a very atypical execution is the one that gets sniffed and then everyone suffers.

So, the admin might update statistics or force a recompile (or, even restart SQL Server) to try and get around this problem. These things “fix” the immediate problem by kicking the plan out of cache but they are NOT A SOLUTION.

Solution 1: The Simple Solution – OPTION (RECOMPILE)

Since SQL Server 2005, we’ve had an option to add OPTION (RECOMPILE) to the offending statement. When OPTION (RECOMPILE) works – it works incredibly well. However, there have been bugs and you’ll want to make sure that you’re on the latest SP or CU. It’s definitely had a bit of a checkered past. Here’s a list of issues and links to KB articles / bugs around this problem:

- KB2965069 FIX: Incorrect result when you execute a query that uses WITH RECOMPILE option in SQL Server 2012 or SQL Server 2014. Fixed in:

- Cumulative Update 4 for SQL Server 2014

- Cumulative Update 2 for SQL Server 2012 SP2

- Cumulative Update 11 for SQL Server 2012 SP1

- KB968693 FIX: A query that uses parameters and the RECOMPILE option returns incorrect results when you run the query in multiple connections concurrently in SQL Server 2008. Fixed in:

- SQL Server 2008 CU4

- SQL Server 2008 R2 CU1

- I don’t have a KB article link for this one but in both SQL Server 2008 and SQL Server 2008 R2 there are versions where some performance related features were unable. For example, filtered indexes could not be used inside of stored procedures even if you used OPTION (RECOMPILE) because of changes with the behavior or bugs. So, I’d fall back on using DSE (shown by solution 3).

Anyway, despite these issues (the first one listed above – is INCREDIBLY unlikely), I LOVE the simplicity of OPTION(RECOMPILE) because all you have to do is change the offending statement like this:

SELECT ... FROM ... WHERE ... OPTION (RECOMPILE);The reason I love this is for the simplicity of the statement. The reason that I have an issue with just doing this as a standard is two-fold:

- First, if you do this too often (or, for extremely frequently executed procedures), you might end up using too much CPU.

- Second, I’ve seen some environments where this is used to fix ONE problem and then it becomes a standard for ALL problems. This DEFINITELY should NOT become a standard coding practice. You only want to use this if your testing shows parameter sniffing problems and plan unstability. Stored procedures that have stable plans should be compiled, saved, and reused.

A better solution is one where you recompile for unstable plans but for stable plans – you place those in cache for reuse.

A Hybrid Solution – recompile when unstable, cache when stable

My main problem with adding OPTION (RECOMPILE) to many statements is that it can be costly to do when you’re executing thousands of procedures over and over again. And, if you really think about it, not all combinations really need to be recompiled.

For example, take an easier combination of just 3 parameters: CustomerID, Lastname, and Firstname. The possible combinations for execution will be:

- CustomerID alone

- Lastname alone

- Firstname alone

- CustomerID and Lastname together

- CustomerID and Firstname together

- Firstname and Lastname together

- Or, all three – CustomerID, Lastname, and Firstname

My questions for you to consider are:

- Are all of those going to want the same execution plan?

- And, what if they supply wildcards?

The most important consideration is that ANY query that submits CustomerID should NOT be recompiled, right? Think about it – if someone says I want to search for all of the people that have an ‘e’ in their last name (Lastname LIKE ‘%e%’) AND that have an ‘a’ in their first name (Firstname LIKE ‘%a%’) AND that have a CustomerID of 123456789 then all of a sudden this becomes an incredibly simple query. We’re going to go an lookup CustomerID 123456789 and then check to see if they have an ‘a’ in their first name and an ‘e’ in their last name. But, the lookup is incredibly simple. So, ANY execution where CustomerID is supplied should NOT be recompiled; the plan for those executions is STABLE. However, Lastname and Firstname searches might be highly unstable – especially if there’s a leading wildcard. So, if wildcard is present then let’s recompile if they don’t supply at least 3 characters for BOTH the first name and last name – preceding the wildcard character. And, it’s also OK if they don’t supply a wildcard at all. Then, the overall number of recompilations will be much lower – we’ll save CPU and we’ll get better performance by using a lot of STABLE, precompiled plans.

To do this, there are TWO versions. Solution 2 uses OPTION (RECOMPILE) strategically. Solution 3 using dynamic string execution instead.

Solution 2 – recompile when unstable [by adding OPTION (RECOMPILE)], cache when stable (using sp_executesql)

There are absolutely some criteria when plan stability can be predicted. For these, we will build the statement to concatenate only the non-NULL parameters and then execute normally using sp_executesql. For where it’s unknown or unstable, we’ll recompile by OPTION (RECOMPILE) to the statement. For all executions, use sp_executesql. And, of course, this is ONLY substitution for parameters. And, this should only be done for the most frequently executed procedures. You don’t have to do this for every procedure but it’s extremely beneficial for things that are heavily executed.

---------------------------------------------- -- Solution 2 ---------------------------------------------- CREATE PROC [dbo].[GetCustomerInformation] ( @CustomerID BIGINT = NULL , @LastName VARCHAR (30) = NULL , @FirstName VARCHAR (30) = NULL , @MiddleInitial CHAR(1) = NULL , @EmailAddress VARCHAR(128) = NULL , @Region_no TINYINT = NULL , @Member_code TINYINT = NULL ) AS IF (@CustomerID IS NULL AND @LastName IS NULL AND @FirstName IS NULL AND @MiddleInitial IS NULL AND @EmailAddress IS NULL AND @Region_no IS NULL AND @Member_code IS NULL) BEGIN RAISERROR ('You must supply at least one parameter.', 16, -1); RETURN; END; DECLARE @ExecStr NVARCHAR (4000), @Recompile BIT = 1; SELECT @ExecStr = N'SELECT COUNT(*) FROM [dbo].[Customers] AS [C] WHERE 1=1'; IF @CustomerID IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[CustomerID] = @CustID'; IF @LastName IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[LastName] LIKE @LName'; IF @FirstName IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[Firstname] LIKE @FName'; IF @MiddleInitial IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[MiddleInitial] = @MI'; IF @EmailAddress IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[EmailAddress] LIKE @Email'; IF @Region_no IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[Region_no] = @RegionNo'; IF @Member_code IS NOT NULL SELECT @ExecStr = @ExecStr + N' AND [C].[Member_code] = @MemberCode'; -- These are highly limited sets IF (@CustomerID IS NOT NULL) SET @Recompile = 0 IF (PATINDEX('%[%_?]%', @LastName) >= 4 OR PATINDEX('%[%_?]%', @LastName) = 0) AND (PATINDEX('%[%_?]%', @FirstName) >= 4 OR PATINDEX('%[%_?]%', @FirstName) = 0) SET @Recompile = 0 IF (PATINDEX('%[%_?]%', @EmailAddress) >= 4 OR PATINDEX('%[%_?]%', @EmailAddress) = 0) SET @Recompile = 0 IF @Recompile = 1 BEGIN --SELECT @ExecStr, @Lastname, @Firstname, @CustomerID; SELECT @ExecStr = @ExecStr + N' OPTION(RECOMPILE)'; END; EXEC [sp_executesql] @ExecStr , N'@CustID bigint, @LName varchar(30), @FName varchar(30) , @MI char(1), @Email varchar(128), @RegionNo tinyint , @MemberCode tinyint' , @CustID = @CustomerID , @LName = @LastName , @FName = @FirstName , @MI = @MiddleInitial , @Email = @EmailAddress , @RegionNo = @Region_no , @MemberCode = @Member_code; GOSolution 2 is the preferred and best method to create a balance where you don’t just recompile every time. This will give you better long-term scalability.

Solution 3 – recompile when unstable [by using DSE], cache when stable (using sp_executesql)

In the past, if I’ve ever run into problems with OPTION (RECOMPILE), I always consider (albeit, not lightly) rewriting the statement using a dynamic string instead; this always works! But, yes, it’s a pain to write. It’s a pain to troubleshoot. And, above all, yes, you have to be careful of SQL injection. There are ways to reduce and even eliminate the potential for problems (and, not all DSE can). Above all, you do need to make sure you have clean input (limiting only to valid input for the type of column such as characters for names and emails, etc.). Check out this blog post if you want more information Little Bobby Tables, SQL Injection and EXECUTE AS.

Based on that blog post, the solution to this problem is the following code.

-- To reduce the potential for SQL injection, use loginless -- users. Check out the "Little Bobby Tables" blog post for -- more information on what I'm doing in this code / execution -- to reduce the potential surface area of the DSE here. CREATE USER [User_GetCustomerInformation] WITHOUT LOGIN; GO GRANT SELECT ON [dbo].[Customers] TO [User_GetCustomerInformation]; GO -- You'll need this if you want to review the showplan -- for these executions. GRANT SHOWPLAN TO [User_GetCustomerInformation]; GO ---------------------------------------------- -- Solution 3 ---------------------------------------------- CREATE PROC [dbo].[GetCustomerInformation] ( @CustomerID BIGINT = NULL , @LastName VARCHAR (30) = NULL , @FirstName VARCHAR (30) = NULL , @MiddleInitial CHAR(1) = NULL , @EmailAddress VARCHAR(128) = NULL , @Region_no TINYINT = NULL , @Cust_code TINYINT = NULL ) WITH EXECUTE AS N'User_GetCustomerInformation' AS IF (@CustomerID IS NULL AND @LastName IS NULL AND @FirstName IS NULL AND @MiddleInitial IS NULL AND @EmailAddress IS NULL AND @Region_no IS NULL AND @Cust_code IS NULL) BEGIN RAISERROR ('You must supply at least one parameter.', 16, -1); RETURN; END; DECLARE @spexecutesqlStr NVARCHAR (4000), @ExecStr NVARCHAR (4000), @Recompile BIT = 1; SELECT @spexecutesqlStr = N'SELECT * FROM [dbo].[Customers] AS [C] WHERE 1=1'; SELECT @ExecStr = N'SELECT * FROM [dbo].[Customers] AS [C] WHERE 1=1'; IF @CustomerID IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[CustomerID] = @CustID'; SELECT @ExecStr = @ExecStr + N' AND [C].[CustomerID] = CONVERT(BIGINT, ' + CONVERT(NVARCHAR(30),@CustomerID) + N')'; END IF @LastName IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[LastName] LIKE @LName'; SELECT @ExecStr = @ExecStr + N' AND [C].[LastName] LIKE CONVERT(VARCHAR(30),' + QUOTENAME(@LastName, '''''') + N')'; END IF @FirstName IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[Firstname] LIKE @FName'; SELECT @ExecStr = @ExecStr + N' AND [C].[FirstName] LIKE CONVERT(VARCHAR(30),' + QUOTENAME(@FirstName, '''''') + N')'; END IF @MiddleInitial IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[MiddleInitial] = @MI'; SELECT @ExecStr = @ExecStr + N' AND [C].[MiddleInitial] = CONVERT(CHAR(1), ' + QUOTENAME(@MiddleInitial, '''''') + N')'; END IF @EmailAddress IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[EmailAddress] LIKE @Email'; SELECT @ExecStr = @ExecStr + N' AND [C].[EmailAddress] LIKE CONVERT(VARCHAR(128), ' + QUOTENAME(@EmailAddress, '''''') + N')'; END IF @Region_no IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[Region_no] = @RegionNo'; SELECT @ExecStr = @ExecStr + N' AND [C].[Region_no] = CONVERT(TINYINT, ' + CONVERT(NVARCHAR(5),@Region_no) + N')'; END IF @Cust_code IS NOT NULL BEGIN SELECT @spexecutesqlStr = @spexecutesqlStr + N' AND [C].[Cust_code] = @MemberCode'; SELECT @ExecStr = @ExecStr + N' AND [C].[Cust_code] = CONVERT(TINYINT, ' + CONVERT(NVARCHAR(5), @Cust_code) + N')'; END -- These are highly limited sets IF (@CustomerID IS NOT NULL) SET @Recompile = 0 IF (PATINDEX('%[%_]%', @LastName) >= 4 OR PATINDEX('%[%_]%', @LastName) = 0) AND (PATINDEX('%[%_]%', @FirstName) >= 4 OR PATINDEX('%[%_]%', @FirstName) = 0) SET @Recompile = 0 IF (PATINDEX('%[%_]%', @EmailAddress) >= 4 OR PATINDEX('%[%_]%', @EmailAddress) = 0) SET @Recompile = 0 IF @Recompile = 1 BEGIN -- Use this next line for testing -- SELECT @ExecStr -- For testing EXEC (@ExecStr); END ELSE BEGIN -- Use this next line for testing -- SELECT @spexecutesqlStr, @Lastname, @Firstname, @CustomerID; EXEC [sp_executesql] @spexecutesqlStr , N'@CustID bigint, @LName varchar(30), @FName varchar(30) , @MI char(1), @Email varchar(128), @RegionNo tinyint , @CustomerCode tinyint' , @CustID = @CustomerID , @LName = @LastName , @FName = @FirstName , @MI = @MiddleInitial , @Email = @EmailAddress , @RegionNo = @Region_no , @CustomerCode = @Cust_code; END; GOThis solution should NOT be used as a standard. But, it is an option for handling some cases where OPTION (RECOMPILE) isn’t giving you the plans / performance that you need. This solution is more complicated for developers to code. But, I’m not saying you have to do this for absolutely everything. Just work through the most critical procedures that are causing you the most grief.

The RIGHT way to handle multipurpose procedures

The hybrid solution (solution 2) is more complicated for developers to code. Remember, I’m not saying you have to do this for absolutely everything. Just work through the most critical procedures that are causing you the most grief (because they’re the most heavily executed procedures). The hybrid solution really gives you the best of all worlds here:

- It reduces CPU by only recompiling for unstable combinations

- It allows every combination executed with sp_executesql to get its own plan (rather than one per procedure)

- It allows stable plans to be put in cache and reused for subsequent executions (which reduces your impact to CPU and gives you long-term scalability)

Above all, you need to test that this works as expected but not only will you get better plans but you’ll also scale better!

Cheers,

kt

For more information

- Check out my PASS presentation DBA-313 here.

- Check out my Pluralsight course: SQL Server: Optimizing Ad Hoc Statement Performance here.

- Check out my Pluralsight course: SQL Server: Optimizing Stored Procedure Performance here.

- Check out my Pluralsight course: SQL Server: Optimizing Stored Procedure Performance, Part 2 (focused on session settings and further troubleshooting tips/tricks) here.

- Check out our Immersion Event: IEPTO1: Immersion Event on Performance Tuning and Optimization – Part 1 here.

- Check out our Immersion Event: IEPTO2: Immersion Event on Performance Tuning and Optimization – Part 2 here.

39 thoughts on “Building High Performance Stored Procedures”

Awesome. As always.

Thanks Mike!

Thank you Kimberly for an excellent post, particularly in drawing attention to the issues with OPTION (RECOMPILE). Would I be right to say that this is primarily a problem with OLTP contexts where there is a high likelihood of concurrent executions with different parameters? It still looks a potentially useful tool for reporting or ETL queries where parameterisation is less complex, concurrent execution is highly unlikely and there is a significant difference in plan depending on parameter chosen.

Yes, we’re still verifying the full extent of the KB article and the repro of the bugs on newer versions. I’m hopeful that the problems are VERY limited and isolated (yes, the current expectation is that they are for more high-volume OLTP environments with a high amount of concurrent recompilations).

So, yes, the simplicity of OPTION (RECOMPILE) is certainly attractive. Really, there are three possible solutions and each one has pros / cons:

* Solution 1 is just to add OPTION (RECOMPILE) to the offending statement and recompile for every execution.

Pro – simple

Con – it recompiles every time and this can eat up more and more CPU

* Solution 2 is to build the statement to use sp_executesql (so that – at a minimum – you’d have one plan per non-NULL parameter combination). But, “the twist” is to programmatically determine recompilation with a flag tied to the stability of the parameters. You can default it to recompile BUT if there are any parameters that guarantee a simple / stable plan (something like CustomerID or EmailAddress or Lastname+Firstname [with at least 3 leading characters EACH]) THEN, you flip off the recompilation bit and just let sp_executesql put the plan in cache and reuse it. If @recompile = 1 then the statement has OPTION (RECOMPILE) added to the end of it. It ends up looking like this:

IF @Recompile = 1

SELECT @spexecutesqlStr = @spexecutesqlStr + N’ OPTION(RECOMPILE)’;

then, the sp_executesql statement

* Solution 3 is to build the same statement in parallel to the sp_executesql statement but for execution with EXEC (@str). With the EXEC (@str) version I don’t need to add OPTION (RECOMPILE) because the statement will become adhoc (and probably NOT safe) and therefore it will be recompiled. The end result here is that I just don’t have to worry about the potential for the OPTION (RECOMPILE) bug. But, it does make the statement more complicated and it can make things hard to troubleshoot. And, you have to be careful so that you don’t introduce possible SQLinjection potential by making sure that you protect these parameters. You want to make sure that you don’t just build any statement with any input.

So, this 3rd solution can be difficult and you have to be careful – yes. But, it ALWAYS works and it is not prone to any of the past (or, possibly future) bugs with OPTION (RECOMPILE). But, the jury is still out on the extent to which the bug reveals itself on 2012 and 2014. I’m working to learn more specific details myself as I haven’t personally seen it (I’ve only seen the past bugs and the NEW KB article that sounds eerily familiar and makes me somewhat nervous [again] of trusting OPTION (RECOMPILE)).

hth,

kt

Thanks for a great explanation of the issues involved with parameter sniffing, etc.

2 questions on your choice of datatypes for the @ExecStr and @spexecutesqlStr variables:

1) Why nvarchar(4000) for @ExecStr, when I believe EXEC natively takes varchar (up to 8000) and your parameters are mostly varchar.

2) Why limit @spexecutesqlStr to nvarchar(4000). I usually use nvarchar(MAX), just to make sure I don’t have an issue with truncating code inadvertently. I suppose if you are sure the underlying sproc will be less than 4000 characters it doesn’t matter, but it might give the impression that the most you could use is 4000 which isn’t true.

Great point. I wasn’t trying to imply a maximum. Really, it was only because I knew these particular strings were nowhere near even that limit. You can certainly go with n/varchar(max) instead and then you don’t have any limitations of string length. And, yes, I could have gone with varchar(max) because all of my parameters are varchar (and not nvarchar). Definitely wasn’t trying to imply a 4000 character / 8000 byte limit! I will update this so that my strings aren’t limited.

Cheers,

kt

Another option I’m particularly fond of is writing several stored procedures that handle the more commonly used subsets of queries. Then write a master sp that the clients use and internally EXEC sp_executesql the correct subset-handler… this way you get precompiled plans on all the specializations and the main proc is basically a trivial switch statement.

Yes, this can work too. The only concern is around conditional logic *inside* the main calling procedure. Any statement that SQL Server can optimize, it will. So, if you want to avoid statement optimization for parameters that are not intended for it – then you need to call subprocedures. But, yes, conditionally branching and executing can also work really well! I totally agree!

Cheers,

kt

Hi Kim,

Doesn’t really apply to the overall objective of this article, but I am trying to figure out how this search criteria for PATINDEX works? PATINDEX(‘%[%_?]%’, @LastName.. most importantly the [%_?] portion. Can you shed some light on this. Thanks

Hey there Victor – Actually, I’m surprised that no one else has asked! The square brackets say “this character as a literal” and then the % before and after symbolizes any other characters before or after. So, translating the entire string ‘%[%_?]%’ means:

any characters

then – specifically ONE of these characters: % OR _

then any characters.

In SQL Server, those are wildcard characters:

% is for a range of characters (0 to many)

_ is for exactly one character ( = 1 )

? is a place holder that some applications use and I don’t want that either… I have to admit, I don’t remember why I did this one originally but it really

So, I use that to make sure that the FIRST wildcard is at least n characters in.

Hope that helps!

kt

Yes, thanks

Thank you, thank you, thank you….

This was my #1 reason for attending IE2 in Bellevue this past June and I’ve been needing to refresh my memory with notes taken from the class… You just saved me a ton of work again… You guys rock.

I also have a new SQL dev starting and we have a new multipurpose search screen and I wanted to use these techniques right from the get go on it.

Absolutely. Pass this on to your developers as a second step after having tested that the procedures work (and that you have good code coverage). As my second pass, I like to test “plan stability” by testing a variety of different combinations of parameters WITH RECOMPILE. (where p1, p2, p3, p4, etc. are DIFFERENT but likely combinations)

EXEC proc p1, p2, p3, p4, p5, p6 WITH RECOMPILE

EXEC proc p1, p2, p3, p4, p5, p6 WITH RECOMPILE

EXEC proc p1, p2, p3, p4, p5, p6 WITH RECOMPILE

EXEC proc p1, p2, p3, p4, p5, p6 WITH RECOMPILE

Then, I check to see if the plans are the same. If they are then I know that the plan is stable*. If they’re not – then I have to look at doing something else!

*NOTE: There are some things that EXEC … WITH RECOMPILE does NOT do (where as OPTION (RECOMPILE) will). For example, it won’t allow the use of filtered indexes. My main point is that EXEC…WITH RECOMPILE is NOT perfect. There are some limitations. But, largely, this is still a good test.

Hope this helps!

k

Drukarnia powinna dysponowa_ sprz_tem, kt

Have you considered using inline table-valued user-defined functions for this purpose? The WHERE clause in the function SELECT statement can look like the examples you provided, but when you pass in NULL for certain parameters the filtering for those parameters is not included in the execution plan.

I have not done any testing / comparisons here but these are NOT apples to apples. Functions might be able to get a new plan for unstable combinations but stable combinations will never be cached. So, a lot more testing should be done to get a full comparison. I tend to prefer stored procedures over functions for a lot of reasons – most often related to performance.

k

I came across this post when reviewing the SQLSkills newsletter.

Erland Sommarskog did a very long article on using dynamic SQL in stored procedures here http://www.sommarskog.se/dynamic_sql.html which I have recommended around to oter developers, but I think this provides a more digestible variation.

Thank-you!

Yes, Erland has a number of great suggestions. A good resource for sure!

Thanks,

k

Hi Kimberly

re solution 2, this is the method i’ve been using when i’ve optional parameters(learned from Erland’s post) and my question is, you use recompile option and Erland’s version doesn’t. I tested couple of my existing procedures and based on my parameters the plan seem to change correctly and dont seem to have sniffing problem. am i missing something ?

is recompile is needed with solution 2 ? Thanks for the advise.

Hey there sqlpro – Actually, yes, that’s the entire point of solution 2. Using sp_executesql with dynamically constructed strings can still be prone to parameter sniffing problems. However, one big difference is that you’ll have one plan per statement generated. But, for any combinations that could be unstable, it’s critical to add OPTION (RECOMPILE).

Hope that helps!

k

Great article / video. Very thorough. Wish I could have seen it live because I have experienced those patterns a lot, and have fallen in the “we’ll just recompile” solution more than once.

But I know why many don’t use such a pattern (anymore) – and the culprit is ORM.

ORMs are a huge part of development these days, and that makes “us” (developers, SQL and non-SQL alike) very reliant on the more or less “fun” SQL that ORM engine will pop out.

And that makes developers who do the OSFA procedures do them in – for example Linq – and that makes the problem with parameter sniffing and plan cache – all the more tricky to solve.

I would think that developers that can be taught to use this smart pattern of dynamic SQL (without falling for injection issues) could also be taught to make the proper queries to begin with, lessening the need for OSFA procedures.

But alas, instead I’ll have to solve a ten+ union query and get that to work somewhat sensible due to ORMs :)

Agreed! Thanks for your comment Allan. And, have fun with that union. ;-)

k

I posted a very reasonable and relevant question/comment yesterday, but it appears to have been deleted. I’m curious about why it was deleted. The suggestion I offered (using a function) may accomplish the same goal without the significant drawbacks of using dynamic SQL.

Your comment was not deleted. But I had not yet approved it because I had not yet had time to do some TESTING to really see if it’s true without any downsides. If I’m being honest though, I’m actually a bit frustrated by how rude THIS comment is. First, you ASSUME that I’m doing something purposely deceitful. (Really? Why would I do that?) Second, you’re not using your real name.

You are more than welcome to write up a detailed blog post of how you think a function completely solves all of the problems here. However, I’m already certain that you’ll run into one issue and that’s that the statement of a multi-statement function runs as adhoc. You might get the benefit of a good plan but you’ll never get the benefit of a compiled plan for the parameter combinations that are stable. So, you might solve one part of the problem but not the other. And, I would have liked to have done some more testing to really see if these are apples to apples but instead I’ll just “approve” your comment so that you know that I didn’t delete it.

k

You said “So, let’s recompile if they don’t supply at least 3 characters for BOTH the first name and last name.” and the code is:

SET @Recompile = 1

IF (

-- User provided wildcard character and has at lease 3 non wildcard characters in front of it

PATINDEX('%[%_?]%', @LastName) >= 4

-- No wildcard characters provided

OR PATINDEX('%[%_?]%', @LastName) = 0

)

AND (

PATINDEX('%[%_?]%', @FirstName) >= 4

OR PATINDEX('%[%_?]%', @FirstName) = 0

)

SET @Recompile = 0

I’m a little confused about the logic. If @LastName is “ab” and @FirstName is “cd”, then this statement will set @Recompile to 0, which is not “recompile if they don’t supply at least 3 characters for BOTH the first name and the last name”, right?

I was a bit short in my full explanation there… I was describing the problems with wildcards and this was ensuring that there were at least 3 characters that precede a wildcard OR no wildcard at all. I’ve clarified this in the post.

And, it’s absolutely fine to not recompile for lastname = ‘ab’ and firstname = ‘cd’ because those are seekable values.

Thanks!

k

Thanks for this post, Kimberly.

Like another commenter wrote, this topic was one of my favorite parts of IE2 in Bellevue in June 2014.

I was fuzzy on some of some of details, so seeing it again was very useful.

It took me a moment to figure out why

WHERE 1=1is in the SQL string — I assume this is so that you don’t have to check whether you need anANDbefore appending to theWHEREclause?Yes, exactly. Creating the dummy WHERE 1=1 means I already have a WHERE clause and I don’t need to check to see if I need to add ONLY

AND col = stringvs.WHERE col = string.Cheers James!

k

Thanks for this post, Kimberly.

Please, how can i test plan stability in general.

Is there any solution/terminology to determine this ?

Yes! When you create the procedure AND test it… run through your code-coverage tests (as usual). Then, take EVERY scenario tested by your code coverage tests and run them WITH RECOMPILE.

— Scenario 1

exec procedure param, param, param, etc. WITH RECOMPILE

— Scenario 2

exec procedure param, param, param, etc. WITH RECOMPILE

— Scenario 3

exec procedure param, param, param, etc. WITH RECOMPILE

For A BUNCH of scenarios. IF the plans are different across a wide variety of scenarios then you know that your procedure is NOT stable!

Hope that helps!

k

I have come across parameter sniffing issue. I’ve defined local variables of each input parameter and assign parameter value onto corresponding local variable. It fixed the issue however, still sometime stored procedure runs slow on any set of parameter. Do you see any issue using local variable to tackle the parameter sniffing issue?

Thanks.

Using a variable to “obfuscate” (or mask/hide) a parameter means that SQL Server cannot sniff the value nor can it use the histogram. Instead, SQL Server must use the density_vector (which really gives them an “average” rather than a parameter-specific plan). This can often work when the parameters AND data are both focused on relatively consistently patterned data (ideally, even-distributed data). But, when they’re not – the average might not work. This is likely why you don’t ALWAYS see good performance. I’m a much bigger fan of recompiling and sniffing for values that need it and using an average plan (or a cached plan) for those that don’t. To get better performance, I’d use the strategy described by this blog post!

Hope that helps!

k

Thanks For Your Post.

What is means :

%[%_?]%

% is a wildcard for 0-many characters

_ is a wildcard for exactly ONE character

brackets [ or, anything WITHIN brackets ] is a “literal” so what it’s saying is specifically the CHARACTERS % and _

? is really an application wildcard and I don’t really need to use it here… I could remove that an make it just

So, %[%_]% translates into:

anything [then specifically % or _] anything

What I’m looking for is whether or not there are SPECIFICALLY supplied wildcard characters!

And, I’m also going to remove the ?

Cheers,

k

A qn. re. SP compilation process, which struck while listening to you presentation @ PASS on “Stored Procedure Optimization” @ Alaska …

On the slide about “Processing Stored Procedures”, you mention that if the SP references any temp object, than SQL Server does not proceed further on name resolution and defers that to runtime? I guess this is what BOL refers to as deferred name resolution.

However, is it correct to interpret the above as –

if a SP creates & then refers to a temp table, then during CREATE/ALTER, there will not be any name resolution happening, for any object referred in that SP?

Or, does it do and retains info. about name resolution (for permanent objects) until it first encounters a reference to a temp table?

I’m not sure, how this affects dependency info. that the catalog maintains, but I guess, in the case, it may not be up-to-date – is that correct?

Just couldn’t reconcile, thus seeking to get clarity; hence the question – hope that’s fine…

Thanks.

TSQLDev

If there are any temp objects then resolution is completely deferred to runtime!

Cheers,

k

Thank you for patience and replying and encouraging each question. I agree your tagline..Improving skills through our questions.Appreciated. I am recently doing sproc optimization to one of my client. I hope I will not run into new issues.. :)

I am reading your courses in PluralSight and YouTube video on Stored Procedure Optimization Techniques.

Thank you KT.

Thank you for the post, Kimberly! If sp_executesql stores plans for different combinations of parameters, why not use it in all the stored procedures? Won’t it help produce appropriate execution plans even in cases where Dynamic SQL is not needed?

Hey there Sam – It’s more that EACH version of the statement (regardless of the values of the parameters) is stored – and, just like procedures (prior to 2022) stored with the plan that’s determined from the first set / combination of values. It really works exactly the same way as stored procedures EXCEPT that you can build the statement dynamically so that you don’t let null parameters affect a plan. And, you end up with multiple versions of the correct statement – to each have a plan. But, when those parameters could affect the variations of data – you want to sp_executesql with option recompile so that that particular statement is not cached!

I hope that helps! Let me know if you want further clarification!

Cheers,

k