Query Store retains query performance data at the plan level. This data is then broken out into intervals of time, determined by the INTERVAL_LENGTH_MINUTES setting. The time intervals are found in the sys.query_store_runtime_stats_interval system view, and the start_time and end_time columns are of the DATETIMEOFFSET data type. This means that the date is time-zone aware, and in Query Store the data is stored as UTC. Now why does all of this matter? Because handling dates in Query Store is important if you’re going to query the data directly.

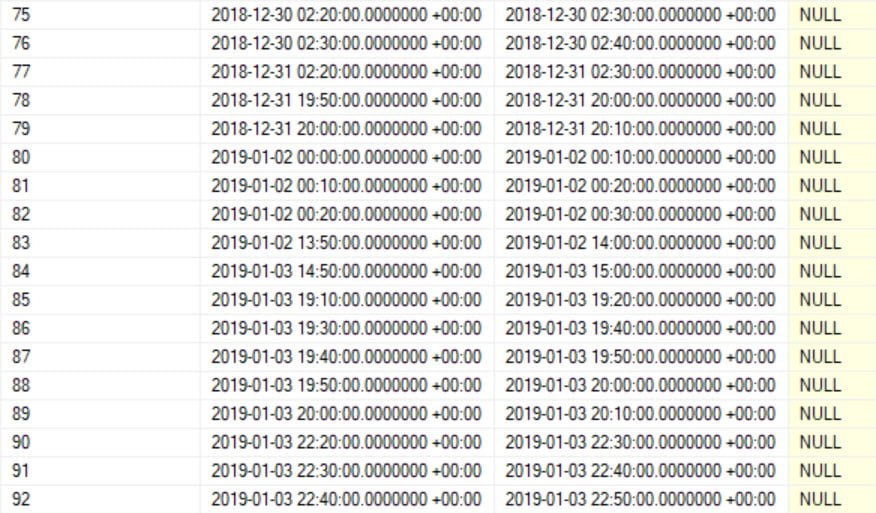

Here’s what we get with a simple query against the runtime stats interval view:

SELECT * FROM sys.query_store_runtime_stats_interval ORDER BY runtime_stats_interval_id; GO

These are 10 minute intervals and notice that they all have +00:00 for the offset, which is +/- UTC. If you want to display data based on the intervals, and convert it to your local timezone, you need to use AT TIME ZONE. We can use a variation of a query from my last post, Finding the Slowest Query in a Stored Procedure, to see this in action.

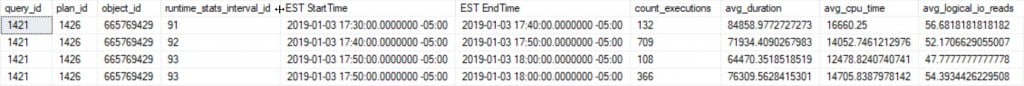

SELECT [qsq].[query_id], [qsp].[plan_id], [qsq].[object_id], [rs].[runtime_stats_interval_id], [rsi].[start_time] AT TIME ZONE 'Eastern Standard Time' [EST StartTime], [rsi].[end_time] AT TIME ZONE 'Eastern Standard Time' [EST EndTime], [rs].[count_executions], [rs].[avg_duration], [rs].[avg_cpu_time], [rs].[avg_logical_io_reads] FROM [sys].[query_store_query] [qsq] JOIN [sys].[query_store_query_text] [qst] ON [qsq].[query_text_id] = [qst].[query_text_id] JOIN [sys].[query_store_plan] [qsp] ON [qsq].[query_id] = [qsp].[query_id] JOIN [sys].[query_store_runtime_stats] [rs] ON [qsp].[plan_id] = [rs].[plan_id] JOIN [sys].[query_store_runtime_stats_interval] [rsi] ON [rs].[runtime_stats_interval_id] = [rsi].[runtime_stats_interval_id] WHERE [qsq].[query_id] = 1421 AND [qsp].[plan_id] = 1426 AND [rsi].[end_time] > DATEADD(HOUR, -1, GETUTCDATE()) ORDER BY [rs].[runtime_stats_interval_id]; GO

Since I’m in chilly Cleveland, OH, which is in the Eastern Time Zone, I’ve converted the data as such. If you’re not familiar with the exact time zone text you can use, query sys.time_zone_info. Note that DATETIMEOFFSET does not account for Daylight Savings Time, so you’ll have to handle that in your code (yay for writing this post in January).

Separate from displaying the date, you may want to query for a particular range, as I’ve done in the above query with AND [rsi].[end_time] > DATEADD(HOUR, -1, GETUTCDATE()). Notice that I’m using GETUTCDATE(), which is due to an interesting behavior with DATEADD which Aaron Bertrand has detailed in his post, Performance Surprises and Assumptions: DATEADD. There are other date fields you could use here, such as last_execution_time, and it, as well as every other date column in the Query Store system views, is DATETIMEOFFSET. Of note: last_execution_time exists in in sys.query_store_query, sys.query_store_plan, and sys.query_store_runtime_stats but they can and will be different between a query, its plan(s), and the runtime stats intervals.

Hopefully this helps the next time you’re writing queries against the Query Store system views!

One thought on “Handling Dates in Query Store”

You hv always been superb..

So it means when we are extracting reports manually we need to keep in time datetimeoffset columns while query store reports adjust them seleves.

Thank you