I am a HUGE fan of updating statistics as part of regular maintenance. In fact, if you don’t know if you have a step or job that updates out of statistics on a regular basis, go check now! This post will still be here when you get back 😊

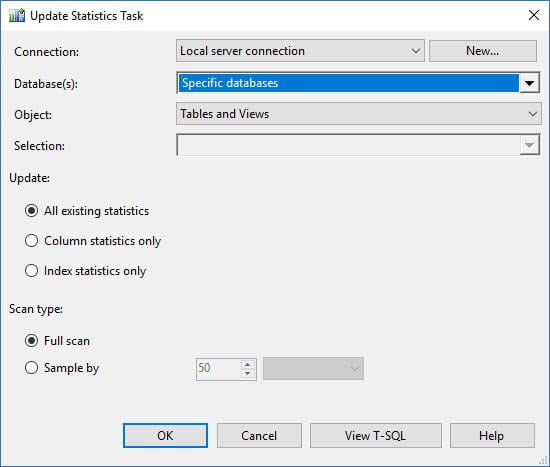

At any rate, for a long time the default options for updating statistics were pretty much a sledgehammer. Within the maintenance plan options, the Update Statistics Task only provides the option to update Index statistics, Column statistics, or both. You can also specify whether it is a full scan or a sample for the update, but that’s about it:

I don’t like this option because it means that statistics that have had little or no change will be updated. I could have a 10 million row table where only 1000 rows change, and yet the statistics for that table will update. This is a waste of resources. For a small database, or system that’s not 24×7, that isn’t such a big deal. But in a database with multiple 10 million row tables, it is a big deal.

The sp_updatestats command isn’t a favorite of mine either. I’ve written about that here, so I won’t re-hash it.

If you have used Ola Hallengren’s scripts for maintenance, you hopefully know that it will also update statistics using the @UpdateStatistics parameter. The default value for this is NULL, which means do not update statistics. To be clear, if you drop in Ola’s scripts and have it create the jobs for you, and then you start running the “IndexOptimize – USER_DATABASES” job, by default you’re not updating statistics. The code the IndexOptimize – USER_DATABASES job has, by default, is:

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @LogToTable = 'Y'

If you want to have the job also update statistics, you need:

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @UpdateStatistics = 'ALL', @LogToTable = 'Y'

With this variation, we are updating index and column statistics, which is great. But…we are updating them regardless of whether it’s needed. Statistic with no rows modified? Update it. Statistic with 10 rows modified? Update it.

There has always been an option to only update statistics that have changed, this is the @OnlyModifiedStatistics option, and this gets us behavior just like sp_updatestats.

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @UpdateStatistics = 'ALL', @OnlyModifiedStatistics = 'Y', @LogToTable = 'Y'

With this option, if no rows have changed, the statistic will not be updated. If one or more rows have changed, the statistic will be updated.

Since the release of SP1 for 2012, this has been my only challenge with Ola’s scripts. In SQL Server 2008R2 SP2 and SQL Server 2012 SP1 they introduced the sys.dm_db_stats_properties DMV, which tracks modifications for each statistic. I have written custom scripts to use this information to determine if stats should be updated, which I’ve talked about here. Jonathan has also modified Ola’s script for a few of our customers to look at sys.dm_db_stats_properties to determine if enough data had changed to update stats, and a long time ago we had emailed Ola to ask if he could include an option to set a threshold. Good news, that option now exists!

Using Ola’s script to update statistics based on a threshold of change

With the IndexOptimize stored procedure Ola now includes the option of @StatisticsModificationLevel. You can use this to set a threshold for modifications, so that only statistics with a specific volume of change are updated. For example, if I want statistics updated if 5% of the data has changed, use:

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @UpdateStatistics = 'ALL', @StatisticsModificationLevel= '5', @LogToTable = 'Y'

Take note: the option @OnlyModifiedStatistics option is not included here…you cannot use both options, it has to be one or the other.

This is great! I can further customize this for different tables. Consider a database that has a very volatile table, maybe dbo.OrderStatus, where auto-update may or may not kick in during the day, so I want to make sure stats are updated nightly:

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @Indexes = 'ALL_INDEXES, -SalesDB.dbo.OrderStatus', @UpdateStatistics = 'ALL', @StatisticsModificationLevel= '10', @LogToTable = 'Y'

This will address fragmentation and update statistics for all tables in the SalesDB database except dbo.OrderStatus, and it will update statistics if 10% or more of the rows have changed.

I would then have a second job to address fragmentation and stats for OrderStatus:

EXECUTE [dbo].[IndexOptimize] @Databases = 'USER_DATABASES', @Indexes = 'SalesDB.dbo.OrderStatus', @UpdateStatistics = 'ALL', @StatisticsModificationLevel= '1', @LogToTable = 'Y'

For the dbo.OrderStatus table, statistics would be updated when only 1% of the data had changed.

I love the flexibility this provides!

You might be wondering why I chose 1%…take a close look at this important note which is included in Ola’s documentation:

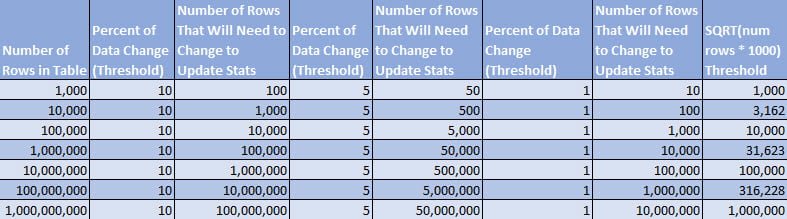

Statistics will also be updated when the number of modified rows has reached a decreasing, dynamic threshold, SQRT(number of rows * 1000)

This is critical to understand because if the threshold I have set for @StatisticsModificationLevel ends up having a number of rows HIGHER than the formula above, statistics will update sooner than I expect.

For example, if I have 1 million rows in a table and I have @StatisticsModificationLevel = 10, then 10% of the rows, or 100,000, have to change in order to update statistics. HOWEVER, if you plug 1 million into SQRT(1,000,000 * 1000), you get 31,623, which means Ola’s script will update statistics after 31,623 rows have changed…well before 100,000.

This may be important for some of you to understand in terms of these thresholds, so I dropped the information into a table to make it easier to comprehend (at least, it’s easier for me!).

Using my original example, if dbo.OrderStatus has about one million rows, then with 1% as the threshold, only 10,000 rows need to change before stats are updated. If the SQRT algorithm were used, over 30,000 rows would need to change before stats were updated, and depending on the data skew, that might be too high.

Understand that as tables get larger, statistics will likely be updated before the set percentage value is reached because the SQRT algorithm has a lower threshold. (Yes, I’m driving this point home.) Consider a table with 10 million rows. If I set the threshold to 5%, I would expect statistics to update after 500,000 modifications, but in fact they will update after 100,000.

If you’re wondering where the SQRT algorithm comes from, please review Microsoft’s Statistics documentation. This threshold was originally introduced with trace flag 2371 to lower the threshold for automatic updates. It is applied by default started in SQL Server 2016 when using compatibility level 130. My assumption is that Ola determined this was a good threshold to use as a fail-safe/catch-all for his script, and I think it was smart move on his part. In general, I’d rather have statistics update too often, rather than not often enough. However, using the new @StatisticsModificationLevel option gives us better control than we’ve had previously, unless we write a custom script (which is still an option…do what works best for you!).

22 thoughts on “Updating Statistics with Ola Hallengren’s Script”

Thanks Erin. Very informative and useful article – as always.

A big improvement for partitioned tables is that statistics update now executes at partition level – like rebuild and reorganize do.

… when statistics_incremental is turned on.

I’ve been using natural Log functions to the same effect as part of my home-built tools for identifying statistics that need updating.

About 5 years ago I switched to using the Hallengren solution for database maintenance and have pretty much discontinued my own scripts as they just work!

Thanks for explaining this particular feature of these scripts and the useful table.

Would love to see a filter for page count as well. I have to make a custom script currently because anything over 500k pages kills our read only nodes on our AG. Just a thought.

Chris-

There is a filter for page count @MaxNumberOfPages. This applies to the indexes, and you may have to get creative in how you use this (and possibly create multiple jobs), but you should be able to use this for stats as well.

Erin

This is great! Exactly what I need!

Thanks!

This is great, but there is one gotcha with this and that is that the persisted sample percent actually gets cleared out when rebuilding an index. There is a thread mentioning this issue:

Until the index rebuilding issue gets resolved, i would recommend to look into a job that catches the indexes that were rebuilt and set the persisted sample rate for those indexes after running the indexoptimize job.

Thanks for the note!

Hi Erin,

Just to give you an overview, we have around 10 SQL Servers, which vary from SQL 2000 To SQL 2016 and multiple databases are hosted on each SQL server. DBs are not used on these servers after hours and DB size is not too big. Current configuration on these servers are : Rebuilding Index task runs on every Sunday using in-house script and then has the Update Statistics job which runs on every Wednesday at 9:00 pm using the sp_updatestats command. Now I am thinking to switch to Ola’s Index optimize script.

Is this the best way to configure Ola’s IndexOptimize script to rebuild the Index and create statistics in the same task or would you configure it separately? One of the issues I see with the below approach is that it will update Index statistics again. Example as below.

Could you please advise?

EXECUTE dbo.IndexOptimize

@Databases = ‘USER_DATABASES’,

@FragmentationLow = NULL,

@FragmentationMedium = ‘INDEX_REORGANIZE’,

@FragmentationHigh = ‘INDEX_REBUILD_OFFLINE’,

@FragmentationLevel1 = 5,

@FragmentationLevel2 = 30,

@MinNumberOfPages = 1000,

@FillFactor = 80,

@StatisticsSample = 100,

@UpdateStatistics = ‘ALL’,

@OnlyModifiedStatistics = ‘Y’

@LogToTable = ‘Y’

See this. This will update Stat, and not index.

Set Null to @Fragmentation Low, Medium, High.

D. Update modified statistics on all user databases

EXECUTE dbo.IndexOptimize

@Databases = ‘USER_DATABASES’,

@FragmentationLow = NULL,

@FragmentationMedium = NULL,

@FragmentationHigh = NULL,

@UpdateStatistics = ‘ALL’,

@OnlyModifiedStatistics = ‘Y’

I don’t think the Auto-stats formula of SQRT(1000*number or rows) is a good formula at all. It will take STATS more frequently on larger tables, which is actually not what you usually want. Larger tables tend to have very good statistics that don’t need to be re-statted frequently over time. This formula goes totally against that assumption and you end up spending a LOT of time taking these stats just because a small percentage of rows changed on a very large table.

I thought the Ola change to include StatisticsModificationLevel was a good one, until I realized that he is using the exact same threshold to determine whether to take stats or not. Too bad we can not override this and simply use the Modification percentage by itself and not an OR situation with the autostats formula.

Robert-

You can override what Ola has…by changing his base stored procedure. We have done this for a couple of our customers who wanted a different threshold. You could also put in a request to Ola that he make the threshold customizable, but in the end, it’s T-SQL, so if you have the time to dig into that section of the code, I bet you can get it to do what you want.

Erin

@UpdateStatistics = ‘ALL’,

@StatisticsModificationLevel = say ’10’

if above options are used in ola’s index maint job, then if a index rebuild is done by it , will it again update the index stats?

It will not.

Hi Erin,

Thanks for this post it was a good read.

This may sound dumb but I have a small question re @StatisticsModificationLevel= ‘1’. I think the parameter is an integer value so why are you passing it single quotes.

Many thanks

Not sure, habit perhaps. Feel free to try it without.

I’ve a 2 TB database

And it already has about 5 tables ,each of 140 GB.

I used to rebuild indexes on fragmentation 1% on daily basis , and I’m sure rebuild index , update relevant stats of those indexes .

Then I update statistics using OLA for columns,and indexes on modification level 1 with full scan .

But when I look at statistics after the job rub, I find modification counter still have values of thousands…

I’m very unsure if this causes problems

Especially for those big tables with 500 million records …

Should I use another script to update statistics based on modification counter

What version of SQL Server are you running, what is the compat mode for the database, what version of Ola’s script are you running, and what exact commands are you running with Ola’s script?