(Happy Independence Day to everyone back home in the USA! We’re in Dublin for another week…)

Back at the end of April I conducted a survey of transaction log characteristics (see here) and now I present the results to you.

I had a great response to the survey from almost 100 people, and the total data set for these observations is 16841 databases on 847 SQL Server instances (SQL Server Edition is irrelevant).

This isn’t the most exciting blog post, but I at least found the numbers interesting – hope you do too! I’ve got some pointers in the summary to log management blog posts.

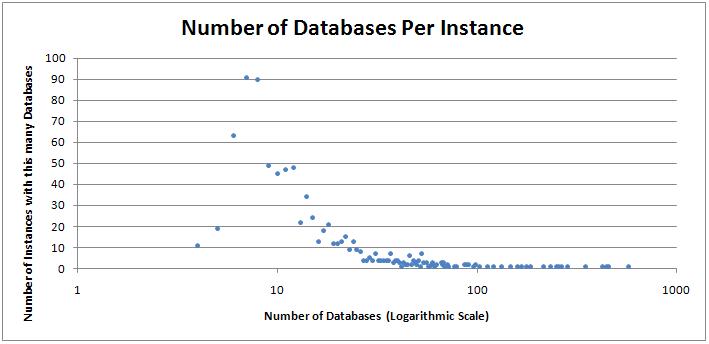

Number of Databases Per Instance

Each plot on the graph below is a count of the number of instances having that many databases, including the four system databases.

Just under 75% of instances have 20 or fewer databases (including system databases). The highest number per instance is 571.

Number of Log Files Per Database

This wasn’t worth plotting. The results are:

Log Files Number of Databases 1 16657 2 158 3 9 4 8 5 1 6 4 7 1 24 1

I’m impressed by this – I expected to see more databases with multiple log files. The one with 24 is tempdb – someone setting the number of log files to equal the number of processor cores I’d guess.

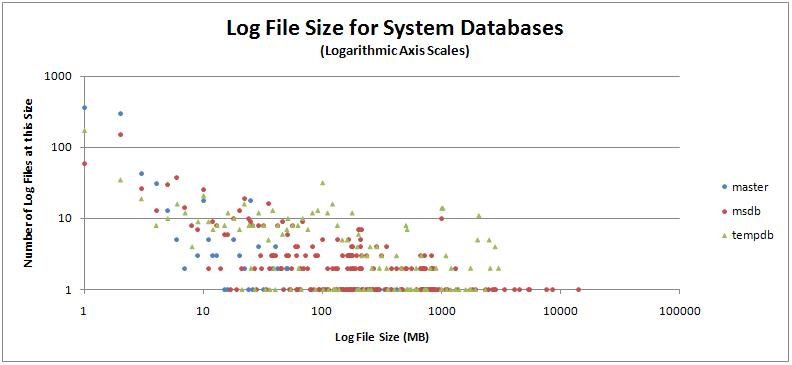

System Databases: Log File Size

Each plot on the graph below shows the number of log files of that size across all the instances, color-coded for master, msdb and tempdb.

89% of master database log files are less than 10MB (a number I arbitrarily chose as a comparison point).

Only 40% of msdb log files are less than 10MB. This would suggest that there are a high proportion of msdb databases not using the SIMPLE recovery model. However, only 5% of them aren’t using SIMPLE.

Only 33% of tempdb log files are less than 10MB. I would expect this number to be low.

Looking at the distribution on the graph, master log files (blue) are generally smaller, tempdb log files (green) are generally larger, with msdb (red) in the middle. Again, this is what I’d expect to see.

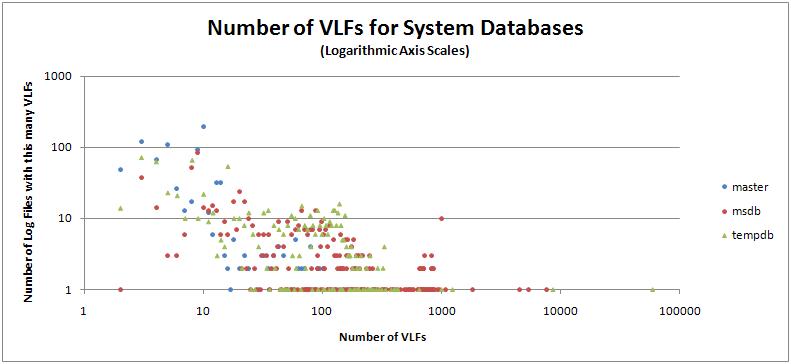

System Databases: Number of VLFs

Each plot on the graph below shows the number of log files with that many VLFs across all the instances, color-coded for master, msdb and tempdb. (If you’re unfamiliar with the term ‘VLFs’, see the TechNet Magazine article I link to at the bottom).

98.6% of all master log files have less than 100 VLFs (our arbitrary ‘you may have too many VLFs’ tipping point), with the maximum being 331.

Only 65% of all msdb log files have less than 100 VLFs. From the other 35%, only 5% of them aren’t using the SIMPLE recovery model. The maximum number of VLFs is 7646.

Surprisingly, 75% of tempdb log files have less than 100 VLFs. I expected this number to be higher in general, because of tempdb autogrowth. The maximum number of VLFs is 59708.

Looking at the graph, master (blue) is as I expected, but msdb (red) and tempdb (green) are the opposite of what I expected – with more msdb log files having more VLFs.

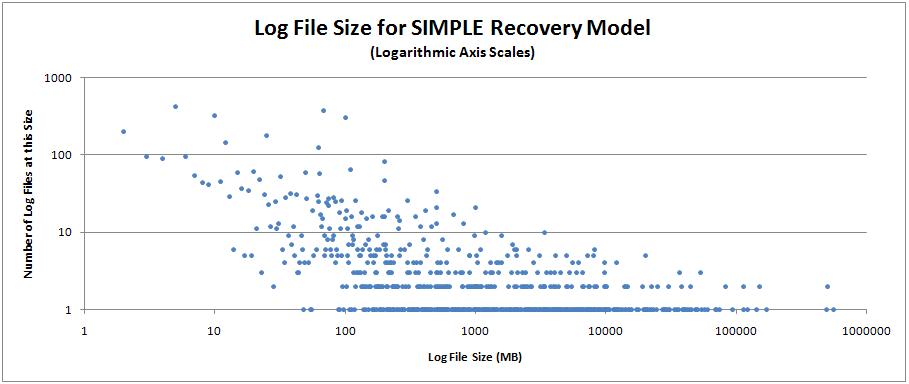

User Databases: Log File Size

Out of all 13451 user databases in the sample:

- 6797 use the SIMPLE recovery model (50.5%)

- 177 use the BULK_LOGGED recovery model (1.3%)

- 6477 use the FULL recovery model (48.2%)

This in itself is surprising – I expected to see a higher proportion of FULL recovery model databases.

Given that the log file behavior is the same for FULL and BULK_LOGGED recovery models, and as so few databases use the BULK_LOGGED recovery model, I lumped the FULL and BULK_LOGGED databases together for analysis.

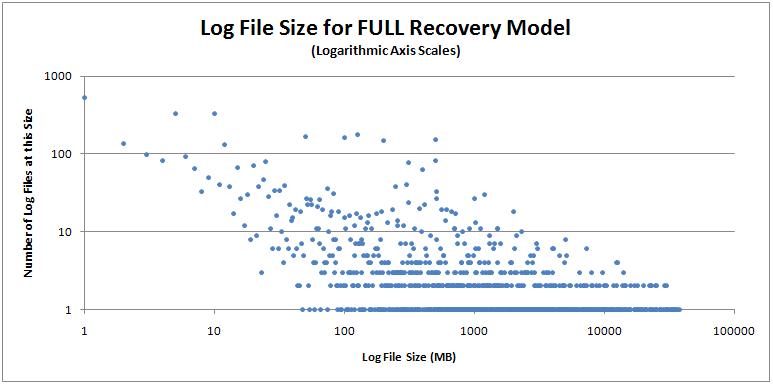

Each plot on the graphs below shows the number of log files of a certain size across all the instances.

The graphs above have different sizes because more databases in the SIMPLE recovery model are larger sized. The overall distribution looks very similar though.

90.7% of user databases using the SIMPLE recovery model have a log file size less than 1GB, compared with 83.0% for non-SIMPLE user databases.

98.2% of SIMPLE user databases have a log file size less than 10GB, compared with 96.8% for non-SIMPLE user databases.

The maximum size of a log file for a SIMPLE user database is 1020GB, dropping to 326GB for a non-SIMPLE user database.

The non-SIMPLE user databases seem to be slightly better managed. My guess would be this represents databases that have been in the FULL or BULK_LOGGED recovery models, have grown out of control and been switched to SIMPLE, but not cleaned up.

User Databases: Number of VLFs

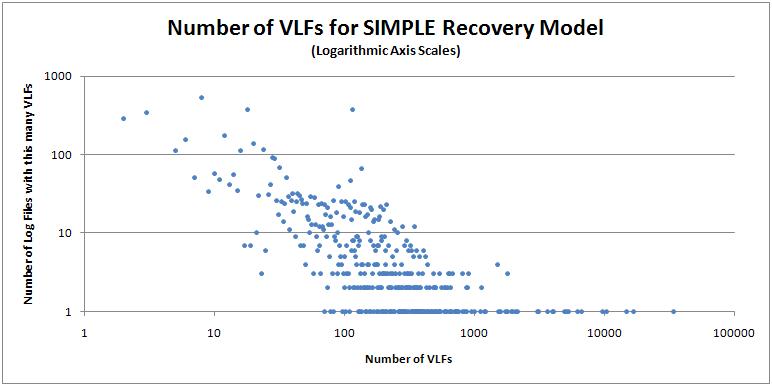

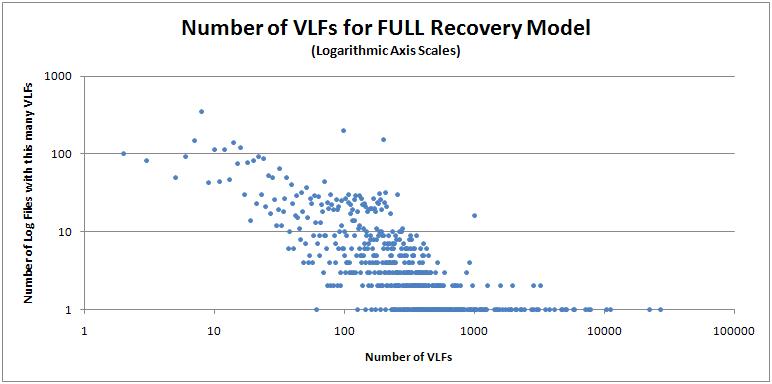

Each plot on the graphs below shows the number of log files with a certain number of VLFs across all the instances.

As with the log file sizes, the distributions of these two graphs look very similar.

76.9% of user databases using the SIMPLE recovery model have less than 100 VLFs, dropping to 67.8% for those not using SIMPLE.

98.4% of user databases using the SIMPLE recovery model have less than 500 VLFs (definitely a point at which maintenance is required), dropping to 97.0% for those not using SIMPLE.

The maximum number of VLFs for the SIMPLE user databases is 34057, and 27093 for those not using SIMPLE.

Given that more user databases using SIMPLE are larger, but have slightly fewer VLFs, I’d guess this indicates that autogrowth of thse databases was slightly higher.

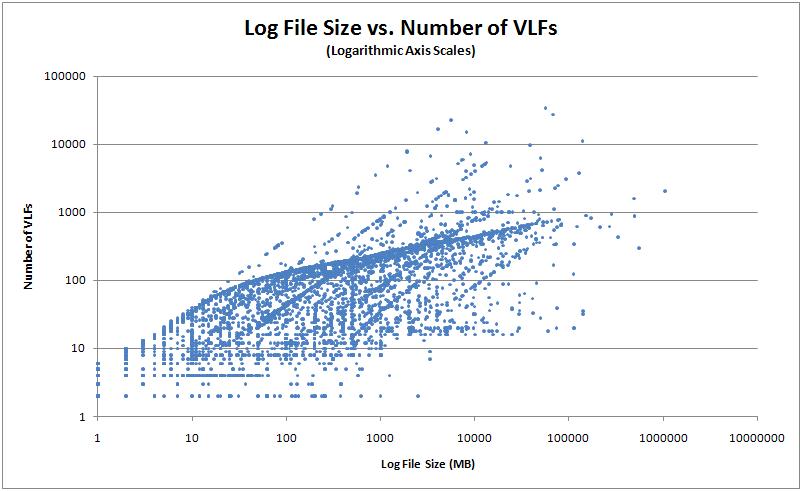

User Databases: Log File Size vs. Number of VLFs

At first glance, the graph below looks confusing. It shows the number of VLFs each log file has compared to its size in MB. If you look at the first few log file sizes, you’ll see that there are multiple points plotted on the Y-axis. This is because there are databases that have the same size log files, but the log files have different numbers of VLFs.

There’s definitely a loose trend-line in the graph, with a knee at around 50MB log file size.

Summary

I’m impressed. 75% of all databases in the sample have less than 100 VLFs, and 97.4% have less than 500 VLFs. I expected the results to be worse, but they seem show that the majority of you are managing your logs well. Now, these results are a bit skewed though because they’re coming from people who read my blog, where I bang on and on about good maintenance and management.

I was very surprised by the 50/50 split of SIMPLE/non-SIMPLE recovery model for user databases. My guess is that more of these *should* be in FULL to comply with business SLAs around RPO/data-loss, but for some reason aren’t, or aren’t any longer because of out-of-control growth issues.

Thanks to everyone who sent me results!

Here are some links for further reading:

- Importance of proper transaction log size management

- TechNet Magazine: Understanding Logging and Recovery in SQL Server

- Transaction Log VLFs – too many or too few?

- 8 Steps to better Transaction Log throughput

- New script: is that database REALLY in the FULL recovery mode?

Enjoy!

9 thoughts on “Log file configuration metrics for 17000 databases”

I think the system database graphs would have been a lot more interesting with a good portion of distribution databases thrown in there.

Also, I suspect the removal of the no_log option for backup log has contributed to the number of databases in simple recovery model.

Hey Robert – interesting points. Unfortunately I couldn’t grab database names. Most of the systems were 2000 or 2005 (don’t have exact numbers) so don’t think NO_LOG being removed in 2008 is a big factor here.

Could it be that so many user databases use SIMPLE recovery because the data came from non-production (e.g. development or QA) environments? People might have started to run your script on production, then on second thought ran it on development instead. I don’t know how common this is, but unless there’s a reason to do otherwise, I keep all development databases in SIMPLE recovery.

@Noel – maybe, but many people said they were giving me production data.

Good point. I stopped using no_log a long time ago, so I sometimes forget that it is still there in 2005.

Great analysis! It would be interesting to see growth settings and patterns survey as well. As usual great job!

In the it’s-nice-to-know-what-our-peers-are-doing context this is interesting. Nothing like a nicely made graph to depict the strong corelation of VLFs to Log Size too.

Thanks for all the hard work Paul.

AJ – can you send me an email please? The discussion we were having isn’t relevant to comments on this post so I’ve removed it. Tried to reply to your comment email but it was a fake one. Thanks

I find your first graph, "Number Of Databases Per Instance", to be quite interesting. While the database industry has been working towards more scalable systems and DB server consolidations for several years, I wonder how many folks are working in earnest to do this within their environments. With all of our vendor supplied DB’s, custom DB’s, and SharePoint, I am supporting 154 databases and growing on one server. (I must admit I still have some single DB server but either the vendor or network security (DMZ) require it). Anyway, I am digressing. During the different stages of sizing, hardware acquisition, server/db configuration I did lots of research. One very common theme in server design (and it has been for ages) is a focus on IO type isolation (logs, DB’s, indexes, tempdb, etc.) That works very well with single purpose servers. However, if you have 300 DB’s spanning 300+ LDF’s, does a dedicated RAID10 array with 300 active log files really get a chance to benefit from all synchronous I/O and reduced disk head travel latency, etc.? I wouldn’t think so…seems pretty chaotic to me. I ended up pooling together all the disks I could afford into one RAID6+1 hot spare pool and bought the largest battery backed write cache I could get. Currently 32 15K SAS drives and 1GB write cache are serviceing all NON SYSTEM DB mdf, ndf, and ldf’s.

Would love to see a future article on optimial IO design for heavy-use, multi-purpose, directly attached storage (no san) based systems.