Over the last month we've been teaching in Europe and I haven't had much time to focus on benchmarking, but I've finally finished the first set of tests and analyzed the results.

You can see my benchmarking hardware setup here, with the addition of the Fusion-io ioDrive Duo 640GB drives that Fusion-io were nice enough to lend me.

In this set of tests I wanted to check three things for a sequential write-only workload (i.e. no reads or updates):

- Best file layout with the hardware I have available

- Best way to format the SSDs

- Whether SSDs give a significant performance gain over SCSI storage

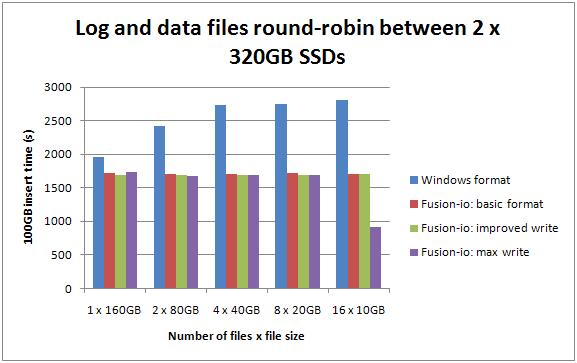

The Fusion-io SSDs can be formatted four ways:

- Regular Windows format

- Fusion-io's format

- Fusion-io's improved write performance format

- Fusion-io's maximum write performance format

I'm using one of the 640GB SSDs in my server, which presents itself as two 320GB drives that I can use individually or tie together in a RAID array. The actual capacity varies depending on how the drives are formatted:

- With the Windows and normal Fusion-io format, each of the 320GB drives has 300GB capacity

- With the improved write performance format, each of the 320GB drives has only 210GB capacity, 70% of normal

- With the maximum write performance format, each of the 320GB drives has only 151GB capacity, 50% of normal
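The capacity trade-off is easy to check with a little arithmetic. Here is a minimal Python sketch using the per-drive capacities quoted above; the dictionary and function names are made up for illustration and are not part of any Fusion-io tooling.

```python
# Usable capacity per 320GB drive for each format, as quoted in the post (GB).
USABLE_GB = {
    "Windows": 300,
    "Fusion-io normal": 300,
    "improved write": 210,
    "maximum write": 151,
}


def fraction_of_normal(fmt: str) -> float:
    """Return usable capacity as a fraction of the normal-format capacity."""
    return USABLE_GB[fmt] / USABLE_GB["Fusion-io normal"]


for fmt, gb in USABLE_GB.items():
    print(f"{fmt:>16}: {gb}GB per drive, {fraction_of_normal(fmt):.0%} of normal")
```

The maximum write figure works out to slightly over half (151 of 300), which the post rounds to 50%.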

In my tests, I want to determine whether the loss in capacity is worth it in terms of a performance gain. The SSD format is performed using Fusion-io's ioManager tool, with their latest publicly-released driver (1.2.7.1).

My tests involve 16 connections to the server, running server-side code to insert 6.25GB each into a table with a clustered index, one row per page. The database is 160GB with a variety of file layouts:

- 1 x 160GB file

- 2 x 80GB files

- 4 x 40GB files

- 8 x 20GB files

- 16 x 10GB files

These drop down to 128/64/32/etc when using a single 320GB drive with the maximum write performance format. The log file is pre-created at 8GB and does not need to grow during the test.
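For reference, the file sizes and the total workload size follow directly from the numbers above. This is only an illustrative sketch: it assumes the database is split evenly across the data files, and the names are hypothetical rather than taken from my test harness.

```python
FILE_COUNTS = (1, 2, 4, 8, 16)


def per_file_size_gb(database_gb: float, file_count: int) -> float:
    """Size of each data file when the database is split evenly."""
    return database_gb / file_count


# 160GB is the normal database size; 128GB is the reduced size used on a
# single drive with the maximum write performance format.
for database_gb in (160, 128):
    layout = ", ".join(
        f"{n} x {per_file_size_gb(database_gb, n):g}GB" for n in FILE_COUNTS
    )
    print(f"{database_gb}GB database: {layout}")

# Workload size: 16 connections inserting 6.25GB each.
CONNECTIONS = 16
GB_PER_CONNECTION = 6.25
total_insert_gb = CONNECTIONS * GB_PER_CONNECTION
print(f"Total inserted: {total_insert_gb:g}GB")
```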

I tested each of the five data file layouts on the following configurations (all using 1MB partition offsets, 64k NTFS allocation unit size, 128k RAID stripe size – where applicable):

- Data on RAID-10 SCSI (8 x 300GB 15k), log on RAID-10 SATA (8 x 1TB 7.2k)

- Data round-robin between two RAID-10 SCSI (each with 4 x 300GB 15k and one server NIC), log on RAID-10 SATA (8 x 1TB 7.2k)

- Data on two 320GB SSDs in RAID-0 (each of the 4 ways of formatting), log on RAID-10 SATA (8 x 1TB 7.2k)

- Log and data on two 320GB SSDs in RAID-0 (each of the 4 ways of formatting)

- Log and data on single 320GB SSD (each of the 4 ways of formatting)

- Log and data on separate 320GB SSDs (each of the 4 ways of formatting)

- Log and data round-robin between two 320GB SSDs (each of the 4 ways of formatting)

That's a total of 22 configurations, with 5 data file layouts in each configuration – making 110 separate tests. I ran each test 5 times and then took an average of the results – so altogether I ran 550 test runs, for a cumulative test time of just under 1.1 million seconds (12.7 days) over the last 4 weeks.
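The size of the test matrix can be reproduced by enumerating the combinations. The sketch below is not my actual test harness, just a hedged illustration in Python; the configuration labels are shortened versions of the list above, and the variable names are invented for the example.

```python
from itertools import product

# Storage configurations as listed in the post (labels shortened).
SCSI_CONFIGS = [
    "data on RAID-10 SCSI, log on RAID-10 SATA",
    "data round-robin between two RAID-10 SCSI, log on RAID-10 SATA",
]
SSD_CONFIGS = [
    "data on two SSDs in RAID-0, log on RAID-10 SATA",
    "log and data on two SSDs in RAID-0",
    "log and data on a single SSD",
    "log and data on separate SSDs",
    "log and data round-robin between two SSDs",
]
SSD_FORMATS = ["Windows", "Fusion-io normal", "improved write", "maximum write"]
FILE_COUNTS = [1, 2, 4, 8, 16]
RUNS_PER_TEST = 5

# Each SSD configuration is repeated once per format; the SCSI ones are not.
configs = SCSI_CONFIGS + [
    f"{config} ({fmt} format)" for config, fmt in product(SSD_CONFIGS, SSD_FORMATS)
]
tests = list(product(configs, FILE_COUNTS))
total_runs = len(tests) * RUNS_PER_TEST

# Convert the 12.7 days of cumulative test time into seconds.
total_seconds = 12.7 * 24 * 60 * 60

print(len(configs), len(tests), total_runs)
print(f"{total_seconds:,.0f} seconds in total")
```

The first line printed is `22 110 550`: 2 SCSI configurations plus 5 SSD configurations times 4 formats, each with 5 file layouts, each run 5 times.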

And yes, I do have a test harness that automates a lot of this so I only had to reconfigure things 22 times manually. And no, for these tests I didn't have wait stats being captured. I've upgraded the test harness and now it captures wait stats for each test – that'll come in my next post.

On to the results… bear in mind that these results are testing a 100GB sequential insert-only workload and are not using the full size of the disks involved!!!

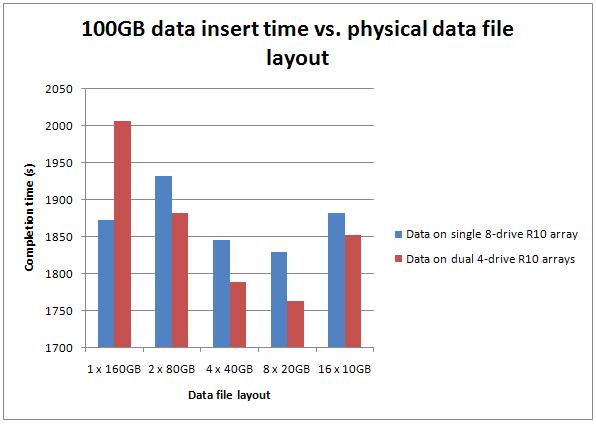

Data on SCSI RAID-10, log on SATA RAID-10

I already blogged about these tests here last week. They prove that for this particular workload, multiple data files on the same RAID array do give a performance boost – albeit only 6%.

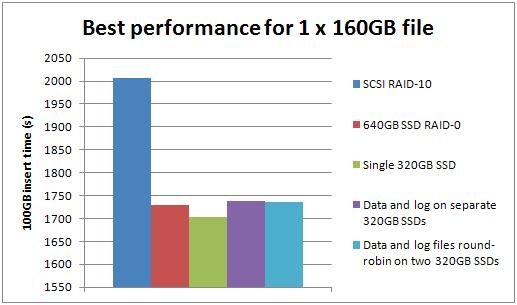

The best performance I could get from the SCSI/SATA configurations was completing the test in 1755 seconds.

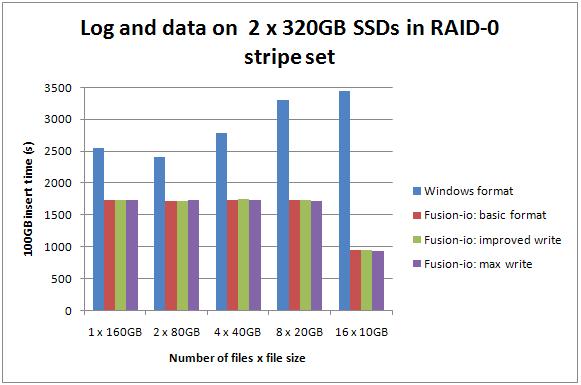

Data and log on 640GB RAID-0 SSDs (Data on 640GB RAID-0 SSDs, log on SATA RAID-10)

The performance was almost identical whether the log file was on SATA or on the SSD, so I'm only including one graph, in the interest of making this post a little shorter.

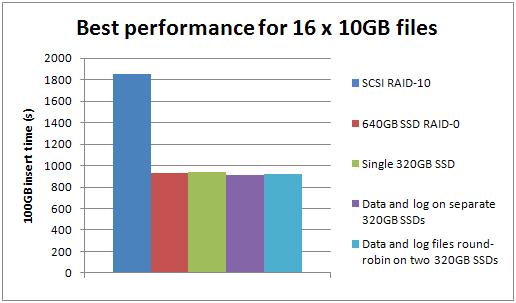

These results clearly show that the SSDs have to be formatted correctly to get any performance out of them. The SSDs performed the same for all data file configurations until the number of data files hit 16, at which point performance almost doubled. I tested 32 and 64 files and didn't get any further increase. My guess here is that I had enough files that when checkpoints or lazywrites occurred, the behavior was as if I was doing a random-write workload rather than a sequential-write workload.

The best performance I could get here was with 16 files and the maximum-write format, when the test completed in 934 seconds, 1.88x faster than the best SCSI time. This is only 13 seconds faster than the normal format, which gives 100% more capacity.

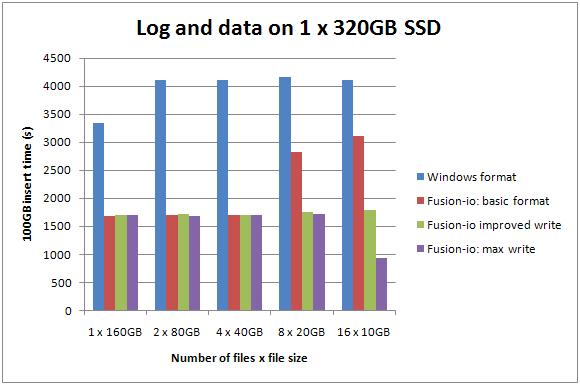

Data and log on single 320GB SSD

Here the performance truly sucked when the SSD wasn't formatted correctly. Once it was, the performance was roughly the same for 1, 2, or 4 files but degraded by almost 50% with normal formatting for 8 or 16 files. With improved-write and maximum-write formatting, the performance was the same as for the 640GB RAID-0 SSD array, but the sharp performance increase with 16 files only happened with the maximum-write formatting.

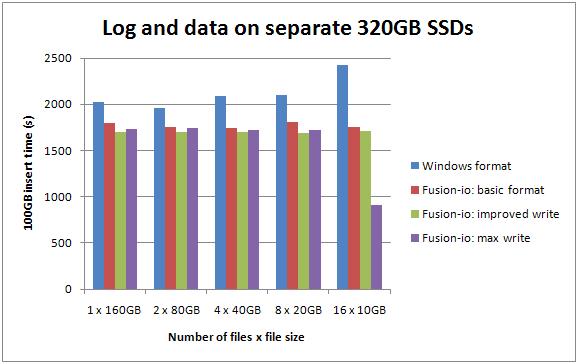

Data and log on separate 320GB SSDs

No major difference here – same characteristics as before when formatted correctly, and the best performance coming from maximum-write formatting and 16 data files.

This configuration gave the best overall performance – 909 seconds – 1.93x the best performance from the SCSI storage.
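The speedup factors quoted so far are simply the best SCSI time divided by the SSD time. A minimal Python sketch of that calculation follows; the throughput figure is an extra that relies on my assumption that the 100GB workload means 100 x 1024 MB, and the function names are illustrative only.

```python
# Best completion times quoted in the post, in seconds.
BEST_SCSI_SECONDS = 1755
BEST_SSD_SECONDS = {
    "data and log on RAID-0 SSDs": 934,
    "data and log on separate SSDs": 909,
}

# Assumption (not stated in the post): 100GB means 100 * 1024 MB.
WORKLOAD_MB = 100 * 1024


def speedup(baseline_seconds: float, candidate_seconds: float) -> float:
    """How many times faster the candidate finished than the baseline."""
    return baseline_seconds / candidate_seconds


def throughput_mb_per_sec(seconds: float) -> float:
    """Average insert throughput over the whole test run."""
    return WORKLOAD_MB / seconds


for name, seconds in BEST_SSD_SECONDS.items():
    print(
        f"{name}: {speedup(BEST_SCSI_SECONDS, seconds):.2f}x faster than SCSI, "
        f"about {throughput_mb_per_sec(seconds):.0f} MB/sec"
    )
```

This gives 1.88x for the RAID-0 SSD array and 1.93x for the separate SSDs, matching the factors above.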

Data and log round-robin between separate 320GB SSDs

No major differences from the previous configuration.

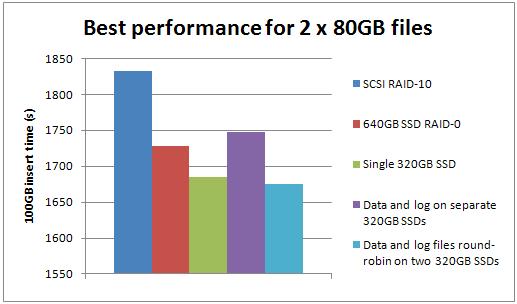

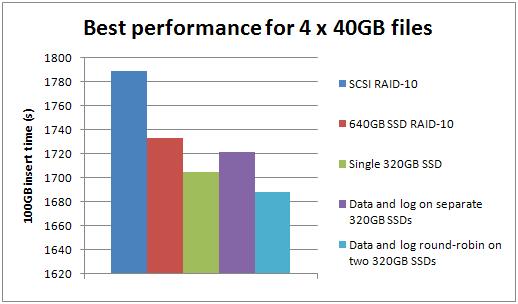

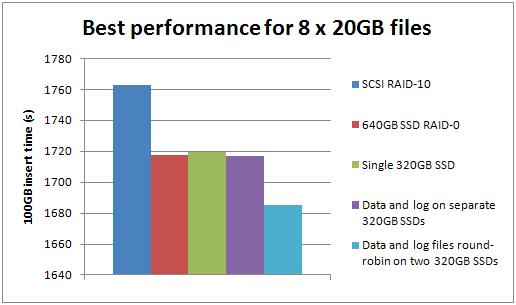

Best-case performance for each number of data files

Clearly the SSDs outperform the SCSI storage for these tests, but not by very much. The improvement factor varied by the number of data files:

- 1: SSD was 1.11x faster than SCSI

- 2: SSD was 1.09x faster than SCSI

- 4: SSD was 1.06x faster than SCSI

- 8: SSD was 1.04x faster than SCSI

- 16: SSD was 2.03x faster than SCSI

The configuration of 16 data files on one SSD and the log on the other SSD, with maximum-write format for both, was the best overall performer, beating the best SCSI configuration (8 data files) by a factor of 1.93.

Summary

Reminder: this test was 100GB of sequential inserts with no reads or updates (i.e. no random IO). It is very important to consider the limited scenario being tested and to draw appropriate conclusions.

Several things are clear from these tests:

- The Fusion-io SSDs do not perform well unless they are formatted with Fusion-io's tool, which takes seconds and is very easy. I don't see this as a downside at all, and it makes sense to me.

- For sequential write-only IO workloads, the improved-write and maximum-write SSD formats do not produce a performance gain and so the loss in storage capacity (30% and 50% respectively) is not worth it.

- For sequential write-only IO workloads, the SSDs do not provide a substantial gain over SCSI storage (which is not overloaded).

All three of these results were things I'd heard anecdotally and experienced in ad-hoc tests, but now I have the empirical evidence to be able to state them publicly (and now so do you!).

These tests back up the assertion I've heard over and over that sequential write-only IO workloads are not the best use-case for SSDs.

One very interesting other result came from these tests – moving to 16 data files changed the characteristics of the test to a more random write-only IO workload, and so the maximum-write format produced a massive performance boost – almost twice the performance of the SCSI storage!

The next set of tests is running right now – 64GB of inserts into a clustered index with a GUID key – random reads and writes in a big way. Early results show the SSDs are *hammering* the performance of the SCSI storage – more in a week or so!

Hope you find these results useful and thanks for reading!

9 thoughts on “Benchmarking: Introducing SSDs (Part 2: sequential inserts)”

"64GB of inserts into a clustered index with a GUID key"

THAT’s the use case that’ll help me most – random reads/writes; we have hundreds of very active connections. I’m waiting anxiously for these results. Thanks, Paul, for your efforts on this.

Also, Paul… the comment on the March post about write saturation reminded me of something. During the FusionIO presentation at SQL Saturday 22, the guys mentioned that, for maximum performance and reliability, the chips are double-allocated (640GB of chips yields 320GB of space for the 320GB unit). They may have meant the "maximum write performance" mode which only gave half the space, but I wonder if on a 320GB unit you’ll see high rates with only a 100GB load because 2/3 of it is free to clean up empty space at its leisure. Maybe a test of 100GB of writes/deletes on a drive that’s already got most of its space used would show its performance when space gets tight.

Nice job on these. I am glad you can run them in a fairly automated fashion, remotely while you are on the road.

Hi Paul.

I think I missed something at the end with your conclusions for comparing SSD to SCSI in the last set of graphs. You state, "Clearly the SSDs outperform the SCSI storage for these tests." But the third point under your summary states that SSDs do *not* provide substantial gain over SCSI for sequential write-only workloads. Were the tests where the SSDs outperformed the SCSI not write-only? The graph states you were inserting 100GB, which seems write-only to me. What am I missing?

Thanks.

Jerry – the SSDs do outperform the SCSI, but only by a small factor – i.e. not substantial. I’ll make it clearer.

What is the block size that the Fusion I/O tool formats the volumes to? Does having the block size be 8k matter with SSDs?

@Todd – 512bytes. Block size can make a difference but I’m not trying that yet.

Looking forward to the up-coming random tests Paul!

Any chance you could post your FIO-PCI-CHECK.exe output so we can see what chipset, PCI slot speeds and PCI bandwidth is available on your server during the tests?

I’ve seen all sorts of config issues with customers who use FusionIO, which can affect FusionIO performance significantly, so it’d be interesting to see how your PCI environment is configured & whether the card/s have access to full bandwidth..

C:\Users\Administrator>fio-pci-check.exe -v

This utility directly probes the pci configuration ports.

There are possible system stability risks in doing this.

Do you wish to continue [y/n]? y

Root Bridge PCIe 5000 MB/sec needed max

Intel(R) 5000X Chipset Memory Controller Hub – 25C0

Current control settings: 0x080f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0000

Correctable Error(s): None

Non-Fatal Error(s): None

Fatal Error(s): None

Unsupported Type(s): None

link_capabilities: 0x001bf441

Maximum link speed: 2.5 Gb/s per lane

Maximum link width: 4 lanes

Current link_status: 0x00003041

Link speed: 2.5 Gb/s per lane

Link width is 4 lanes

Bridge 00:06.00 (0c-10)

Intel(R) 5000 Series Chipset PCI Express x8 Port 6-7 – 25F9

Needed 2000 MB/sec Avail 2000 MB/sec

Current control settings: 0x002f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0000

Correctable Error(s): None

Non-Fatal Error(s): None

Fatal Error(s): None

Unsupported Type(s): None

link_capabilities: 0x061bf481

Maximum link speed: 2.5 Gb/s per lane

Maximum link width: 8 lanes

Current link_status: 0x00003081

Link speed: 2.5 Gb/s per lane

Link width is 8 lanes

Bridge 0c:00.00 (0d-10)

PCI Express standard Upstream Switch Port

Needed 2000 MB/sec Avail 2000 MB/sec

Current control settings: 0x002f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0009

* Correctable Error(s): Detected

Non-Fatal Error(s): None

Fatal Error(s): None

* Unsupported Type(s): Detected

Clearing Errors

link_capabilities: 0x00015c82

Maximum link speed: 5.0 Gb/s per lane

Maximum link width: 8 lanes

Slot Power limit: 25.0W (25000mw)

Current link_status: 0x00000081

Link speed: 2.5 Gb/s per lane

Link width is 8 lanes

Bridge 0d:05.00 (0f-0f)

PCI Express standard Downstream Switch Port

Needed 1000 MB/sec Avail 1000 MB/sec

Current control settings: 0x002f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0009

* Correctable Error(s): Detected

Non-Fatal Error(s): None

Fatal Error(s): None

* Unsupported Type(s): Detected

Clearing Errors

link_capabilities: 0x05395c42

Maximum link speed: 5.0 Gb/s per lane

Maximum link width: 4 lanes

Current link_status: 0x00002041

Link speed: 2.5 Gb/s per lane

Link width is 4 lanes

ioDrive 0f:00.0 Firmware 36867

Fusion ioDrive

Current control settings: 0x213f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0000

Correctable Error(s): None

Non-Fatal Error(s): None

Fatal Error(s): None

Unsupported Type(s): None

link_capabilities: 0x0003f441

Maximum link speed: 2.5 Gb/s per lane

Maximum link width: 4 lanes

Slot Power limit: 25.0W (25000mw)

Current link_status: 0x00001041

Link speed: 2.5 Gb/s per lane

Link width is 4 lanes

Current link_control: 0x00000000

Not modifying link enabled state

Not forcing retrain of link

Bridge 0d:06.00 (10-10)

PCI Express standard Downstream Switch Port

Needed 1000 MB/sec Avail 1000 MB/sec

Current control settings: 0x002f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0009

* Correctable Error(s): Detected

Non-Fatal Error(s): None

Fatal Error(s): None

* Unsupported Type(s): Detected

Clearing Errors

link_capabilities: 0x06395c42

Maximum link speed: 5.0 Gb/s per lane

Maximum link width: 4 lanes

Current link_status: 0x00002041

Link speed: 2.5 Gb/s per lane

Link width is 4 lanes

ioDrive 10:00.0 Firmware 36867

Fusion ioDrive

Current control settings: 0x213f

Correctable Error Reporting: enabled

Non-Fatal Error Reporting: enabled

Fatal Error Reporting: enabled

Unsupported Request Reporting: enabled

Current status: 0x0000

Correctable Error(s): None

Non-Fatal Error(s): None

Fatal Error(s): None

Unsupported Type(s): None

link_capabilities: 0x0003f441

Maximum link speed: 2.5 Gb/s per lane

Maximum link width: 4 lanes

Slot Power limit: 25.0W (25000mw)

Current link_status: 0x00001041

Link speed: 2.5 Gb/s per lane

Link width is 4 lanes

Current link_control: 0x00000000

Not modifying link enabled state

Not forcing retrain of link

C:\Users\Administrator>