I recently posted an article at sqlperformance.com about the importance of selecting the proper size Azure VM because of limits placed on throughput based on the VM size. I was sharing this article during my “Introduction to Azure Infrastructure as a Service” session during SQLintersection when Milan Ristovic asked how best to calculate throughput. I told Milan and the audience how I do it and that I would write a quick blog post about it. Thanks for the inspiration to write another blog Milan!

Most data professionals are familiar with collecting latency information using sys.dm_io_virtual_file_stats or by using Paul Randal’s script on his “Capturing IO latencies for a period of time” that uses sys.dm_io_virtual_file_stats and captures a snapshot, uses a wait for delay, captures another snapshot and computes the differences. Capturing latency information lets you know the time delay between a request for data and the return of the data.

I’ve been using Paul’s script from his blog post for over 5 years to determine disk latency. A few years ago I made a few changes to also calculate disk throughput. This is important to know for migrations to different storage arrays and especially when moving to the cloud. If you simply benchmark your existing storage, that is telling you what your storage subsystem is capable of. That is great information to have, however, you also need to know what your workload is currently using. Just because your on-premises storage supports 16,000 IOPS and 1000MB of throughput, you probably aren’t consuming that many resources. Your workload may only be consuming 70MB of throughput during peak times. You need a baseline to know what your actual needs are.

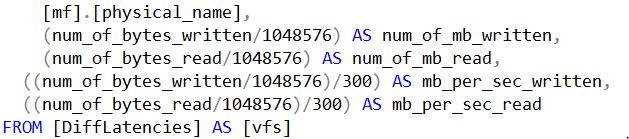

When I’m capturing latency and throughput data, I like to capture small increments. Paul’s script defaults to 30 minutes, I like 5 minute segments to have a more granular view. What I’ve added in Paul’s script to capture throughput is to capture the num_of_bytes_written and divide by 1,048,576 (1024 bytes * 1024) to calculate the MB value. I do the same for num_of_bytes_read. I also do the same again and divide by the number of seconds I am waiting between the two snapshots. In this case since I am waiting 5 minutes, I’ll use 300, for example: (num_of_bytes_written/1,048,576)/300 AS mb_per_sec_written.

I add my changes at the end of Paul’s script before the final FROM statement, just after [mf].[physical_name]

With these changes I can easily see how many MB the workload is reading and writing to disk during the capture time, as well as the MB/s to tell me the overall throughput.

I hope this helps you to baseline your existing SQL Server workload. For my customers, I’ve further modified this script to write the results to a table with a date and time stamp so I can better analyze the workload.