In my years working with SQL Server, I’ve found there are a few topics that are often ignored. Ignored because people fear them; they think they’re harder than they actually are OR they think they’re not important. Sometimes they even think – I don’t need to know that because SQL Server “does it for me.” I’ve heard this about indexes. I’ve heard this about statistics.

So, let’s talk about why statistics are so important and why a minimal amount of knowledge of their importance can make a HUGE difference to your query’s performance.

There are SOME aspects to statistics that ARE handled automatically:

- SQL Server automatically creates statistics for indexes

- SQL Server automatically creates statistics for columns – when SQL Server NEEDS more information to optimize your query

- IMPORTANT: This only occurs when the database option: auto_create_statistics is ON; this option is on by default in ALL versions/editions of SQL Server. However, there are some products/guidance out there that recommends that you turn this off. I completely DISAGREE with this.

- SQL Server automatically updates the statistical information kept for indexes/columns for a statistic that’s been “invalidated” – when SQL Server NEEDS it to optimize your query

- IMPORTANT: This only occurs when the database option: auto_update_statistics is ON; this option is on by default in ALL versions/editions of SQL Server. However, there are some products/guidance out there that recommends that you turn this off. I mostly DISAGREE with this. If you want to read a bit more about this – check out Erin’s SQL101 post: SQLskills SQL101: Updating SQL Server Statistics Part I – Automatic Updates and why it’s generally better to update statistics manually (but through a more selective/automated process) here: SQLskills SQL101: Updating SQL Server Statistics Part II – Scheduled Updates

However, it’s important to know that while some aspects of statistics ARE handled automatically; they’re not perfect. And, SQL Server often needs some with certain data patterns and data sets – to really optimize effectively. To understand this, I want to talk a bit about accessing data and the process of optimization.

Accessing Data

When you submit a request to SQL Server to access data, you usually write a Transact-SQL statement – in the form of an actual SELECT or possibly execute a stored procedure (and, yes, there are other options). However, the main point is that you’re requesting a data set – not HOW that data set should be found/processed. So, how does SQL Server “get” to the data?

Processing Data

Statistics help SQL Server with the gathering / processing of the data. They help by giving SQL Server insight into how much data will be accessed. If a small amount of data is going to be accessed then the processing might be easier (and require a different process) than if the query were to process millions of rows.

Specifically, SQL Server uses a cost-based optimizer. There are other options for optimization but cost-based optimizers tend to be the most commonly used today. Why? Cost-based optimizers use specific information about the data – to come up with more effective, more optimal, and more focused plans – specific to the data. Generally, this process is ideal. However, with

plans that are saved for subsequent executions (cached plans), it might not always be ideal. However, most other options for optimization can suffer from even worse problems.

IMPORTANT: I’m not talking about cached plans here… I’m talking about the initial process of optimization and how SQL Server determines how much data will be accessed. The subsequent

execution of a cached plan brings additional potential problems with it (known as parameter sniffing problems or parameter-sensitive plan problems). I talk a lot about that in other posts.

To explain cost-based optimization, let me cover other possible forms of optimization; this will help to explain a lot of the benefits of cost-based optimization.

Syntax-based Optimization

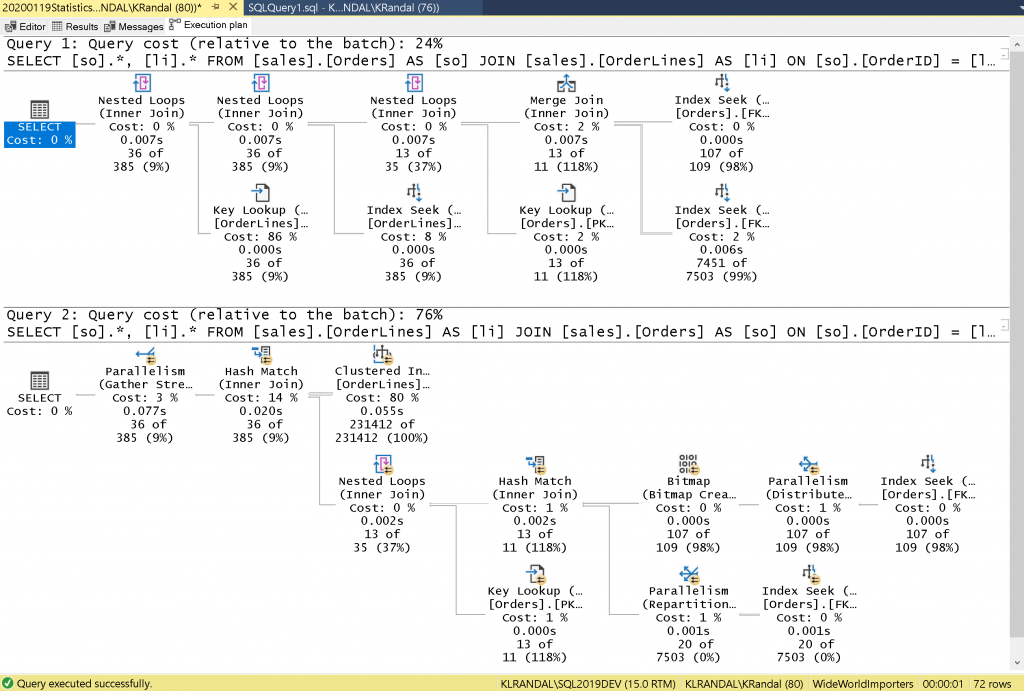

SQL Server could use just your syntax to process your query. Instead of taking time to determine the best possible processing order for your tables, an optimizer could just join your tables in the order they’re listed in your FROM clause. While it might be fast to START this process; the actual gathering of the data might not be ideal. In general, joining larger tables to smaller tables is a lot less optimal than joining smaller tables to larger. To give you a quick view of this, check out these two statements:

USE [WideWorldImporters];

GO

SET STATISTICS IO ON;

GO

SELECT [so]., [li].

FROM [sales].[Orders] AS [so]

JOIN [sales].[OrderLines] AS [li]

ON [so].[OrderID] = [li].[OrderID]

WHERE [so].[CustomerID] = 832 AND [so].[SalespersonPersonID] = 2

OPTION (FORCE ORDER);

GO

SELECT [so]., [li].

FROM [sales].[OrderLines] AS [li]

JOIN [sales].[Orders] AS [so]

ON [so].[OrderID] = [li].[OrderID]

WHERE [so].[CustomerID] = 832 AND [so].[SalespersonPersonID] = 2

OPTION (FORCE ORDER);

GO

Review the cost of the two plans – side-by-side, you can see that the second is more expensive.

Yes, this is an oversimplification of join optimization techniques but the point is that it’s unlikely that the order you’ve listed your tables in the FROM clause is the most optimal. The good news though – if you’re having performance problems, you CAN force syntax-based optimization techniques such joining in the order as your tables are listed (as in the example). And, there are quite a few other possible “hints” that you can use:

- QUERY hints (forcing level of parallelism [MAXDOP], forcing SQL Server to optimize for the FIRST row – not the overall set with FAST n, etc…)

- TABLE hints (forcing an index to be used [INDEX], forcing seeks, forcing an index join [index intersection])

- JOIN hints (LOOP / MERGE / HASH)

Having said that, these should ALWAYS be a last resort IMO. I would try quite a few other things before I’d FORCE anything in production code. By forcing one (or, more) of these hints, you don’t allow SQL Server to further optimize your queries as you add/update indexes, as you add/update statistics, or as you update/upgrade SQL Server. Unless, of course, you’ve documented these hints and you test to make sure they’re still the best option for your query after maintenance routines, routinely after data modifications, and after updates/upgrades to SQL Server. To be honest, I don’t see this done as often as it should be. Most hints are added as if they’re perfect and left until later problems come up – even when they’re possibly floundering inefficiently for days, weeks, months, etc…

(sorry, I had to… it was my photo post for Sunday – so perfect!)

It’s always important to see if there’s something ELSE that you can do to get better performance BEFORE FORCING a query to do ONE THING. Yes, forcing might be easy NOW but a small amount of time checking some of these other things might be well worth it in the long run.

For plan problems that are specifically from THIS query and THESE values (not from the execution of a cached plan), some things I might try are:

- Are the statistics accurate / up-to-date? Does updating the statistics fix the issue?

- Are the statistics based on a sampling? Does FULLSCAN fix the issue?

- Can you re-write the query and get a better plan?

- Are there any search arguments that aren’t well-formed? Columns should always be isolated to one side of an expression…

- WRITE: MonthlySalary > expression / 12

- NOT: MonthlySalary * 12 > expression

- Are there any transitivity issues you can help SQL Server with? This is an interesting one and becomes more problematic across more and more tables. However, adding a seemingly redundant condition can help the optimizer to do something it hadn’t considered with the original query (see, the added condition below):

- Are there any search arguments that aren’t well-formed? Columns should always be isolated to one side of an expression…

FROM table2 AS t1

JOIN table2 AS t2

ON t1.colX = t2.colX

WHERE t1.colX = 12 AND t2.colX = 12

- Sometimes just changing from a join to a subquery or a subquery to a join – fixes the issue (no, one isn’t specifically/always better than the other but sometimes the re-write can help the optimizer to see something it didn’t see with the original version of the query)

- Sometimes using derived tables (a join of specific tables in the FROM clause AS J1) can help the optimizer to more optimally join tables

- Are there any OR conditions? Can you re-write to UNION or UNION ALL to get the same result (this is the most important) AND get a better plan? Be careful though, these are semantically NOT the same query. You’ll need to understand the differences between all of these before you move to a different query.

- OR removes duplicate rows (based on the row ID)

- UNION removes duplicates based on JUST the SELECT LIST

- UNION ALL concatenates sets (which can be A LOT faster than having to remove duplicates) but, this might / might not be an issue:

- Sometimes there are NO duplicates (KNOW your data)

- Or, sometimes it might be OK to return duplicates (KNOW your user/audience/application)

This is a short list but might help you get better performance WITHOUT using hints/forcing. That means, as subsequent changes occur (to the data, to the indexes/statistics, to the SQL Server version) you’ll also be able to benefit from those changes!

But, the coolest part, is that these hints are there – IF we do have to use them! And, there ARE cases where the optimizer might not be able to come up with the most efficient plan; for those cases, you can force the process. So, yes, I love that they’re there. I just don’t want you to use them when you really haven’t determined what the actual root cause of the problem is…

So, yes, you can achieve syntax-based optimization…IFF you NEED it.

Rules-based Optimization (Heuristics)

I mentioned that cost-based optimization requires statistics. But what if you don’t have statistics?

Or, maybe a different way to look at it – why can’t SQL Server just use a “bunch of rules” to more-quickly optimize your queries without having to look at / analyze specific information about your data? Wouldn’t that be faster? SQL Server can do this – but, it’s often FAR from ideal. The best way to show you this is to NOT allow SQL Server to use statistics to process a query. I can show you a case where it actually works well and a MUCH MORE likely case where it doesn’t.

Heuristics are rules. Simple, STATIC / FIXED calculations. The fact that they’re simple is their benefit. No data to look at. Just a quick estimate based on the predicate. For example, less than and greater than have an internal rule of 30%. Simply put, when you run a query with a less than or greater than predicate and NO INFORMATION is known about the data in that column / predicate, SQL Server is going to use a RULE that 30% of the data will match. They’ll use this in their estimate / calculations and come up with a plan tied to this rule.

To get this to “work,” I have to first disable auto_create_statistics and check to see if there’s anything there (already) that could possibly help my query:

USE [WideWorldImporters];

GO

ALTER DATABASE [WideWorldImporters]

SET AUTO_CREATE_STATISTICS OFF;

GO

EXEC sp_helpindex ‘[sales].[Customers]’;

EXEC sp_helpstats ‘[sales].[Customers]’, ‘all’;

GO

Check the output from sp_helpindex and sp_helpstats. By default, WideWorldImporters does not have any indexes or statistics that lead with DeliveryPostalCode (or even have it as a member). If you’ve added one (or, SQL Server has auto-created one), you must drop it before running these next code samples.

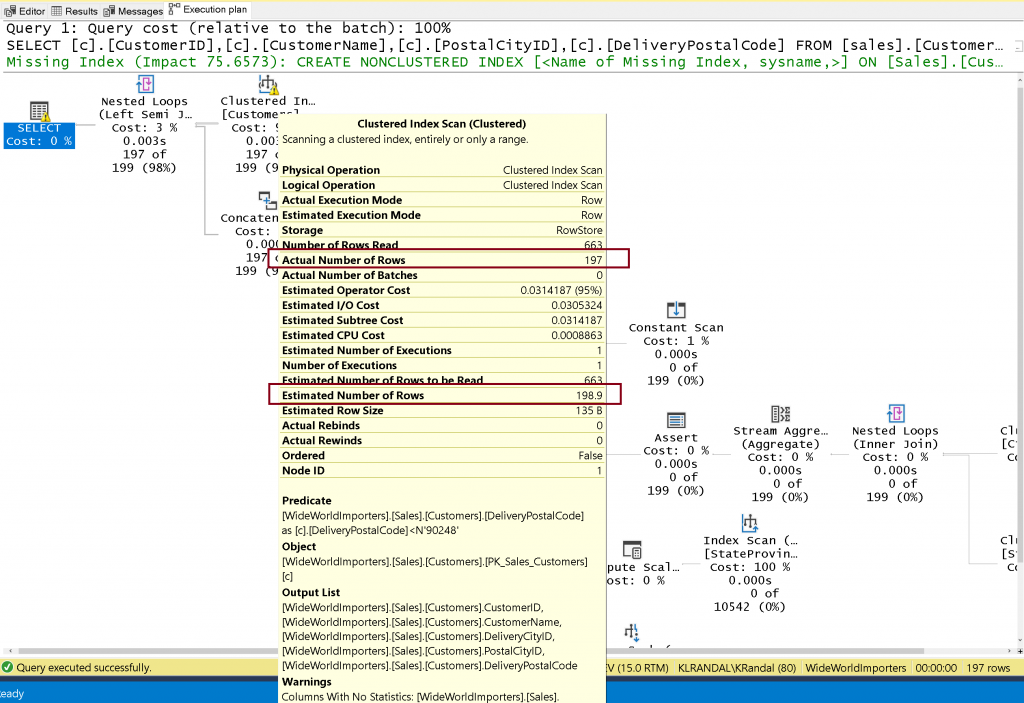

For this first query, we’ll supply a zip code of 90248. The query is using a less than predicate. Without any statistics and without the ability to auto-create any, what does SQL Server estimate for the number of rows?

Without engineering a “PERFECT” value, they’re going to be WRONG… most of the time!

For this first query, the estimate (30% of 663 = 198.9) works well, as the actual number of rows for the query is 197. One additional item to notice, there’s a warning symbol next to the table (Customers) as well as the left-most SELECT operator. These also tell you that something’s wrong. But, here – the estimate is “correct.”

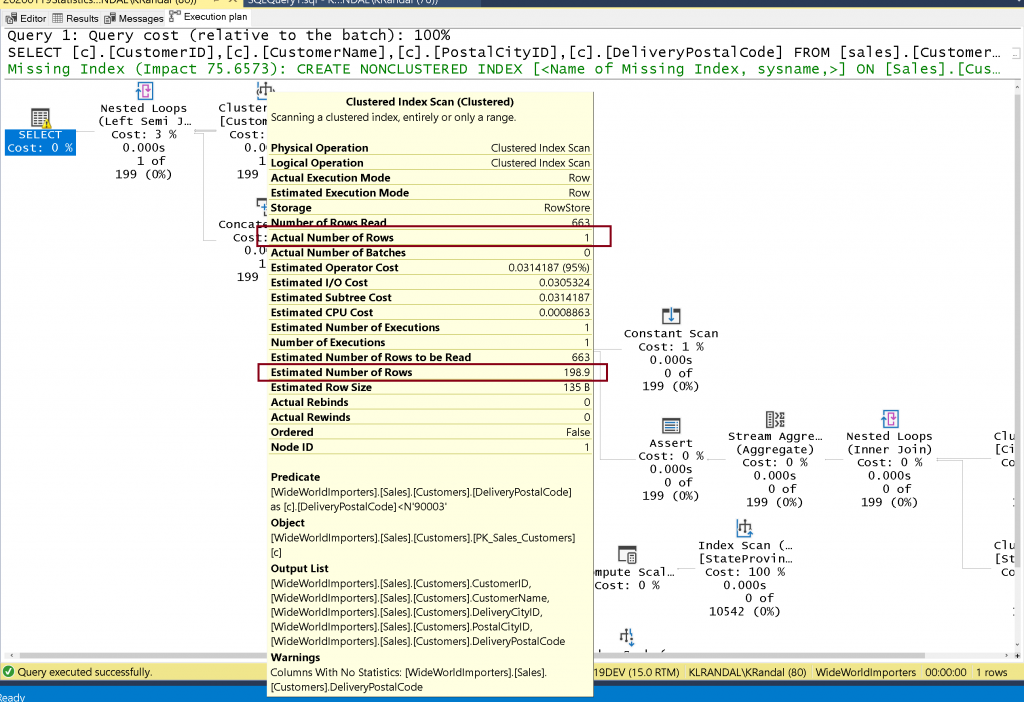

For this second query, we’ll supply a zip code of 90003. The query is the exact same except for the value of the zipcode. Without any statistics and without the ability to auto-create any, what does SQL Server estimate for the number of rows?

SELECT [c1].[CustomerID],

[c1].[CustomerName],

[c1].[PostalCityID],

[c1].[DeliveryPostalCode]

FROM [sales].[Customers] AS [c1]

WHERE [c1].[DeliveryPostalCode] < ‘90003’;

For the second query, the estimate is again 198.9 but the actual is only 1. Why? Because without statistics, the heuristic for less than (and, greater than) is 30%. Thirty perfect of 663 is 198.9. This will change as the data changes but the percent for this predicate is fixed at 30%.

And, if this query were more complicated – with joins and/or additional predicates – having incorrect information is ALREADY a problem for the subsequent steps of optimization. Yes, you might occasionally get lucky and use a value that loosely matches the heuristic but MORE LIKELY, you won’t. And, while the heuristic for less than and greater than is 30%, it’s different for BETWEEN and different again for equality. In fact, some even change based on the cardinality estimation model you’re using (for example, equality (=) has changed). Do I even care? Actually, not really! I don’t really ever WANT to use them.

So, yes, SQL Server can use rules-based optimization…but, only when it doesn’t have better information.

I don’t want heuristics; I want statistics!

Statistics are one of the FEW places in SQL Server where having MORE of them can be beneficial. No, I’m not saying to create one for every column of your table but there are some cases where I might pre-create statistics. OK, that’s a post for another day!

So, WHY statistics are important for cost-based optimization?

Cost-based Optimization

What does cost-based optimization actually do? Simply put, SQL Server QUICKLY gets a rough estimate of how much data will be processed. Then, using this information, it estimates the costs of different possible algorithms that could be used to access the data. Then, based on the “costs” of these algorithms, SQL Server chooses the one that it’s calculated to be the least expensive. Then, it compiles it and executes it.

This sounds great; but there are a lot of factors as to why this may or may not work well.

Most importantly, the base information used to perform the estimations (statistics) can be flawed.

- They could be out of date

- They could be less accurate due to sampling

- They could be less accurate because of the table size and the limitations of statistics “blob” that SQL Server creates

Some people ask me – couldn’t SQL Server have more detailed statistics (larger histograms, etc.). Yes, they could. But, then the process of reading/accessing these larger and larger statistics would start to get more and more expensive (and take more time, more cache, etc.). Which would – in turn – make the process of optimization more and more expensive. It’s a difficult problem; there are pros and cons / trade-offs everywhere. Really, it’s not quite as simple as just “larger histograms.”

Finally, the process of optimization itself can’t possibly EVERY combination for execution / processing, can it? No. That would make the sheer process of optimization so expensive that it would be prohibitive!

In Summary:

The best way to think about the process of optimization is that SQL Server MUST find a “good plan fast.” Otherwise, the process of optimization would become so complicated and take so long, it would defeat its own purpose!

So, why are statistics soooooo important:

- They feed into the whole process of cost-based optimization (and, you WANT cost-based optimization)

- They need to exist for optimization otherwise you’ll be forced to use heuristics (yuck)

- IMO – generally, I HIGHLY RECOMMEND auto_create_statistics to be ON, if you’ve turned it off

- They need to be reasonably accurate to be give better estimates (keeping them up to date is important but some statistics might need more “help” than others staying up to date)

- They might need even further help (with HOW they’re updated, WHEN they’re updated, or even with ADDITIONAL statistics)

STATISTICS ARE THE KEY TO BETTER OPTIMIZATION AND THEREFORE BETTER PERFORMANCE!

(but, they’re far from perfect!)

I hope that motivates you to consider learning a bit more about statistics. They’re actually easier than you might think AND (obviously) they’re really, really important!

Where can you get more information?

Whitepaper: There’s a VERY dated, but still has some great concepts, whitepaper on Statistics here: Statistics Used by the Query Optimizer in Microsoft SQL Server 2008

Online Training with Pluralsight: I don’t [yet] have a course just on statistics but – out of necessity – I talk about them in some of my other courses. This course: SQL Server: Optimizing Ad Hoc Statement Performance has the best discussion. Specifically, be sure to review Module 3: Estimates and Selectivity.

TIP: If you’re not a Pluralsight subscriber, shoot an email to Paul at SQLskills dot com and tell him I sent you there for a PS code. That will give you 30 days to check out our SQLskills content on Pluralsight!

In-Person Events: And, we have some in-person events coming up where you can certainly learn a lot more about SQL Server, and specifically statistics:

SQLBits in London – March 31-April 4, 2020

My FULL-DAY workshop: Statistics for Performance: Internals, Analysis, Problem Solving

SQLintersection in Orlando, FL – April 5-10, 2020

My 60 minute conference session: Statistics: Internals, Analysis, and Solutions

SQLskills Immersion Events

IEPTO1 has a few modules (2+ DAYS) related to indexing and statistics!

I HIGHLY recommend that you #NeverStopLearning and that you access as many different mediums / learning paths as possible. This stuff isn’t simple and it takes time to really digest it and LEARN it.

Thanks for reading!

-k

One thought on “SQLskills SQL101: Why are Statistics so Important?”